## Add the label monitoring=enabled to the master nodes

```shell
kubectl label node k8s-master71u monitoring=enabled
kubectl label node k8s-master72u monitoring=enabled
kubectl label node k8s-master73u monitoring=enabled
```
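You may need to reapply the label later, for example after a master is rebuilt. The three commands above can be wrapped in a small function for that. This is a minimal sketch that assumes only `kubectl` on the PATH. The function name `label_monitoring_nodes` is hypothetical. `--overwrite` keeps a re-run from failing when the label is already set.

```shell
#!/bin/sh
# Hypothetical helper: add monitoring=enabled to every node passed as an argument.
# --overwrite makes re-runs succeed even if the label already exists.
label_monitoring_nodes() {
  for node in "$@"; do
    kubectl label node "$node" monitoring=enabled --overwrite || return 1
  done
}

# Example usage (requires cluster access):
#   label_monitoring_nodes k8s-master71u k8s-master72u k8s-master73u
#   kubectl get nodes -l monitoring=enabled
```

Afterwards, `kubectl get nodes -l monitoring=enabled` should list exactly the three masters.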

## When deploying, edit the Helm values YAML

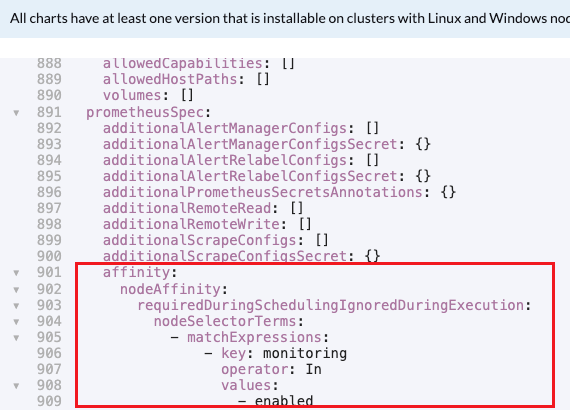

```yaml
prometheusSpec:
  additionalAlertManagerConfigs: []
  additionalAlertManagerConfigsSecret: {}
  additionalAlertRelabelConfigs: []
  additionalAlertRelabelConfigsSecret: {}
  additionalPrometheusSecretsAnnotations: {}
  additionalRemoteRead: []
  additionalRemoteWrite: []
  additionalScrapeConfigs: []
  additionalScrapeConfigsSecret: {}
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
          - matchExpressions:
              - key: monitoring
                operator: In
                values:
                  - enabled
```

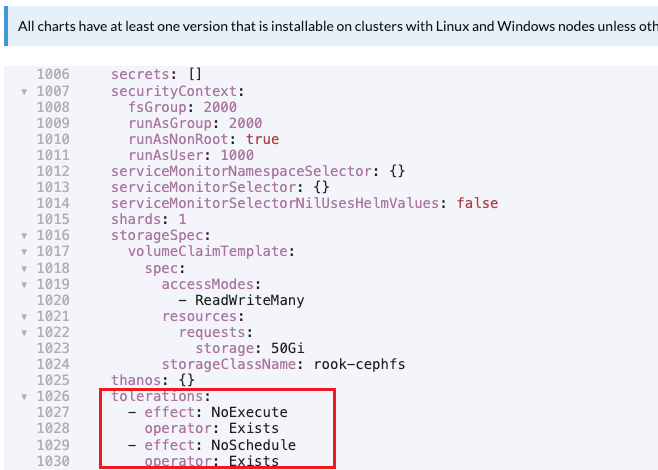

```yaml
prometheusSpec:
  # ...
  storageSpec:
    volumeClaimTemplate:
      spec:
        accessModes:
          - ReadWriteMany
        resources:
          requests:
            storage: 50Gi
        storageClassName: rook-cephfs
  thanos: {}
  tolerations:
    - effect: NoExecute
      operator: Exists
    - effect: NoSchedule
      operator: Exists
```
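The `nodeAffinity` term above only admits nodes whose `monitoring` label value is in the set `{enabled}`. The two `tolerations` have no `key` and use `operator: Exists`, so they tolerate every `NoExecute` and every `NoSchedule` taint, including the taints on the masters. Below is a minimal sketch for previewing which nodes the affinity can match and what taint effects they carry. It assumes `kubectl` access, and `preview_monitoring_nodes` is a hypothetical name. The set-based selector `monitoring in (enabled)` is the command-line equivalent of the `matchExpressions` entry.

```shell
#!/bin/sh
# Hypothetical preview helper: list the nodes the required nodeAffinity can match,
# together with the effects of their taints.
# 'monitoring in (enabled)' mirrors matchExpressions: key=monitoring, operator=In, values=[enabled].
preview_monitoring_nodes() {
  kubectl get nodes -l 'monitoring in (enabled)' \
    -o custom-columns='NAME:.metadata.name,TAINT_EFFECTS:.spec.taints[*].effect'
}

# Example usage (requires cluster access):
#   preview_monitoring_nodes
```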

## Prometheus is scheduled onto a master, but its status stays at Init:0/1

```console
root@k8s-master71u:~# kubectl get pod -n cattle-monitoring-system -o wide
NAME                                                     READY   STATUS     RESTARTS   AGE     IP               NODE            NOMINATED NODE   READINESS GATES
alertmanager-rancher-monitoring-alertmanager-0           2/2     Running    0          18m     10.201.14.153    k8s-node75u     <none>           <none>
prometheus-rancher-monitoring-prometheus-0               0/3     Init:0/1   0          2m24s   <none>           k8s-master71u   <none>           <none>
rancher-monitoring-grafana-568d4fc6d5-cvw6z              4/4     Running    0          18m     10.201.255.201   k8s-node76u     <none>           <none>
rancher-monitoring-kube-state-metrics-56b4477cc-gp4lz    1/1     Running    0          18m     10.201.255.214   k8s-node76u     <none>           <none>
rancher-monitoring-operator-679b99d785-8x79c             1/1     Running    0          18m     10.201.14.154    k8s-node75u     <none>           <none>
rancher-monitoring-prometheus-adapter-7494f789f6-kgjdv   1/1     Running    0          18m     10.201.14.140    k8s-node75u     <none>           <none>
rancher-monitoring-prometheus-node-exporter-gq66p        1/1     Running    0          18m     192.168.1.72     k8s-master72u   <none>           <none>
rancher-monitoring-prometheus-node-exporter-jvdc2        1/1     Running    0          18m     192.168.1.76     k8s-node76u     <none>           <none>
rancher-monitoring-prometheus-node-exporter-nmqq2        1/1     Running    0          18m     192.168.1.73     k8s-master73u   <none>           <none>
rancher-monitoring-prometheus-node-exporter-rcldf        1/1     Running    0          18m     192.168.1.75     k8s-node75u     <none>           <none>
rancher-monitoring-prometheus-node-exporter-rk6pz        1/1     Running    0          18m     192.168.1.71     k8s-master71u   <none>           <none>
```

## Find the cause

```console
root@k8s-master71u:~# kubectl describe pod prometheus-rancher-monitoring-prometheus-0 -n cattle-monitoring-system
Events:
  Type     Reason       Age    From               Message
  ----     ------       ----   ----               -------
  Normal   Scheduled    2m39s  default-scheduler  Successfully assigned cattle-monitoring-system/prometheus-rancher-monitoring-prometheus-0 to k8s-master71u
  Warning  FailedMount  112s   kubelet            MountVolume.MountDevice failed for volume "pvc-972b866f-bc9a-42e0-a34a-0f2a0aa8acac" : kubernetes.io/csi: attacher.MountDevice failed to create newCsiDriverClient: driver name rook-ceph.cephfs.csi.ceph.com not found in the list of registered CSI drivers
  Warning  FailedMount  37s    kubelet            Unable to attach or mount volumes: unmounted volumes=[prometheus-rancher-monitoring-prometheus-db], unattached volumes=[prometheus-rancher-monitoring-prometheus-db config kube-api-access-85ljn prometheus-nginx tls-assets nginx-home config-out prometheus-rancher-monitoring-prometheus-rulefiles-0 web-config]: timed out waiting for the condition
```
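The `MountVolume.MountDevice` event points at the cause. The kubelet on k8s-master71u has no registered `rook-ceph.cephfs.csi.ceph.com` driver, so it cannot mount the CephFS-backed PVC. Each node's registered drivers are recorded in its CSINode object under `spec.drivers`. Below is a minimal sketch that checks one node for one driver. It assumes `kubectl` access, and the function name `csi_driver_registered` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: exit 0 if DRIVER appears in NODE's CSINode spec.drivers list.
# Arguments: $1 = node name, $2 = CSI driver name.
csi_driver_registered() {
  node=$1
  driver=$2
  # Space-separated list of driver names registered by the kubelet on this node.
  drivers=$(kubectl get csinode "$node" -o jsonpath='{.spec.drivers[*].name}') || return 1
  for d in $drivers; do
    [ "$d" = "$driver" ] && return 0
  done
  return 1
}

# Example usage (requires cluster access):
#   csi_driver_registered k8s-master71u rook-ceph.cephfs.csi.ceph.com || echo "not registered"
```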

Reference: https://www.cnblogs.com/lswweb/p/13860186.html

Check the current CSI nodes and drivers:

```console
root@k8s-master71u:~# kubectl get csinodes
NAME            DRIVERS   AGE
k8s-master71u   0         106d
k8s-master72u   0         106d
k8s-master73u   0         106d
k8s-node75u     2         106d
k8s-node76u     2         106d
root@k8s-master71u:~# kubectl get csidrivers
NAME                            ATTACHREQUIRED   PODINFOONMOUNT   STORAGECAPACITY   TOKENREQUESTS   REQUIRESREPUBLISH   MODES        AGE
rook-ceph.cephfs.csi.ceph.com   true             false            false             <unset>         false               Persistent   91d
rook-ceph.rbd.csi.ceph.com      true             false            false             <unset>         false               Persistent   91d
```
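The two CSIDriver objects exist cluster-wide. However, the DRIVERS column of `kubectl get csinodes` shows that no driver is registered on any of the three masters. Below is a minimal sketch that extracts such nodes from that output. It assumes the default column layout (NAME, DRIVERS, AGE), and the function name `nodes_without_csi_drivers` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: read "kubectl get csinodes" output on stdin and print
# the nodes whose DRIVERS column is 0 (no CSI driver registered there).
nodes_without_csi_drivers() {
  # NR > 1 skips the header row; $2 is the DRIVERS column.
  awk 'NR > 1 && $2 == 0 { print $1 }'
}

# Example usage (requires cluster access):
#   kubectl get csinodes | nodes_without_csi_drivers
```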

```console
root@k8s-master71u:~/rook/deploy/examples# kubectl get pod -n rook-ceph -o wide | grep csi
csi-cephfsplugin-7b5fj                          2/2     Running   10 (24h ago)   91d   192.168.1.76     k8s-node76u   <none>           <none>
csi-cephfsplugin-k2xgp                          2/2     Running   10 (24h ago)   91d   192.168.1.75     k8s-node75u   <none>           <none>
csi-cephfsplugin-provisioner-668dfcf95b-qbfpz   5/5     Running   27 (24h ago)   91d   10.201.14.191    k8s-node75u   <none>           <none>
csi-cephfsplugin-provisioner-668dfcf95b-xvg52   5/5     Running   25 (24h ago)   91d   10.201.255.236   k8s-node76u   <none>           <none>
csi-rbdplugin-ddxqm                             2/2     Running   10 (24h ago)   91d   192.168.1.75     k8s-node75u   <none>           <none>
csi-rbdplugin-dlv8p                             2/2     Running   10 (24h ago)   91d   192.168.1.76     k8s-node76u   <none>           <none>
csi-rbdplugin-provisioner-5b78f67bbb-76qjd      5/5     Running   27 (24h ago)   91d   10.201.14.188    k8s-node75u   <none>           <none>
csi-rbdplugin-provisioner-5b78f67bbb-p8jj8      5/5     Running   25 (24h ago)   91d   10.201.255.204   k8s-node76u   <none>           <none>
```
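The `csi-cephfsplugin-*` and `csi-rbdplugin-*` pods are the per-node plugin (DaemonSet) pods, as opposed to the `*-provisioner-*` Deployment pods. In this listing they run only on k8s-node75u and k8s-node76u. Below is a minimal sketch that lists the nodes running plugin pods with a given name prefix, taken from the `-o wide` output. The function name `csi_plugin_nodes` is hypothetical. The sketch assumes NOMINATED NODE and READINESS GATES are the last two single-token fields, so NODE is read as the third-to-last field. A RESTARTS value such as `10 (24h ago)` spans several fields and would otherwise shift the columns.

```shell
#!/bin/sh
# Hypothetical helper: read "kubectl get pod -n rook-ceph -o wide" output on stdin
# and print (sorted, de-duplicated) the nodes running pods whose name starts with
# the prefix in $1, skipping the provisioner Deployment pods.
csi_plugin_nodes() {
  prefix=$1
  # NODE is $(NF-2): the last two fields are NOMINATED NODE and READINESS GATES.
  awk -v p="$prefix" 'index($1, p) == 1 && $1 !~ /provisioner/ { print $(NF-2) }' | sort -u
}

# Example usage (requires cluster access):
#   kubectl get pod -n rook-ceph -o wide | csi_plugin_nodes csi-cephfsplugin-
```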

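The three masters report 0 drivers, and the csi-cephfsplugin and csi-rbdplugin pods run only on k8s-node75u and k8s-node76u. So the CephFS driver is never registered on k8s-master71u. Add tolerations for the master nodes' taints so that the CSI pods are also deployed to the masters: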

```console
root@k8s-master71u:~/rook/deploy/examples# vim operator.yaml
```

```yaml
  # (Optional) CephCSI provisioner NodeAffinity (applied to both CephFS and RBD provisioner).
  # CSI_PROVISIONER_NODE_AFFINITY: "role=storage-node; storage=rook, ceph"
  # (Optional) CephCSI provisioner tolerations list(applied to both CephFS and RBD provisioner).
  # Put here list of taints you want to tolerate in YAML format.
  # CSI provisioner would be best to start on the same nodes as other ceph daemons.
  CSI_PROVISIONER_TOLERATIONS: |
    - effect: NoSchedule
      key: node-role.kubernetes.io/control-plane
      operator: Exists
    - effect: NoExecute
      key: node-role.kubernetes.io/etcd
      operator: Exists
  # (Optional) CephCSI plugin NodeAffinity (applied to both CephFS and RBD plugin).
  # CSI_PLUGIN_NODE_AFFINITY: "role=storage-node; storage=rook, ceph"
  # (Optional) CephCSI plugin tolerations list(applied to both CephFS and RBD plugin).
  # Put here list of taints you want to tolerate in YAML format.
  # CSI plugins need to be started on all the nodes where the clients need to mount the storage.
  CSI_PLUGIN_TOLERATIONS: |
    - effect: NoSchedule
      key: node-role.kubernetes.io/control-plane
      operator: Exists
    - effect: NoExecute
      key: node-role.kubernetes.io/etcd
      operator: Exists
```
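These tolerations only help if their `key` and `effect` match the taints actually present on the masters. Below is a minimal sketch that prints each node's taints for comparison. It assumes `kubectl` access, and the function name `print_node_taints` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print every taint on the given nodes as "NODE key=value:effect",
# so the keys in CSI_PLUGIN_TOLERATIONS / CSI_PROVISIONER_TOLERATIONS can be checked.
print_node_taints() {
  for node in "$@"; do
    kubectl get node "$node" \
      -o jsonpath='{range .spec.taints[*]}{.key}={.value}:{.effect}{"\n"}{end}' |
      sed "s/^/$node /"
  done
}

# Example usage (requires cluster access):
#   print_node_taints k8s-master71u k8s-master72u k8s-master73u
```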

```console
root@k8s-master71u:~/rook/deploy/examples# kubectl apply -f operator.yaml
```
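After `kubectl apply`, the Rook operator updates the CSI plugin DaemonSets with the new tolerations. Below is a minimal sketch that waits for both to finish rolling out. It assumes the DaemonSet names match the pod-name prefixes `csi-cephfsplugin` and `csi-rbdplugin` seen in this section. The function name `wait_for_csi_plugins` and the 300s timeout are hypothetical choices.

```shell
#!/bin/sh
# Hypothetical helper: wait until both Rook CSI plugin DaemonSets have rolled out.
# DaemonSet names are assumed from the pod names (csi-cephfsplugin-*, csi-rbdplugin-*).
wait_for_csi_plugins() {
  for ds in csi-cephfsplugin csi-rbdplugin; do
    kubectl -n rook-ceph rollout status "daemonset/$ds" --timeout=300s || return 1
  done
}

# Example usage (requires cluster access):
#   wait_for_csi_plugins && kubectl get csinodes
```

The operator may need a moment to update the DaemonSets. A `rollout status` run immediately after `apply` can therefore still see the old generation. Checking `kubectl get csinodes` afterwards is the more direct confirmation.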

```console
root@k8s-master71u:~/rook/deploy/examples# kubectl get pod -n rook-ceph -o wide | grep csi
csi-cephfsplugin-2cj79                          2/2     Running   0          5m29s   192.168.1.72     k8s-master72u   <none>           <none>
csi-cephfsplugin-b4jcd                          2/2     Running   0          5m29s   192.168.1.71     k8s-master71u   <none>           <none>
csi-cephfsplugin-cmfvm                          2/2     Running   0          117s    192.168.1.75     k8s-node75u     <none>           <none>
csi-cephfsplugin-ldml2                          2/2     Running   0          114s    192.168.1.76     k8s-node76u     <none>           <none>
csi-cephfsplugin-provisioner-75d8bf7fc5-69rw9   5/5     Running   0          5m25s   10.201.255.235   k8s-node76u     <none>           <none>
csi-cephfsplugin-provisioner-75d8bf7fc5-vnqzc   5/5     Running   0          5m25s   10.201.14.162    k8s-node75u     <none>           <none>
csi-cephfsplugin-vbxv2                          2/2     Running   0          5m29s   192.168.1.73     k8s-master73u   <none>           <none>
csi-rbdplugin-8glkf                             2/2     Running   0          113s    192.168.1.75     k8s-node75u     <none>           <none>
csi-rbdplugin-d2cbd                             2/2     Running   0          5m29s   192.168.1.73     k8s-master73u   <none>           <none>
csi-rbdplugin-ltlzk                             2/2     Running   0          117s    192.168.1.76     k8s-node76u     <none>           <none>
csi-rbdplugin-msj62                             2/2     Running   0          5m29s   192.168.1.72     k8s-master72u   <none>           <none>
csi-rbdplugin-provisioner-5f74ff45d5-kfg9c      5/5     Running   0          5m26s   10.201.255.249   k8s-node76u     <none>           <none>
csi-rbdplugin-provisioner-5f74ff45d5-sq2ph      5/5     Running   0          5m26s   10.201.14.161    k8s-node75u     <none>           <none>
csi-rbdplugin-tzsbz                             2/2     Running   0          5m29s   192.168.1.71     k8s-master71u   <none>           <none>
```

```console
root@k8s-master71u:~/rook/deploy/examples# kubectl get csinodes
NAME            DRIVERS   AGE
k8s-master71u   2         106d
k8s-master72u   2         106d
k8s-master73u   2         106d
k8s-node75u     2         106d
k8s-node76u     2         106d
```

Prometheus is now running successfully on the master (k8s-master71u):

```console
root@k8s-master71u:~/rook/deploy/examples# kubectl get pod -n cattle-monitoring-system -o wide
NAME                                                     READY   STATUS    RESTARTS   AGE   IP               NODE            NOMINATED NODE   READINESS GATES
alertmanager-rancher-monitoring-alertmanager-0           2/2     Running   0          67m   10.201.14.153    k8s-node75u     <none>           <none>
prometheus-rancher-monitoring-prometheus-0               3/3     Running   0          51m   10.201.133.34    k8s-master71u   <none>           <none>
rancher-monitoring-grafana-568d4fc6d5-cvw6z              4/4     Running   0          68m   10.201.255.201   k8s-node76u     <none>           <none>
rancher-monitoring-kube-state-metrics-56b4477cc-gp4lz    1/1     Running   0          68m   10.201.255.214   k8s-node76u     <none>           <none>
rancher-monitoring-operator-679b99d785-8x79c             1/1     Running   0          68m   10.201.14.154    k8s-node75u     <none>           <none>
rancher-monitoring-prometheus-adapter-7494f789f6-kgjdv   1/1     Running   0          68m   10.201.14.140    k8s-node75u     <none>           <none>
rancher-monitoring-prometheus-node-exporter-gq66p        1/1     Running   0          68m   192.168.1.72     k8s-master72u   <none>           <none>
rancher-monitoring-prometheus-node-exporter-jvdc2        1/1     Running   0          68m   192.168.1.76     k8s-node76u     <none>           <none>
rancher-monitoring-prometheus-node-exporter-nmqq2        1/1     Running   0          68m   192.168.1.73     k8s-master73u   <none>           <none>
rancher-monitoring-prometheus-node-exporter-rcldf        1/1     Running   0          68m   192.168.1.75     k8s-node75u     <none>           <none>
rancher-monitoring-prometheus-node-exporter-rk6pz        1/1     Running   0          68m   192.168.1.71     k8s-master71u   <none>           <none>
```