
https://rook.io/docs/rook/latest-release/Getting-Started/intro/

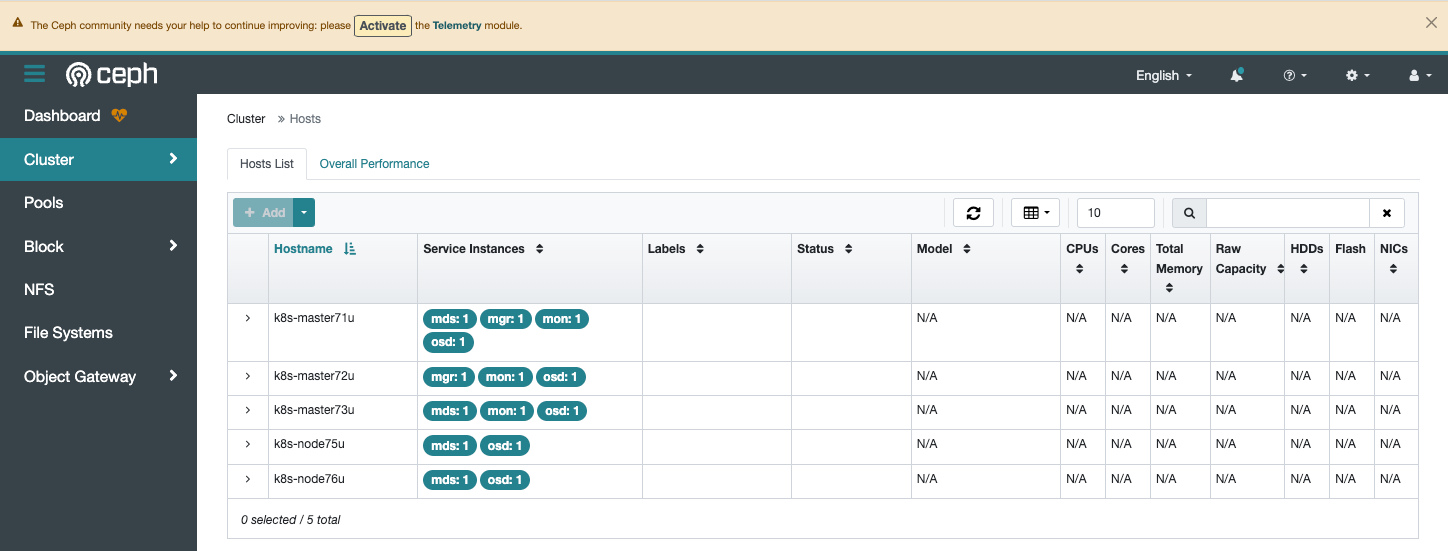

| Node | IP | K8s role | Ceph components | Disk (16G) |
|---|---|---|---|---|
| k8s-master71u | 192.168.1.71 | master | mon mgr osd mds | /dev/sdb |
| k8s-master72u | 192.168.1.72 | master | mon mgr osd mds | /dev/sdb |
| k8s-master73u | 192.168.1.73 | master | mon osd mds | /dev/sdb |
| k8s-node75u | 192.168.1.75 | worker | osd mds | /dev/sdb |
| k8s-node76u | 192.168.1.76 | worker | osd | /dev/sdb |

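Confirm that the disk has never been formatted: in the `lsblk -f` output, sdb must show no FSTYPE and no partitions (Rook only uses raw devices).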

root@k8s-master71u:~# lsblk
NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINTS
loop0 7:0 0 63.4M 1 loop /snap/core20/1974
loop1 7:1 0 63.5M 1 loop /snap/core20/2015
loop2 7:2 0 111.9M 1 loop /snap/lxd/24322
loop3 7:3 0 40.8M 1 loop /snap/snapd/20092
loop4 7:4 0 40.9M 1 loop /snap/snapd/20290
sda 8:0 0 50G 0 disk
├─sda1 8:1 0 1M 0 part
├─sda2 8:2 0 2G 0 part /boot
└─sda3 8:3 0 48G 0 part
  └─ubuntu--vg-root 253:0 0 48G 0 lvm /var/lib/kubelet/pods/ce7174f8-ad8f-4f42-8e58-2abca8c91424/volume-subpaths/tigera-ca-bundle/calico-node/1
                                      /
sdb 8:16 0 16G 0 disk
sr0 11:0 1 1024M 0 rom

root@k8s-master71u:~# lsblk -f
NAME FSTYPE FSVER LABEL UUID FSAVAIL FSUSE% MOUNTPOINTS
loop0 squash 4.0 0 100% /snap/core20/1974
loop1 squash 4.0 0 100% /snap/core20/2015
loop2 squash 4.0 0 100% /snap/lxd/24322
loop3 squash 4.0 0 100% /snap/snapd/20092
loop4 squash 4.0 0 100% /snap/snapd/20290
sda
├─sda1
├─sda2 xfs f681192a-1cf2-4362-a74c-745374011700 1.8G 9% /boot
└─sda3 LVM2_m LVM2 JEduSv-mV9g-tzdJ-sYEc-6piR-6nAo-48Srdh
  └─ubuntu--vg-root xfs 19cd87ec-9741-4295-9762-e87fb4f472c8 37.9G 21% /var/lib/kubelet/pods/ce7174f8-ad8f-4f42-8e58-2abca8c91424/volume-subpaths/tigera-ca-bundle/calico-node/1
                                                                      /
sdb

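The same check can be scripted so it is easy to run on all five nodes. This is a minimal sketch that assumes "safe for an OSD" means no filesystem/LVM signature and no partitions on the device; the function name `check_osd_disk` is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: report whether a block device looks raw enough for Rook to use as an OSD.
# Assumption: "clean" = no filesystem/LVM signature and no partitions.
check_osd_disk() {
  local dev="$1" fstypes parts
  # FSTYPE is blank for an unformatted disk; child rows (partitions, LVs) are listed too.
  fstypes=$(lsblk -no FSTYPE "$dev" | awk 'NF { print $1 }' | paste -sd, -)
  # Count the rows whose TYPE is "part", i.e. partitions on this device.
  parts=$(lsblk -no TYPE "$dev" | awk '$1 == "part" { n++ } END { print n + 0 }')
  if [ -n "$fstypes" ]; then
    echo "$dev: NOT clean (signatures: $fstypes)"
    return 1
  fi
  if [ "$parts" -gt 0 ]; then
    echo "$dev: NOT clean ($parts partition(s))"
    return 1
  fi
  echo "$dev: clean"
}

# Usage on each node:  check_osd_disk /dev/sdb
```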

Deploying the Ceph cluster

Apply the following three YAML files in order:

rook/deploy/examples/crds.yaml

rook/deploy/examples/common.yaml

rook/deploy/examples/operator.yaml

root@k8s-master71u:~# git clone --single-branch --branch v1.12.8 https://github.com/rook/rook.git

root@k8s-master71u:~# cd rook/deploy/examples

root@k8s-master71u:~/rook/deploy/examples# kubectl create -f crds.yaml

root@k8s-master71u:~/rook/deploy/examples# kubectl create -f common.yaml

root@k8s-master71u:~/rook/deploy/examples# kubectl create -f operator.yaml

# Confirm that the rook-ceph-operator pod has started

root@k8s-master71u:~/rook/deploy/examples# kubectl get deployments.apps -n rook-ceph

NAME READY UP-TO-DATE AVAILABLE AGE

rook-ceph-operator 1/1 1 1 22s

root@k8s-master71u:~/rook/deploy/examples# kubectl get pod -n rook-ceph

NAME READY STATUS RESTARTS AGE

rook-ceph-operator-58775c8bdf-vmdkn 1/1 Running 0 39s
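The three `kubectl create` calls plus the readiness check can be wrapped in one function. A sketch, assuming it is run next to the rook clone (the default path is an assumption); note that `kubectl create` fails on a second run because the objects already exist.

```bash
#!/usr/bin/env bash
# Sketch: apply the operator manifests in order, then wait for the operator Deployment.
deploy_rook_operator() {
  local dir="${1:-rook/deploy/examples}" f
  for f in crds.yaml common.yaml operator.yaml; do
    # Order matters: CRDs, then namespace/RBAC (common.yaml), then the operator itself.
    kubectl create -f "$dir/$f" || return 1
  done
  # Blocks until the Deployment is rolled out, or fails after the timeout.
  kubectl -n rook-ceph rollout status deployment/rook-ceph-operator --timeout=300s
}

# Usage:  deploy_rook_operator ~/rook/deploy/examples
```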

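Apply cluster.yaml

Sections changed in cluster.yaml:

# Role scheduling (via node affinity)

# Per-daemon resources

# Disk assignment

# Disable automatic use of all nodes and devices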

root@k8s-master71u:~/rook/deploy/examples# vim cluster.yaml

# Role scheduling (via node affinity); this block sits under spec: in cluster.yaml
placement:
  mon:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
          - matchExpressions:
              - key: ceph-mon
                operator: In
                values:
                  - enabled
    # Tolerate any NoSchedule taint, e.g. the control-plane taint on the masters
    tolerations:
      - effect: NoSchedule
        operator: Exists
  mgr:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
          - matchExpressions:
              - key: ceph-mgr
                operator: In
                values:
                  - enabled
    tolerations:
      - effect: NoSchedule
        operator: Exists
  osd:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
          - matchExpressions:
              - key: ceph-osd
                operator: In
                values:
                  - enabled
    tolerations:
      - effect: NoSchedule
        operator: Exists
  prepareosd:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
          - matchExpressions:
              - key: ceph-osd
                operator: In
                values:
                  - enabled
    tolerations:
      - effect: NoSchedule
        operator: Exists

# Per-daemon resources (under spec:)
resources:
  mon:
    limits:
      cpu: "1000m"
      memory: "2048Mi"
    requests:
      cpu: "1000m"
      memory: "2048Mi"
  mgr:
    limits:
      cpu: "1000m"
      memory: "1024Mi"
    requests:
      cpu: "1000m"
      memory: "1024Mi"
  osd:
    limits:
      cpu: "1000m"
      memory: "2048Mi"
    requests:
      cpu: "1000m"
      memory: "2048Mi"

# Disk assignment (under spec:); nodes: must be nested inside storage:
storage: # cluster level storage configuration and selection
  # Set both to false so that the nodes and devices can be listed explicitly below
  useAllNodes: false
  useAllDevices: false
  nodes:
    - name: "k8s-master71u"
      devices:
        - name: "sdb"
      config:
        storeType: bluestore
        # journalSizeMB is a legacy FileStore setting; with BlueStore it is most likely ignored
        journalSizeMB: "4096"
    - name: "k8s-master72u"
      devices:
        - name: "sdb"
      config:
        storeType: bluestore
        journalSizeMB: "4096"
    - name: "k8s-master73u"
      devices:
        - name: "sdb"
      config:
        storeType: bluestore
        journalSizeMB: "4096"
    - name: "k8s-node75u"
      devices:
        - name: "sdb"
      config:
        storeType: bluestore
        journalSizeMB: "4096"
    - name: "k8s-node76u"
      devices:
        - name: "sdb"
      config:
        storeType: bluestore
        journalSizeMB: "4096"
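Writing one `nodes:` entry per host by hand is repetitive. A small generator can print the list for any set of hosts that share the same device. This is a sketch: the function name `gen_storage_nodes` is hypothetical, it omits the per-node `config:` block, and its output starts at column 0, so indent it to sit under `spec.storage` when pasting.

```bash
#!/usr/bin/env bash
# Sketch: print a storage.nodes list where every host contributes the same device.
# Output starts at column 0; indent it (e.g. with sed 's/^/    /') before pasting.
gen_storage_nodes() {
  local device="$1" host
  shift
  echo "nodes:"
  for host in "$@"; do
    echo "  - name: \"$host\""
    echo "    devices:"
    echo "      - name: \"$device\""
  done
}

# Usage:
#   gen_storage_nodes sdb k8s-master71u k8s-master72u k8s-master73u k8s-node75u k8s-node76u
```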

Label the nodes

root@k8s-master71u:~/rook/deploy/examples# kubectl label node k8s-master71u ceph-mon=enabled

root@k8s-master71u:~/rook/deploy/examples# kubectl label node k8s-master72u ceph-mon=enabled

root@k8s-master71u:~/rook/deploy/examples# kubectl label node k8s-master73u ceph-mon=enabled

root@k8s-master71u:~/rook/deploy/examples# kubectl label node k8s-master71u ceph-mgr=enabled

root@k8s-master71u:~/rook/deploy/examples# kubectl label node k8s-master72u ceph-mgr=enabled

root@k8s-master71u:~/rook/deploy/examples# kubectl label node k8s-master73u ceph-mgr=enabled

root@k8s-master71u:~/rook/deploy/examples# kubectl label node k8s-master71u ceph-osd=enabled

root@k8s-master71u:~/rook/deploy/examples# kubectl label node k8s-master72u ceph-osd=enabled

root@k8s-master71u:~/rook/deploy/examples# kubectl label node k8s-master73u ceph-osd=enabled

root@k8s-master71u:~/rook/deploy/examples# kubectl label node k8s-node75u ceph-osd=enabled

root@k8s-master71u:~/rook/deploy/examples# kubectl label node k8s-node76u ceph-osd=enabled

root@k8s-master71u:~/rook/deploy/examples# kubectl create -f cluster.yaml

cephcluster.ceph.rook.io/rook-ceph created
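The eleven `kubectl label` commands above follow the node table, so they can be driven from a role-to-node mapping. A sketch under the assumption that the mapping below matches your cluster (it mirrors the commands above, plus the ceph-mds labels applied later for CephFS); `--overwrite` makes re-runs succeed instead of failing on an existing label.

```bash
#!/usr/bin/env bash
# Sketch: map each Ceph role to the nodes that should carry ceph-<role>=enabled.
ceph_role_nodes() {
  case "$1" in
    mon|mgr) echo "k8s-master71u k8s-master72u k8s-master73u" ;;
    osd|mds) echo "k8s-master71u k8s-master72u k8s-master73u k8s-node75u k8s-node76u" ;;
  esac
}

label_ceph_nodes() {
  local role node
  for role in "$@"; do
    # Intentionally unquoted: split the space-separated node list.
    for node in $(ceph_role_nodes "$role"); do
      kubectl label node "$node" "ceph-$role=enabled" --overwrite || return 1
    done
  done
}

# Usage:  label_ceph_nodes mon mgr osd      (later, for CephFS: label_ceph_nodes mds)
```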

Check the deployment result (some pods got stuck in Pending)

In this lab, rebooting all nodes resolved it.

root@k8s-master71u:~/rook/deploy/examples# kubectl get pod -n rook-ceph

NAME READY STATUS RESTARTS AGE

csi-cephfsplugin-dfz59 2/2 Running 0 17s

csi-cephfsplugin-provisioner-668dfcf95b-dgs5l 5/5 Running 0 17s

csi-cephfsplugin-provisioner-668dfcf95b-wlz6r 5/5 Running 0 17s

csi-cephfsplugin-xqdvc 2/2 Running 0 17s

csi-rbdplugin-l294v 2/2 Running 0 17s

csi-rbdplugin-lts27 2/2 Running 0 17s

csi-rbdplugin-provisioner-5b78f67bbb-7gz4x 5/5 Running 0 17s

csi-rbdplugin-provisioner-5b78f67bbb-8w47k 5/5 Running 0 17s

rook-ceph-csi-detect-version-tg598 0/1 Completed 0 20s

rook-ceph-detect-version-z52cj 0/1 Completed 0 20s

rook-ceph-mon-a-6b8f546466-h5rp2 0/2 Pending 0 9s

rook-ceph-mon-a-canary-5554b85b75-wf78h 2/2 Running 0 12s

rook-ceph-mon-b-canary-7569748cf6-cr59z 2/2 Running 0 12s

rook-ceph-mon-c-canary-85d88b48d5-649pv 2/2 Running 0 12s

rook-ceph-operator-6bfb456b57-297f8 1/1 Running 0 31s

The mon pod cannot be scheduled:

root@k8s-master71u:~/rook/deploy/examples# kubectl describe pod rook-ceph-mon-a-6b8f546466-h5rp2 -n rook-ceph

Node-Selectors: kubernetes.io/hostname=k8s-master71u

Tolerations: :NoSchedule op=Exists

node.kubernetes.io/not-ready:NoExecute op=Exists for 300s

node.kubernetes.io/unreachable:NoExecute op=Exists for 300s

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 81s default-scheduler 0/5 nodes are available: 1 node(s) didn't satisfy existing pods anti-affinity rules, 4 node(s) didn't match Pod's node affinity/selector. preemption: 0/5 nodes are available: 1 No preemption victims found for incoming pod, 4 Preemption is not helpful for scheduling..

After rebooting all nodes, the pods come up:

root@k8s-master71u:~# kubectl get pod -n rook-ceph

NAME READY STATUS RESTARTS AGE

csi-cephfsplugin-bk8gt 2/2 Running 4 (5m36s ago) 18m

csi-cephfsplugin-provisioner-668dfcf95b-858rd 5/5 Running 10 (5m37s ago) 18m

csi-cephfsplugin-provisioner-668dfcf95b-n4wdx 5/5 Running 10 (5m36s ago) 18m

csi-cephfsplugin-rp5nh 2/2 Running 4 (5m37s ago) 18m

csi-rbdplugin-provisioner-5b78f67bbb-4vrtb 5/5 Running 10 (5m36s ago) 18m

csi-rbdplugin-provisioner-5b78f67bbb-m5789 5/5 Running 10 (5m36s ago) 18m

csi-rbdplugin-qfrtk 2/2 Running 4 (5m36s ago) 18m

csi-rbdplugin-z68hx 2/2 Running 4 (5m36s ago) 18m

rook-ceph-crashcollector-k8s-master71u-55fcdbd66c-k6pkj 1/1 Running 0 2m13s

rook-ceph-crashcollector-k8s-master72u-675646bf64-hnpw9 1/1 Running 0 2m12s

rook-ceph-crashcollector-k8s-master73u-84fdc469f7-6khkr 1/1 Running 0 2m42s

rook-ceph-crashcollector-k8s-node75u-bbc794dd9-5zbhs 1/1 Running 0 15s

rook-ceph-crashcollector-k8s-node76u-5fd59cd68b-b9pxq 1/1 Running 0 24s

rook-ceph-csi-detect-version-pqgj7 0/1 Completed 0 3m52s

rook-ceph-detect-version-rtgsq 0/1 Completed 0 13s

rook-ceph-mgr-a-767b9fc956-xm4ns 3/3 Running 0 2m49s

rook-ceph-mgr-b-8956fb9c-rvjff 3/3 Running 0 2m48s

rook-ceph-mon-a-769fd77b5b-trw4f 2/2 Running 0 9m7s

rook-ceph-mon-b-65cf769696-7dncd 2/2 Running 0 3m14s

rook-ceph-mon-c-58664986b-vqrzw 2/2 Running 0 3m2s

rook-ceph-operator-58775c8bdf-mfp6n 1/1 Running 2 (5m36s ago) 23m

rook-ceph-osd-0-55f6d9b-xrjb6 2/2 Running 0 2m13s

rook-ceph-osd-1-7db86764b-2fn48 2/2 Running 0 2m12s

rook-ceph-osd-2-84cd64ffcb-nxrkx 2/2 Running 0 2m10s

rook-ceph-osd-3-748c78f4dd-whsxk 2/2 Running 0 24s

rook-ceph-osd-4-65c79df6dd-2w7z9 1/2 Running 0 15s

rook-ceph-osd-prepare-k8s-master71u-shwfs 0/1 Completed 0 2m26s

rook-ceph-osd-prepare-k8s-master72u-z4vbt 0/1 Completed 0 2m26s

rook-ceph-osd-prepare-k8s-master73u-rgxtn 0/1 Completed 0 2m25s

rook-ceph-osd-prepare-k8s-node75u-sdm5n 0/1 Completed 0 2m24s

rook-ceph-osd-prepare-k8s-node76u-l8dlp 0/1 Completed 0 2m23s

Apply toolbox.yaml

Create the toolbox pod:

root@k8s-master71u:~/rook/deploy/examples# kubectl create -f toolbox.yaml

deployment.apps/rook-ceph-tools created

From the toolbox pod you can inspect the Ceph cluster status:

root@k8s-master71u:~/rook/deploy/examples# kubectl exec -it rook-ceph-tools-7cd4cd9c9c-kd8pf -n rook-ceph -- /bin/bash

bash-4.4$ ceph -s

cluster:

id: b5e028e3-725d-4a26-8a14-17fe4dead850

health: HEALTH_OK

services:

mon: 3 daemons, quorum a,b,c (age 5m)

mgr: a(active, since 86s), standbys: b

osd: 5 osds: 5 up (since 2m), 5 in (since 3m)

data:

pools: 1 pools, 1 pgs

objects: 2 objects, 449 KiB

usage: 41 MiB used, 80 GiB / 80 GiB avail

pgs: 1 active+clean

bash-4.4$ ceph status

cluster:

id: b5e028e3-725d-4a26-8a14-17fe4dead850

health: HEALTH_OK

services:

mon: 3 daemons, quorum a,b,c (age 6m)

mgr: a(active, since 116s), standbys: b

osd: 5 osds: 5 up (since 3m), 5 in (since 3m)

data:

pools: 1 pools, 1 pgs

objects: 2 objects, 449 KiB

usage: 41 MiB used, 80 GiB / 80 GiB avail

pgs: 1 active+clean

bash-4.4$ ceph osd status

ID HOST USED AVAIL WR OPS WR DATA RD OPS RD DATA STATE

0 k8s-master71u 8368k 15.9G 0 0 0 0 exists,up

1 k8s-master72u 8820k 15.9G 0 0 0 0 exists,up

2 k8s-master73u 8820k 15.9G 0 0 0 0 exists,up

3 k8s-node76u 8432k 15.9G 0 0 0 0 exists,up

4 k8s-node75u 7920k 15.9G 0 0 0 0 exists,up

bash-4.4$ ceph df

--- RAW STORAGE ---

CLASS SIZE AVAIL USED RAW USED %RAW USED

ssd 80 GiB 80 GiB 41 MiB 41 MiB 0.05

TOTAL 80 GiB 80 GiB 41 MiB 41 MiB 0.05

--- POOLS ---

POOL ID PGS STORED OBJECTS USED %USED MAX AVAIL

.mgr 1 1 449 KiB 2 449 KiB 0 25 GiB

bash-4.4$ rados df

POOL_NAME USED OBJECTS CLONES COPIES MISSING_ON_PRIMARY UNFOUND DEGRADED RD_OPS RD WR_OPS WR USED COMPR UNDER COMPR

.mgr 449 KiB 2 0 6 0 0 0 288 494 KiB 153 1.3 MiB 0 B 0 B

total_objects 2

total_used 41 MiB

total_avail 80 GiB

total_space 80 GiB
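The toolbox pod name (rook-ceph-tools-7cd4cd9c9c-kd8pf here) changes on every rollout. A sketch of two helpers that target the Deployment instead, relying on `kubectl exec deploy/<name>` picking one of its pods; the function names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: run ceph commands through the toolbox Deployment, not a hard-coded pod name.
ceph_tools() {
  kubectl -n rook-ceph exec deploy/rook-ceph-tools -- ceph "$@"
}

# Wait until the toolbox Deployment is rolled out before using it.
wait_for_toolbox() {
  kubectl -n rook-ceph rollout status deploy/rook-ceph-tools --timeout=120s
}

# Usage:  wait_for_toolbox && ceph_tools status && ceph_tools osd status && ceph_tools df
```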

Querying Ceph directly from the host (install ceph-common)

# Install the Ceph client tools

root@k8s-master71u:~/rook/deploy/examples# apt update

root@k8s-master71u:~/rook/deploy/examples# apt install ceph-common

# Copy the connection settings out of the toolbox pod

root@k8s-master71u:~/rook/deploy/examples# kubectl exec -it rook-ceph-tools-7cd4cd9c9c-kd8pf -n rook-ceph -- /bin/bash

bash-4.4$ cat /etc/ceph/ceph.conf

[global]

mon_host = 10.245.146.85:6789,10.245.203.29:6789,10.245.216.126:6789

[client.admin]

keyring = /etc/ceph/keyring

bash-4.4$ cat /etc/ceph/keyring

[client.admin]

key = AQA8LmZlDA21GhAAvgMoEOkXur9olDYtGkF8kQ==

# Create the same connection settings on the host

root@k8s-master71u:~/rook/deploy/examples# vim /etc/ceph/ceph.conf

[global]

mon_host = 10.245.146.85:6789,10.245.203.29:6789,10.245.216.126:6789

[client.admin]

keyring = /etc/ceph/keyring

root@k8s-master71u:~# vim /etc/ceph/keyring

[client.admin]

key = AQA8LmZlDA21GhAAvgMoEOkXur9olDYtGkF8kQ==
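Instead of retyping ceph.conf and the keyring, they can be copied straight out of the toolbox. A sketch; `CEPH_DIR` is a hypothetical override (default /etc/ceph, which needs root). Note that mon_host lists the mon Services' ClusterIPs (10.245.x, see the service list further down), which are reachable from the cluster nodes but generally not from outside the cluster.

```bash
#!/usr/bin/env bash
# Sketch: copy ceph.conf and the admin keyring from the toolbox to the host.
copy_ceph_client_config() {
  local dest="${CEPH_DIR:-/etc/ceph}" f
  mkdir -p "$dest" || return 1
  for f in ceph.conf keyring; do
    kubectl -n rook-ceph exec deploy/rook-ceph-tools -- cat "/etc/ceph/$f" > "$dest/$f" || return 1
  done
  # The keyring holds the client.admin secret: keep it readable by the owner only.
  chmod 600 "$dest/keyring"
}

# Usage (as root):  copy_ceph_client_config && ceph -s
```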

# The host can now manage Ceph directly

root@k8s-master71u:~# ceph -s

cluster:

id: b5e028e3-725d-4a26-8a14-17fe4dead850

health: HEALTH_OK

services:

mon: 3 daemons, quorum a,b,c (age 2m)

mgr: a(active, since 2m), standbys: b

osd: 5 osds: 5 up (since 2m), 5 in (since 10m)

data:

pools: 1 pools, 1 pgs

objects: 2 objects, 449 KiB

usage: 39 MiB used, 80 GiB / 80 GiB avail

pgs: 1 active+clean

Deploying CephFS

Label the nodes

root@k8s-master71u:~/rook/deploy/examples# kubectl label node k8s-master71u ceph-mds=enabled

root@k8s-master71u:~/rook/deploy/examples# kubectl label node k8s-master72u ceph-mds=enabled

root@k8s-master71u:~/rook/deploy/examples# kubectl label node k8s-master73u ceph-mds=enabled

root@k8s-master71u:~/rook/deploy/examples# kubectl label node k8s-node75u ceph-mds=enabled

root@k8s-master71u:~/rook/deploy/examples# kubectl label node k8s-node76u ceph-mds=enabled

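Apply filesystem.yaml

Sections changed in filesystem.yaml:

# activeCount: 2 (2 active + 2 standby MDS, matching the "2 hot standby" in ceph -s below)

# Scheduling via node affinity -> nodeAffinity

# Pod anti-affinity -> podAntiAffinity

This prevents two MDS daemons from running on the same host.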

root@k8s-master71u:~/rook/deploy/examples# vim filesystem.yaml
# (under spec.metadataServer: in filesystem.yaml)
activeCount: 2
placement:
  nodeAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
      nodeSelectorTerms:
        - matchExpressions:
            - key: ceph-mds
              operator: In
              values:
                - enabled
  tolerations:
    - effect: NoSchedule
      operator: Exists
  podAntiAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchExpressions:
            - key: app
              operator: In
              values:
                - rook-ceph-mds
        topologyKey: kubernetes.io/hostname
    preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 100
        podAffinityTerm:
          labelSelector:
            matchExpressions:
              - key: app
                operator: In
                values:
                  - rook-ceph-mds
          topologyKey: topology.kubernetes.io/zone

root@k8s-master71u:~/rook/deploy/examples# kubectl create -f filesystem.yaml

Check the deployment result

root@k8s-master71u:~/rook/deploy/examples# kubectl get pod -n rook-ceph -o wide | grep -i rook-ceph-mds

rook-ceph-mds-myfs-a-656c99cf6b-mp7bq 2/2 Running 0 2m37s 10.244.96.33 k8s-master73u <none> <none>

rook-ceph-mds-myfs-b-65bf4fdbcd-j9gzt 2/2 Running 0 2m36s 10.244.14.186 k8s-node75u <none> <none>

rook-ceph-mds-myfs-c-b7bd7658-tt845 2/2 Running 0 2m34s 10.244.255.213 k8s-node76u <none> <none>

rook-ceph-mds-myfs-d-dff64fb98-zb4rv 2/2 Running 0 2m31s 10.244.133.17 k8s-master71u <none> <none>
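Whether the anti-affinity rule worked can be checked mechanically: no node name should appear twice among the MDS pods. A sketch that assumes the MDS pods carry the label app=rook-ceph-mds, the same label the anti-affinity rule selects on; the function names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: print node names that host more than one MDS pod (empty = spread correctly).
mds_duplicate_nodes() {
  kubectl -n rook-ceph get pod -l app=rook-ceph-mds \
    -o jsonpath='{range .items[*]}{.spec.nodeName}{"\n"}{end}' | sort | uniq -d
}

check_mds_spread() {
  local dups
  dups=$(mds_duplicate_nodes)
  if [ -n "$dups" ]; then
    echo "nodes with more than one MDS: $dups"
    return 1
  fi
  echo "each MDS is on its own node"
}
```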

root@k8s-master71u:~/rook/deploy/examples# ceph -s

cluster:

id: b5e028e3-725d-4a26-8a14-17fe4dead850

health: HEALTH_OK

services:

mon: 3 daemons, quorum a,b,c (age 11m)

mgr: a(active, since 12m), standbys: b

mds: 2/2 daemons up, 2 hot standby

osd: 5 osds: 5 up (since 12m), 5 in (since 19m)

data:

volumes: 1/1 healthy

pools: 3 pools, 49 pgs

objects: 45 objects, 452 KiB

usage: 46 MiB used, 80 GiB / 80 GiB avail

pgs: 2.041% pgs not active

48 active+clean

1 peering

io:

client: 1.5 KiB/s rd, 3 op/s rd, 0 op/s wr

recovery: 95 B/s, 0 objects/s

Create the StorageClass (csi/cephfs/storageclass.yaml)

root@k8s-master71u:~/rook/deploy/examples/csi/cephfs# kubectl create -f storageclass.yaml

root@k8s-master71u:~/rook/deploy/examples/csi/cephfs# kubectl get storageclasses.storage.k8s.io

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

rook-cephfs rook-ceph.cephfs.csi.ceph.com Delete Immediate true 8s

root@k8s-master71u:~/rook/deploy/examples/csi/cephfs# ceph fs ls

name: myfs, metadata pool: myfs-metadata, data pools: [myfs-replicated ]

Testing the StorageClass (rook-cephfs)

Create a PVC that specifies storageClassName: "rook-cephfs"

A volume is then provisioned and mounted automatically.

root@k8s-master71u:~# cat sc.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-sc
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 3
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx
          volumeMounts:
            - name: wwwroot
              mountPath: /usr/share/nginx/html
      volumes:
        - name: wwwroot
          persistentVolumeClaim:
            claimName: web-sc
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: web-sc
spec:
  storageClassName: "rook-cephfs"
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 5Gi

root@k8s-master71u:~# kubectl create -f sc.yaml

root@k8s-master71u:~# kubectl get pod

NAME READY STATUS RESTARTS AGE

test-nginx 1/1 Running 5 (33m ago) 69d

web-sc-7b6c54fbb9-8q254 1/1 Running 0 46s

web-sc-7b6c54fbb9-hkw2z 1/1 Running 0 46s

web-sc-7b6c54fbb9-m4qlf 1/1 Running 0 46s

root@k8s-master71u:~# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pvc-ea9ac011-5fd5-4436-ad61-468e9ce95239 5Gi RWX Delete Bound default/web-sc rook-cephfs 51s

root@k8s-master71u:~# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

web-sc Bound pvc-ea9ac011-5fd5-4436-ad61-468e9ce95239 5Gi RWX rook-cephfs 54s

Verify that two pods really mount the same location

root@k8s-master71u:~# kubectl exec -ti web-sc-7b6c54fbb9-8q254 -- /bin/bash

root@web-sc-7b6c54fbb9-8q254:/#

root@web-sc-7b6c54fbb9-8q254:/# df -h

Filesystem Size Used Avail Use% Mounted on

10.245.146.85:6789,10.245.203.29:6789,10.245.216.126:6789:/volumes/csi/csi-vol-d7642fd0-5da4-4599-b69e-bc0c1b948ded/dccf324f-8ad6-40f3-9e99-6186a32d3faf 5.0G 0 5.0G 0% /usr/share/nginx/html

root@web-sc-7b6c54fbb9-8q254:/# cd /usr/share/nginx/html/

root@web-sc-7b6c54fbb9-8q254:/usr/share/nginx/html# touch 123

root@web-sc-7b6c54fbb9-8q254:/usr/share/nginx/html# ls

123

root@web-sc-7b6c54fbb9-8q254:/usr/share/nginx/html# exit

root@k8s-master71u:~# kubectl exec -ti web-sc-7b6c54fbb9-hkw2z -- /bin/bash

root@web-sc-7b6c54fbb9-hkw2z:/# df -h

Filesystem Size Used Avail Use% Mounted on

10.245.146.85:6789,10.245.203.29:6789,10.245.216.126:6789:/volumes/csi/csi-vol-d7642fd0-5da4-4599-b69e-bc0c1b948ded/dccf324f-8ad6-40f3-9e99-6186a32d3faf 5.0G 0 5.0G 0% /usr/share/nginx/html

root@web-sc-7b6c54fbb9-hkw2z:/# cd /usr/share/nginx/html/

root@web-sc-7b6c54fbb9-hkw2z:/usr/share/nginx/html# ls

123

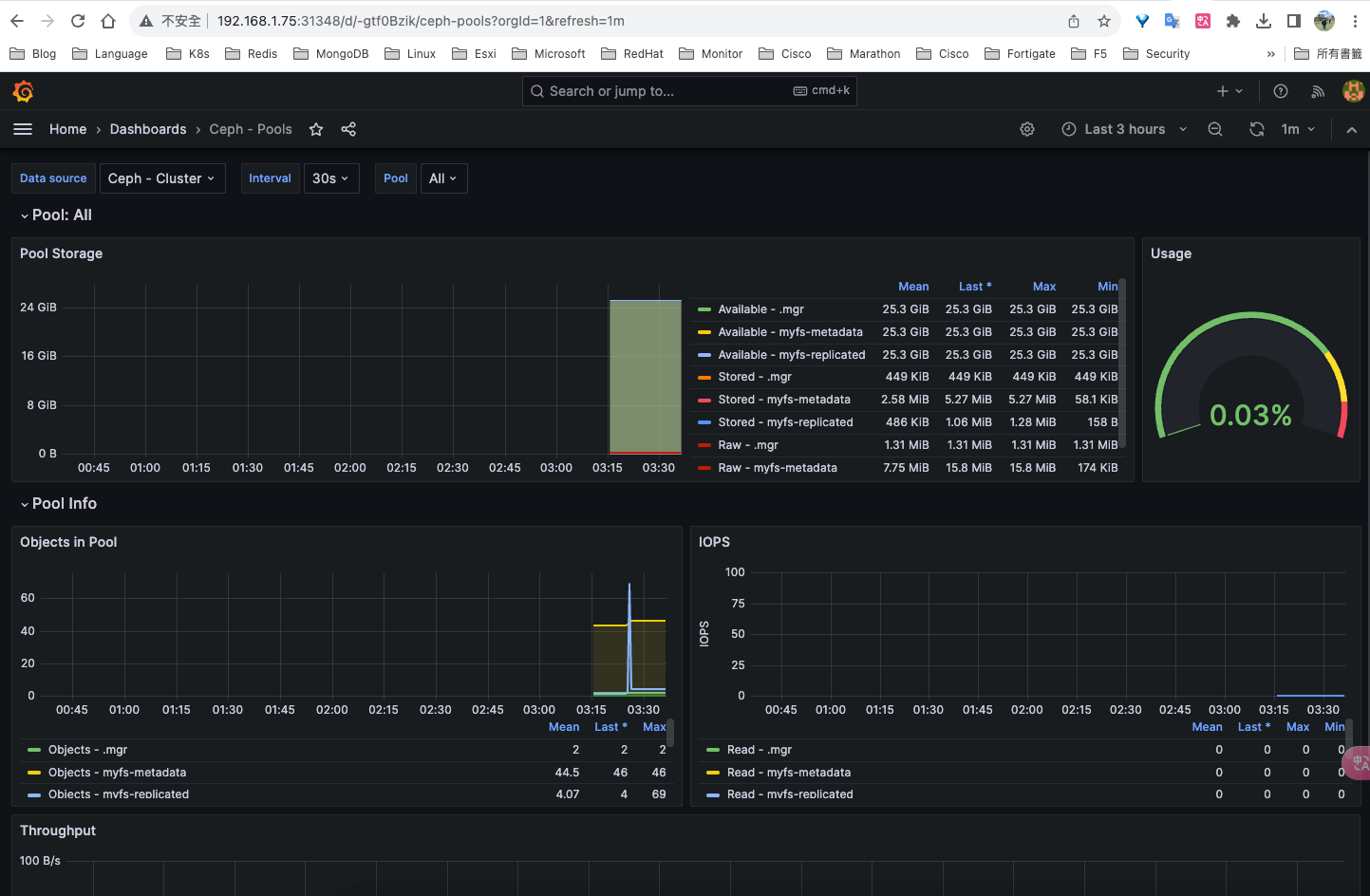
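The manual `touch 123` / `ls` test can be automated: write a marker file through one replica and read it back through another. A sketch; the function name is hypothetical and the two pod names are passed in explicitly (e.g. the web-sc pods listed above), with the mount path taken from sc.yaml.

```bash
#!/usr/bin/env bash
# Sketch: check that two pods share the same RWX volume at /usr/share/nginx/html.
check_shared_volume() {
  local pod_a="$1" pod_b="$2"
  local mount=/usr/share/nginx/html marker="rwx-check-$$" seen
  if [ -z "$pod_a" ] || [ -z "$pod_b" ]; then
    echo "usage: check_shared_volume POD_A POD_B" >&2
    return 2
  fi
  # Write the marker through the first replica...
  kubectl exec "$pod_a" -- sh -c "echo $marker > $mount/$marker" || return 1
  # ...and read it back through the second one.
  seen=$(kubectl exec "$pod_b" -- cat "$mount/$marker")
  # Remove the marker regardless of the result.
  kubectl exec "$pod_a" -- rm -f "$mount/$marker"
  [ "$seen" = "$marker" ]
}

# Usage:  check_shared_volume web-sc-7b6c54fbb9-8q254 web-sc-7b6c54fbb9-hkw2z && echo shared
```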

Ceph Dashboard

root@k8s-master71u:~/rook/deploy/examples# kubectl get services -n rook-ceph

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

rook-ceph-mgr ClusterIP 10.245.33.129 <none> 9283/TCP 34m

rook-ceph-mgr-dashboard ClusterIP 10.245.17.131 <none> 7000/TCP 34m

rook-ceph-mon-a ClusterIP 10.245.146.85 <none> 6789/TCP,3300/TCP 41m

rook-ceph-mon-b ClusterIP 10.245.203.29 <none> 6789/TCP,3300/TCP 35m

rook-ceph-mon-c ClusterIP 10.245.216.126 <none> 6789/TCP,3300/TCP 35m

root@k8s-master71u:~/rook/deploy/examples# kubectl create -f dashboard-external-https.yaml

root@k8s-master71u:~/rook/deploy/examples# kubectl get services -n rook-ceph

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

rook-ceph-mgr ClusterIP 10.245.33.129 <none> 9283/TCP 36m

rook-ceph-mgr-dashboard ClusterIP 10.245.17.131 <none> 7000/TCP 36m

rook-ceph-mgr-dashboard-external-https NodePort 10.245.106.246 <none> 8443:30901/TCP 10s

rook-ceph-mon-a ClusterIP 10.245.146.85 <none> 6789/TCP,3300/TCP 43m

rook-ceph-mon-b ClusterIP 10.245.203.29 <none> 6789/TCP,3300/TCP 37m

rook-ceph-mon-c ClusterIP 10.245.216.126 <none> 6789/TCP,3300/TCP 37m

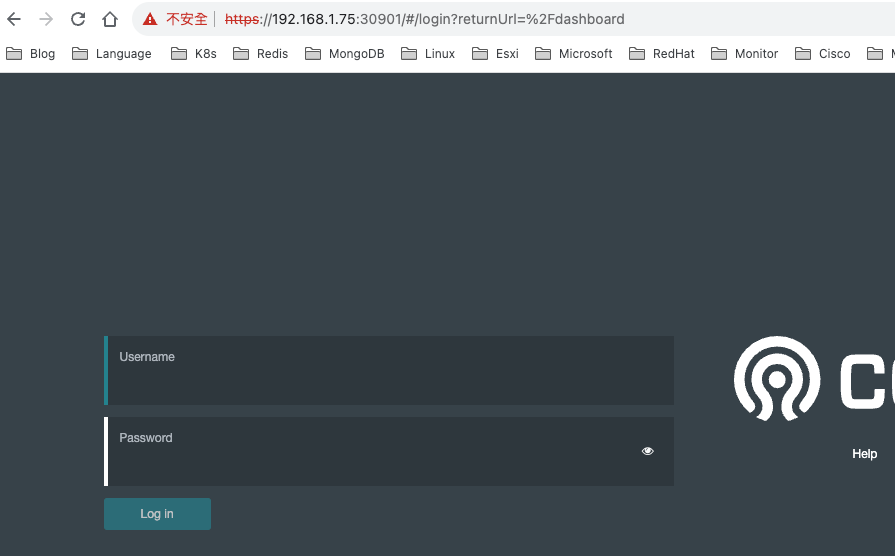

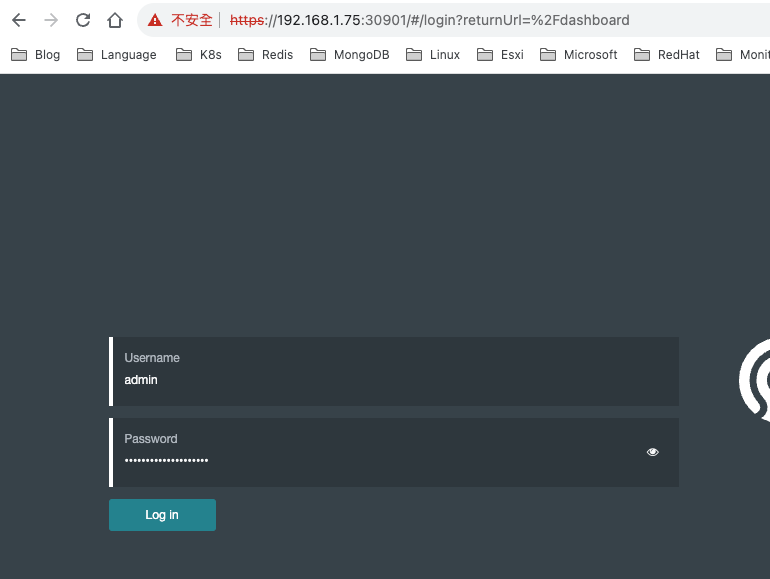

https://192.168.1.75:30901/#/login?returnUrl=%2Fdashboard

Retrieve the password

root@k8s-master71u:~/rook/deploy/examples# kubectl get secrets -n rook-ceph rook-ceph-dashboard-password -o yaml

apiVersion: v1

data:

password: Y0w5bG1Vb0QlTCpQViM2RFB3U3k=

root@k8s-master71u:~/rook/deploy/examples# echo "Y0w5bG1Vb0QlTCpQViM2RFB3U3k=" | base64 -d

cL9lmUoD%L*PV#6DPwSy
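Reading the secret as YAML and pasting the value into `base64 -d` can be done in one step with a jsonpath query. A sketch; the function name is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: fetch and decode the dashboard password in one command.
dashboard_password() {
  kubectl -n rook-ceph get secret rook-ceph-dashboard-password \
    -o jsonpath='{.data.password}' | base64 -d
  echo   # the decoded value has no trailing newline
}

# Usage:  dashboard_password   # prints cL9lmUoD%L*PV#6DPwSy for the secret shown above
```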

Change the password

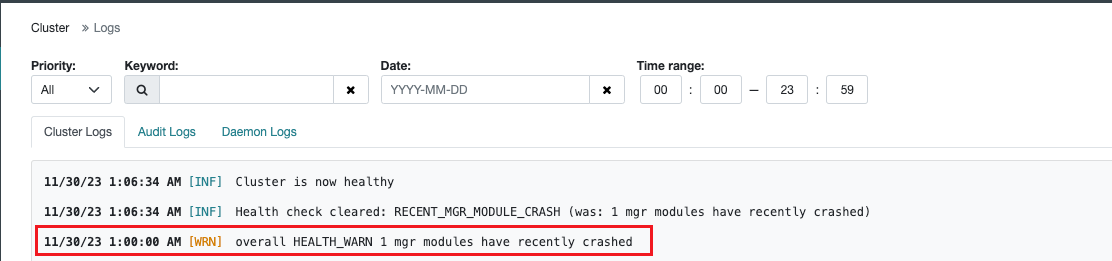

This error kept showing up, so the cluster health check reported a warning:

overall HEALTH_WARN 1 mgr modules have recently crashed

Inspect the crash report

root@k8s-master71u:~/rook/deploy/examples# ceph crash ls

ID ENTITY NEW

2023-11-28T19:02:15.741903Z_cff323ca-e2d5-415c-b5e6-e518a4a2e892 mgr.a *

root@k8s-master71u:~/rook/deploy/examples# ceph crash info 2023-11-28T19:02:15.741903Z_cff323ca-e2d5-415c-b5e6-e518a4a2e892

{

"backtrace": [

" File \"/usr/share/ceph/mgr/nfs/module.py\", line 169, in cluster_ls\n return available_clusters(self)",

" File \"/usr/share/ceph/mgr/nfs/utils.py\", line 38, in available_clusters\n completion = mgr.describe_service(service_type='nfs')",

" File \"/usr/share/ceph/mgr/orchestrator/_interface.py\", line 1488, in inner\n completion = self._oremote(method_name, args, kwargs)",

" File \"/usr/share/ceph/mgr/orchestrator/_interface.py\", line 1555, in _oremote\n raise NoOrchestrator()",

"orchestrator._interface.NoOrchestrator: No orchestrator configured (try `ceph orch set backend`)"

],

"ceph_version": "17.2.6",

"crash_id": "2023-11-28T19:02:15.741903Z_cff323ca-e2d5-415c-b5e6-e518a4a2e892",

"entity_name": "mgr.a",

"mgr_module": "nfs",

"mgr_module_caller": "ActivePyModule::dispatch_remote cluster_ls",

"mgr_python_exception": "NoOrchestrator",

"os_id": "centos",

"os_name": "CentOS Stream",

"os_version": "8",

"os_version_id": "8",

"process_name": "ceph-mgr",

"stack_sig": "b01db59d356dd52f69bfb0b128a216e7606f54a60674c3c82711c23cf64832ce",

"timestamp": "2023-11-28T19:02:15.741903Z",

"utsname_hostname": "rook-ceph-mgr-a-767b9fc956-xm4ns",

"utsname_machine": "x86_64",

"utsname_release": "5.15.0-84-generic",

"utsname_sysname": "Linux",

"utsname_version": "#93-Ubuntu SMP Tue Sep 5 17:16:10 UTC 2023"

}

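The fix is to enable the mgr rook module (the nfs module crashed because no orchestrator backend was available).

Reference: https://github.com/rook/rook/issues/11316

# The rook module is not enabled by default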

root@k8s-master71u:~/rook/deploy/examples# ceph mgr module ls

root@k8s-master71u:~/rook/deploy/examples# ceph mgr module enable rook

root@k8s-master71u:~/rook/deploy/examples# ceph mgr module ls

MODULE

balancer on (always on)

crash on (always on)

devicehealth on (always on)

orchestrator on (always on)

pg_autoscaler on (always on)

progress on (always on)

rbd_support on (always on)

status on (always on)

telemetry on (always on)

volumes on (always on)

dashboard on

iostat on

nfs on

prometheus on

restful on

rook on

root@k8s-master71u:~/rook/deploy/examples# ceph crash archive 2023-11-28T19:02:15.741903Z_cff323ca-e2d5-415c-b5e6-e518a4a2e892

After this, the cluster health check reports healthy again.

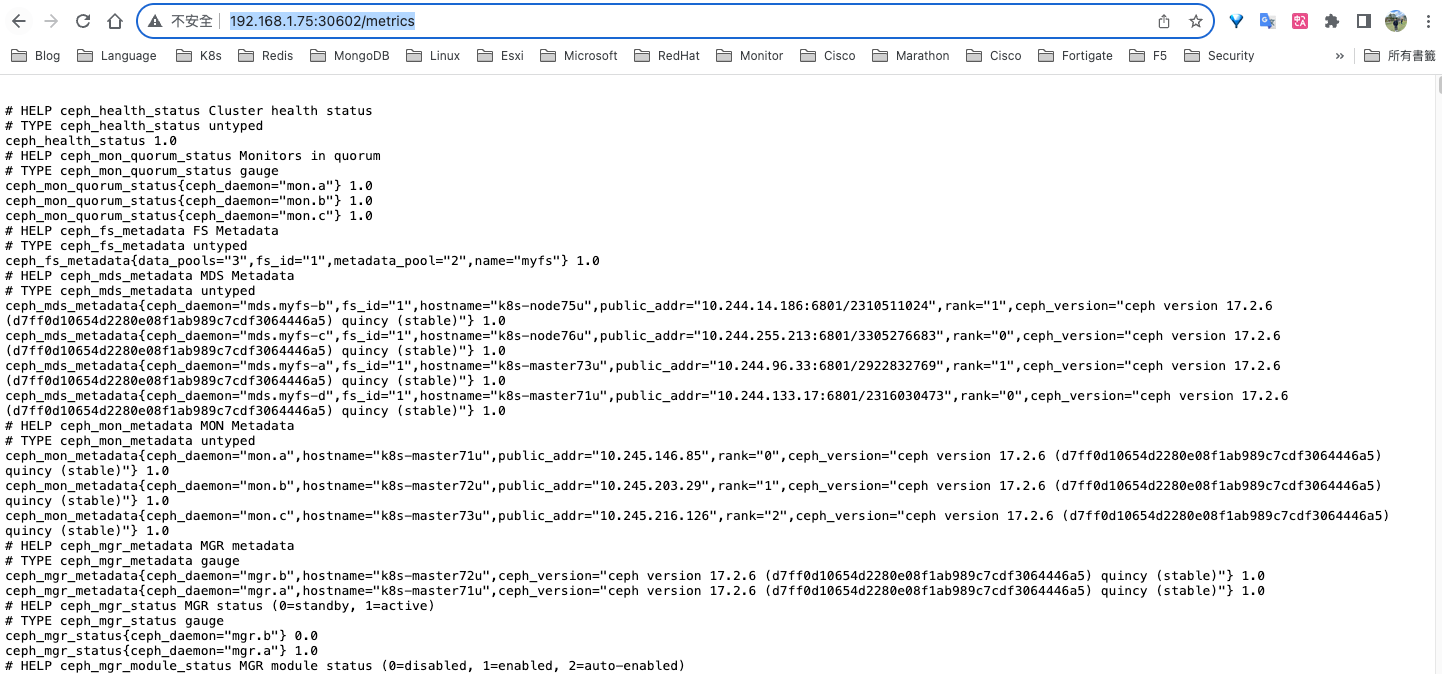
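The same fix as a repeatable snippet, using the host `ceph` CLI configured earlier. A sketch; instead of archiving one crash ID at a time it uses `ceph crash archive-all`, which archives every crash report still marked new, so review `ceph crash ls-new` first if you want to keep unrelated reports visible.

```bash
#!/usr/bin/env bash
# Sketch: enable the mgr rook module, then clear the "recently crashed" warning.
fix_mgr_crash_warning() {
  ceph mgr module enable rook || return 1
  # Show what is about to be archived (empty output means nothing new).
  ceph crash ls-new || return 1
  ceph crash archive-all
}
```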

Viewing the metrics exposed by the mgr

# Change the Service type to NodePort

root@k8s-master71u:~/rook/deploy/examples/monitoring# kubectl edit services rook-ceph-mgr -n rook-ceph

type: NodePort

root@k8s-master71u:~/rook/deploy/examples/monitoring# kubectl get svc -n rook-ceph

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

rook-ceph-mgr NodePort 10.245.33.129 <none> 9283:30602/TCP 48m

# The metrics are now visible

root@k8s-master71u:~/rook/deploy/examples/monitoring# curl http://10.245.33.129:9283/metrics

# HELP ceph_purge_queue_pq_item_in_journal Purge item left in journal

# TYPE ceph_purge_queue_pq_item_in_journal gauge

ceph_purge_queue_pq_item_in_journal{ceph_daemon="mds.myfs-d"} 0.0

ceph_purge_queue_pq_item_in_journal{ceph_daemon="mds.myfs-a"} 0.0

ceph_purge_queue_pq_item_in_journal{ceph_daemon="mds.myfs-b"} 0.0

ceph_purge_queue_pq_item_in_journal{ceph_daemon="mds.myfs-c"} 0.0

http://192.168.1.75:30602/metrics

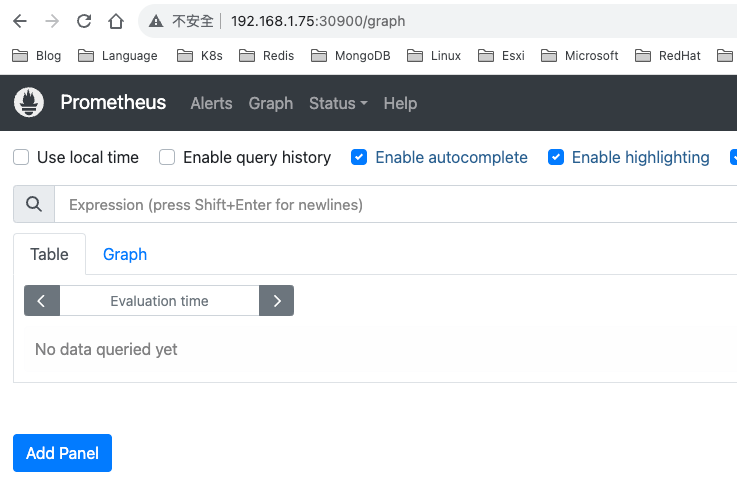
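For a quick check without Prometheus, the text-format /metrics page can be filtered with awk. A sketch of a hypothetical `prom_sum` helper that sums one metric across all label sets; it skips `#` comment lines and assumes each sample line ends with its value (no trailing timestamp).

```bash
#!/usr/bin/env bash
# Sketch: sum a metric over all its label sets in Prometheus text exposition format.
prom_sum() {
  awk -v m="$1" '
    /^#/ { next }                       # skip # HELP / # TYPE lines
    {
      name = $1
      sub(/\{.*/, "", name)             # strip the {label="..."} part, if any
      if (name == m) total += $NF       # assumes the value is the last field
    }
    END { print total + 0 }
  '
}

# Usage:
#   curl -s http://10.245.33.129:9283/metrics | prom_sum ceph_purge_queue_pq_item_in_journal
```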

Installing Prometheus to collect the metrics

Install prometheus-operator

root@k8s-master71u:~/rook/deploy/examples/monitoring# wget https://raw.githubusercontent.com/coreos/prometheus-operator/v0.70.0/bundle.yaml

root@k8s-master71u:~/rook/deploy/examples/monitoring# kubectl create -f bundle.yaml

root@k8s-master71u:~/rook/deploy/examples/monitoring# kubectl get pod

NAME READY STATUS RESTARTS AGE

prometheus-operator-754cf6c978-wgn4w 1/1 Running 0 23s

prometheus-operator-847b56864c-6gp95 1/1 Running 0 5m55s

Install the Prometheus server

root@k8s-master71u:~/rook/deploy/examples/monitoring# kubectl create -f prometheus.yaml

root@k8s-master71u:~/rook/deploy/examples/monitoring# kubectl create -f prometheus-service.yaml

root@k8s-master71u:~/rook/deploy/examples/monitoring# kubectl create -f service-monitor.yaml

root@k8s-master71u:~/rook/deploy/examples/monitoring# kubectl get pod -n rook-ceph

NAME READY STATUS RESTARTS AGE

prometheus-rook-prometheus-0 2/2 Running 0 63s

root@k8s-master71u:~/rook/deploy/examples/monitoring# kubectl get services -n rook-ceph

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

prometheus-operated ClusterIP None <none> 9090/TCP 88s

rook-ceph-mgr NodePort 10.245.33.129 <none> 9283:30602/TCP 53m

rook-ceph-mgr-dashboard ClusterIP 10.245.17.131 <none> 7000/TCP 53m

rook-ceph-mgr-dashboard-external-https NodePort 10.245.106.246 <none> 8443:30901/TCP 17m

rook-ceph-mon-a ClusterIP 10.245.146.85 <none> 6789/TCP,3300/TCP 60m

rook-ceph-mon-b ClusterIP 10.245.203.29 <none> 6789/TCP,3300/TCP 54m

rook-ceph-mon-c ClusterIP 10.245.216.126 <none> 6789/TCP,3300/TCP 54m

rook-prometheus NodePort 10.245.174.189 <none> 9090:30900/TCP 77s

http://192.168.1.75:30900/graph
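Besides the /graph UI, Prometheus answers PromQL queries over its HTTP API at /api/v1/query. A sketch; `PROM_URL` defaults to the NodePort shown above, and `ceph_health_status` is used as an example metric that the mgr prometheus module is assumed to export.

```bash
#!/usr/bin/env bash
# Sketch: run an instant PromQL query against the rook-prometheus NodePort.
PROM_URL="${PROM_URL:-http://192.168.1.75:30900}"

prom_query() {
  # -G sends the --data-urlencode value as a URL-encoded query string on a GET request.
  curl -sG "$PROM_URL/api/v1/query" --data-urlencode "query=$1"
}

# Usage:  prom_query 'ceph_health_status'
```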

Installing Grafana for dashboards

root@k8s-master71u:~/rook/deploy/examples/monitoring# vim grafana-all.yaml
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: grafana
  namespace: rook-ceph
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: rook-cephfs
  resources:
    requests:
      storage: 100Gi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: grafana
  namespace: rook-ceph
spec:
  selector:
    matchLabels:
      app: grafana
  template:
    metadata:
      labels:
        app: grafana
    spec:
      volumes:
        - name: storage
          persistentVolumeClaim:
            claimName: grafana
      securityContext:
        runAsUser: 0
      containers:
        - name: grafana
          image: grafana/grafana:9.5.14
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 3000
              name: grafana
          env:
            - name: GF_SECURITY_ADMIN_USER
              value: admin
            - name: GF_SECURITY_ADMIN_PASSWORD
              value: admin
          readinessProbe:
            failureThreshold: 10
            httpGet:
              path: /api/health
              port: 3000
              scheme: HTTP
            initialDelaySeconds: 60
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 30
          livenessProbe:
            failureThreshold: 3
            httpGet:
              path: /api/health
              port: 3000
              scheme: HTTP
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          resources:
            limits:
              cpu: 150m
              memory: 512Mi
            requests:
              cpu: 150m
              memory: 512Mi
          volumeMounts:
            - mountPath: /var/lib/grafana
              name: storage
---
apiVersion: v1
kind: Service
metadata:
  name: grafana
  namespace: rook-ceph
spec:
  type: NodePort
  ports:
    - port: 3000
  selector:
    app: grafana
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: grafana
  namespace: rook-ceph
spec:
  ingressClassName: nginx
  rules:
    - host: grafana.jimmyhome.tw
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: grafana
                port:
                  number: 3000

root@k8s-master71u:~/rook/deploy/examples/monitoring# kubectl create -f grafana-all.yaml

root@k8s-master71u:~/rook/deploy/examples/monitoring# kubectl get pod -n rook-ceph

NAME READY STATUS RESTARTS AGE

grafana-654886fbff-wzx9n 0/1 Running 0 42s

root@k8s-master71u:~/rook/deploy/examples/monitoring# kubectl get svc,pvc -n rook-ceph

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/grafana NodePort 10.245.92.95 <none> 3000:31348/TCP 68s

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/grafana Bound pvc-75e0cc6e-5130-4ab6-b447-0d19d95880f3 200Gi RWO rook-cephfs 69s

http://192.168.1.75:31348/login

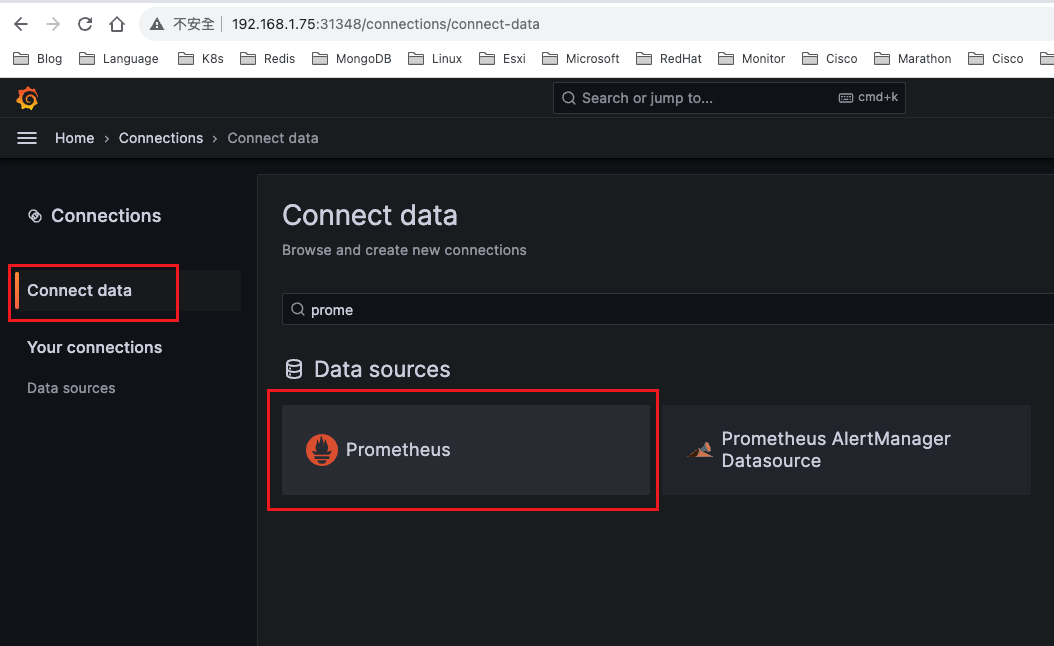

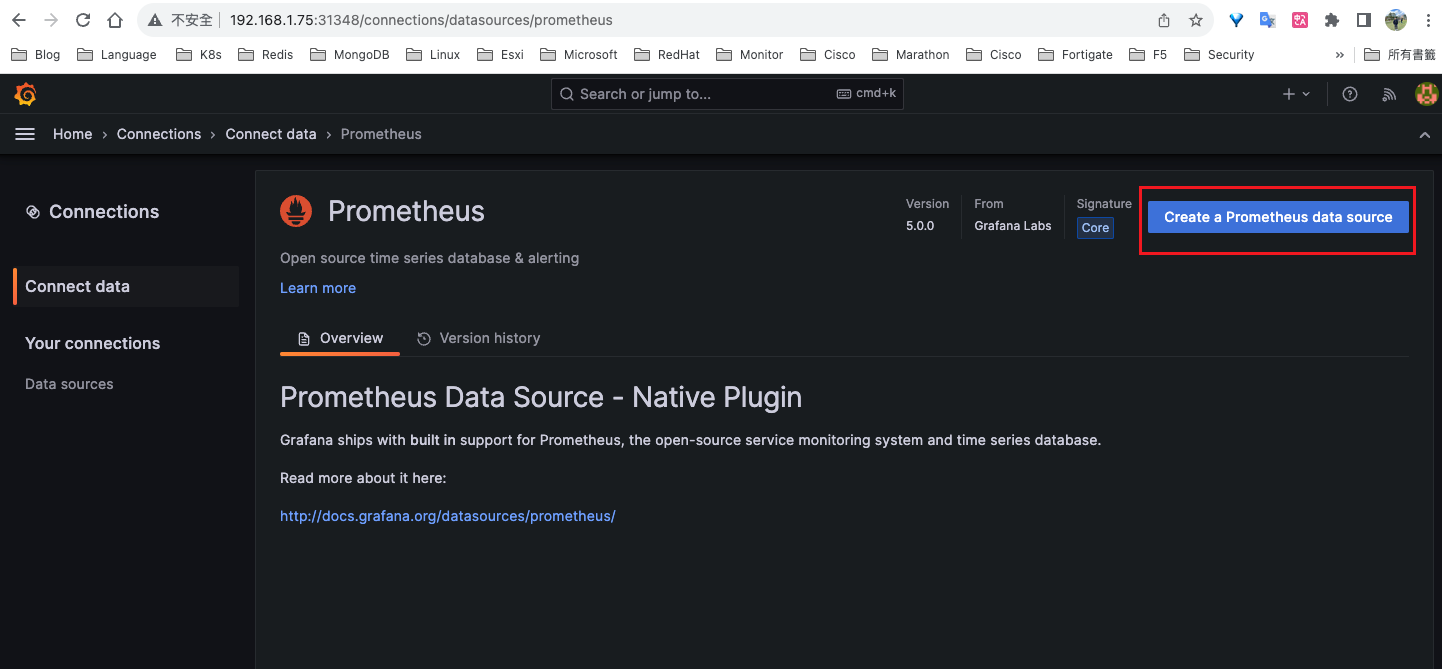

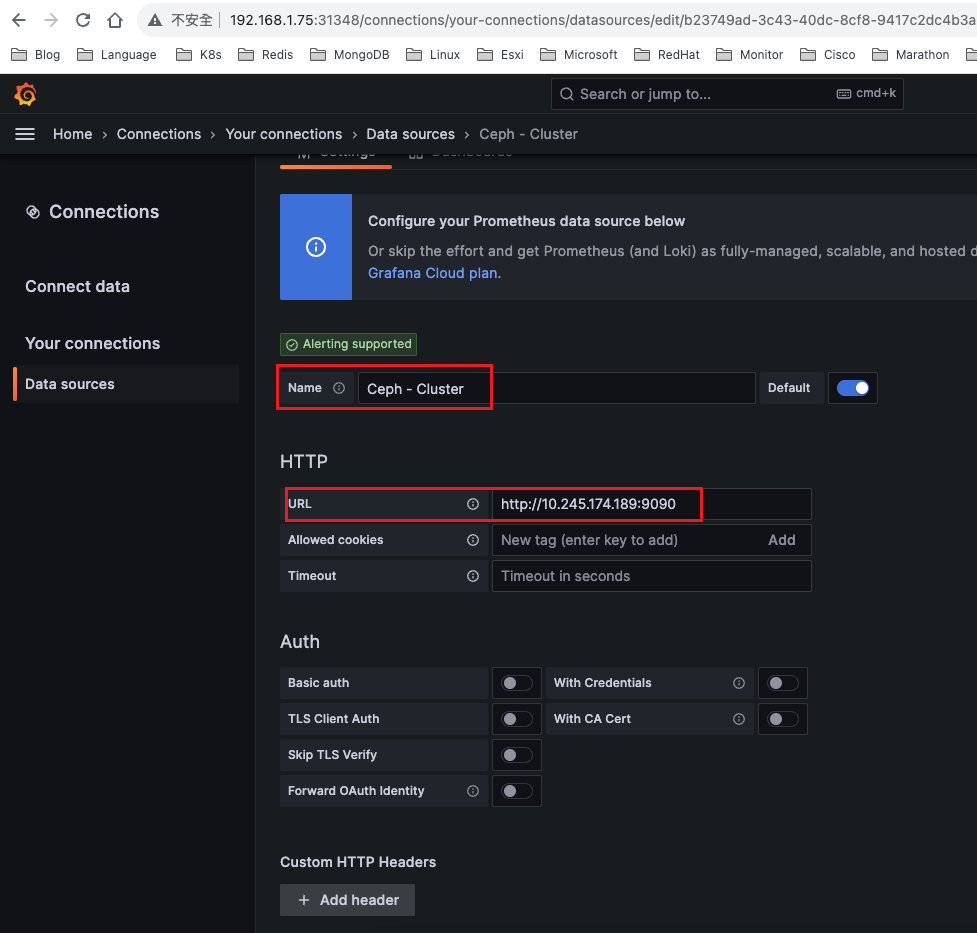

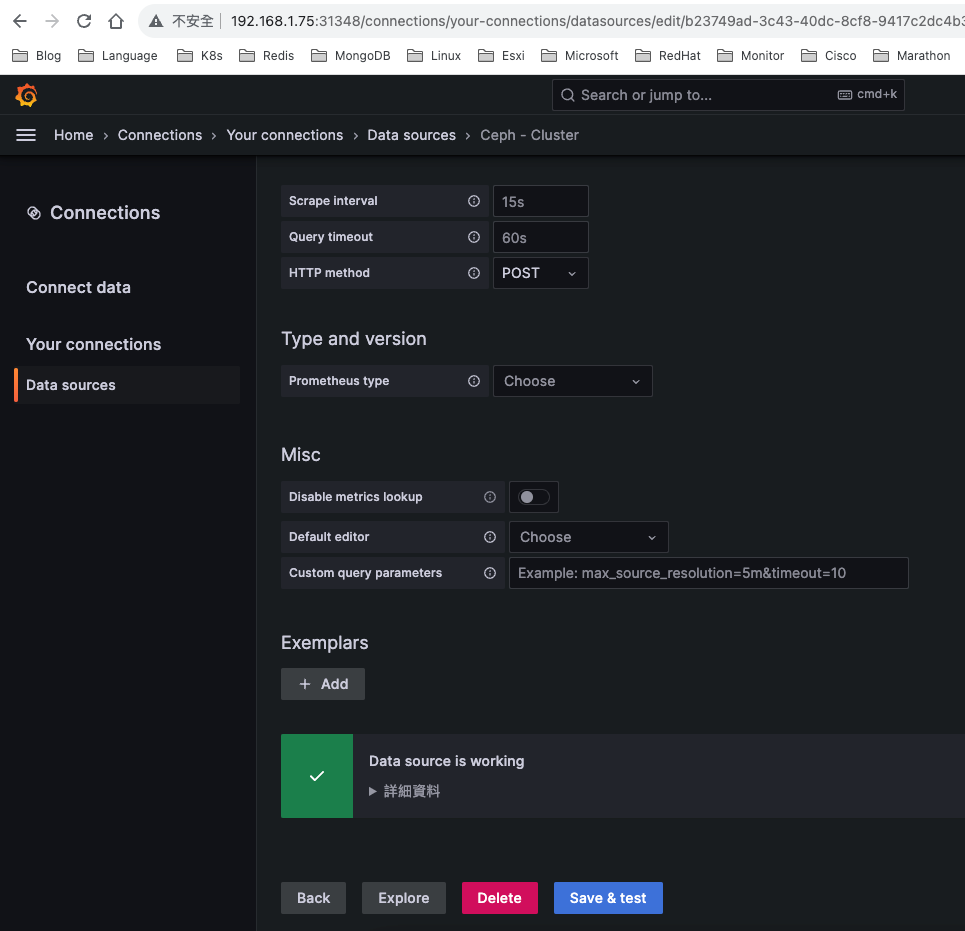

Enter the Prometheus Service IP as the data source URL

The connection succeeds

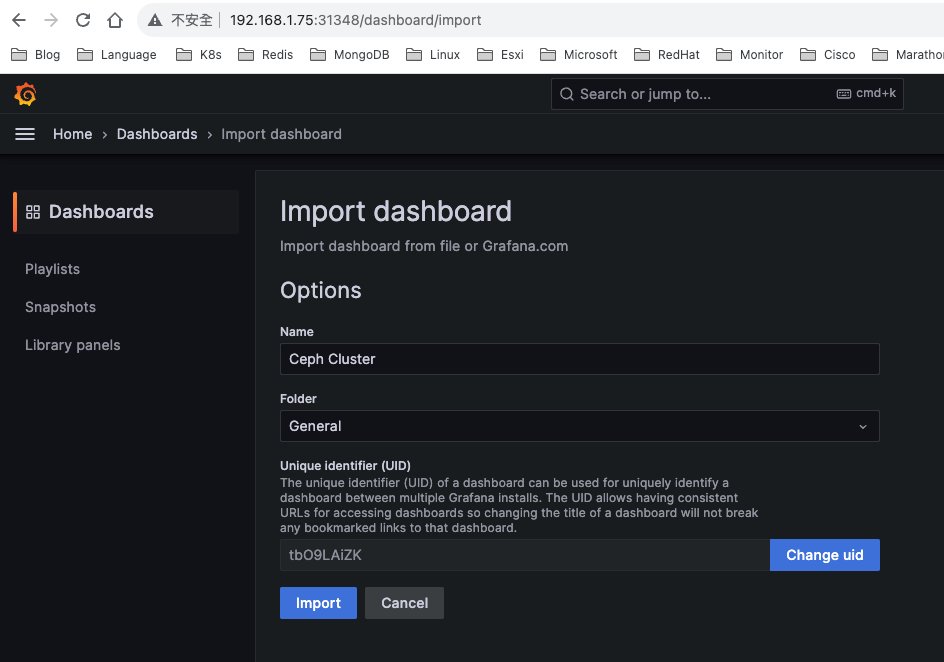

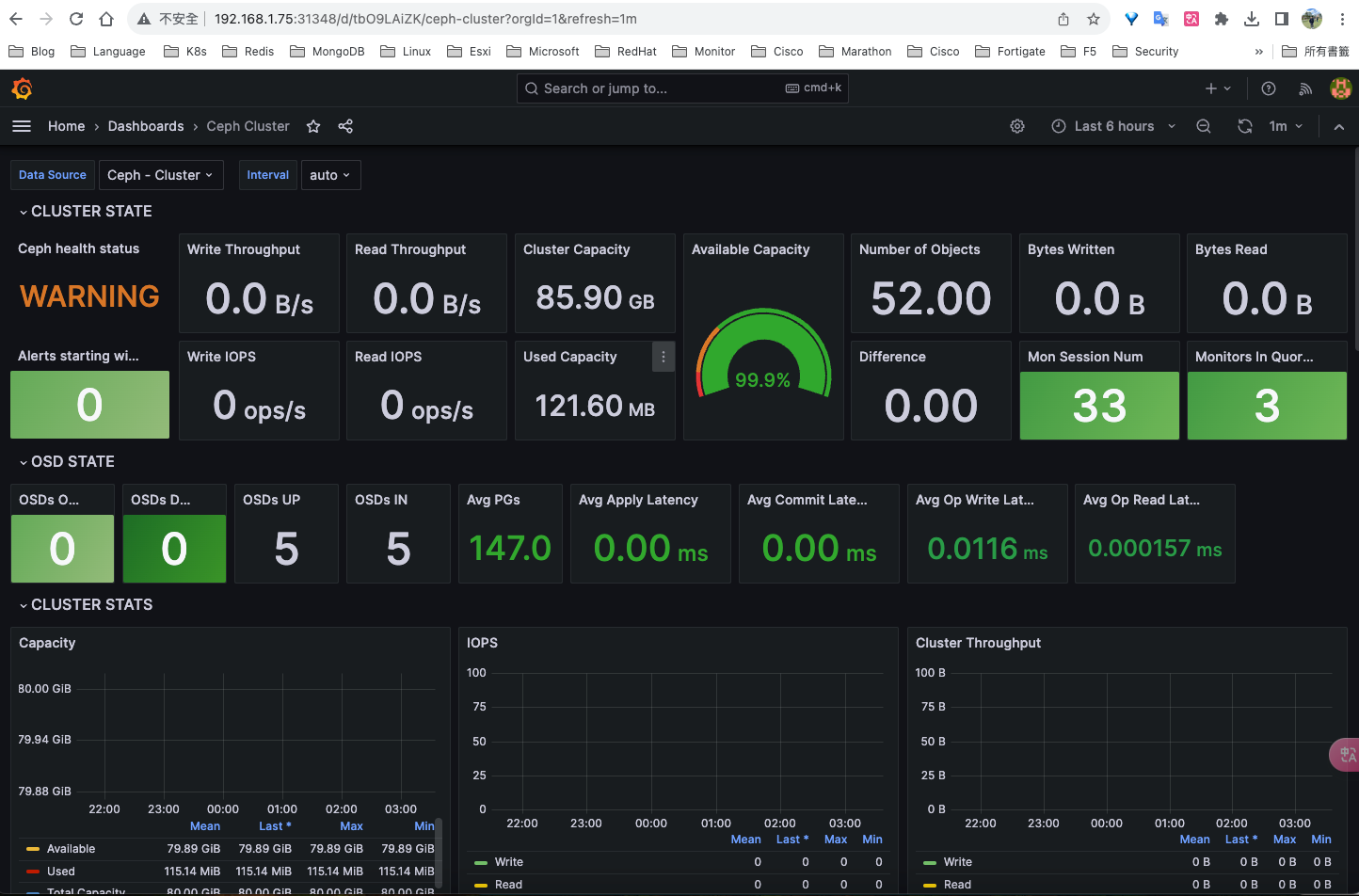

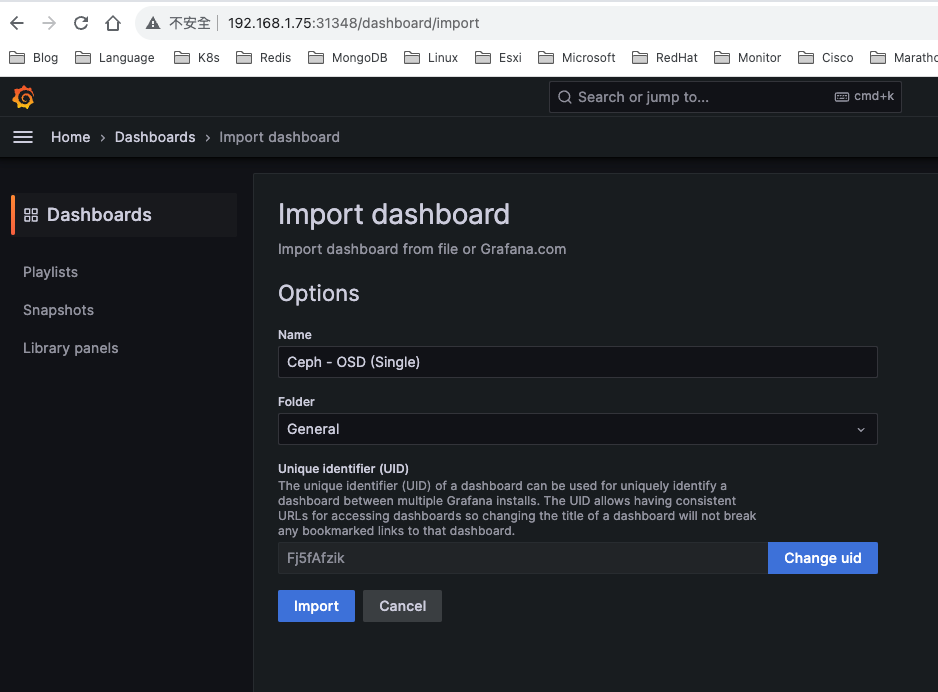

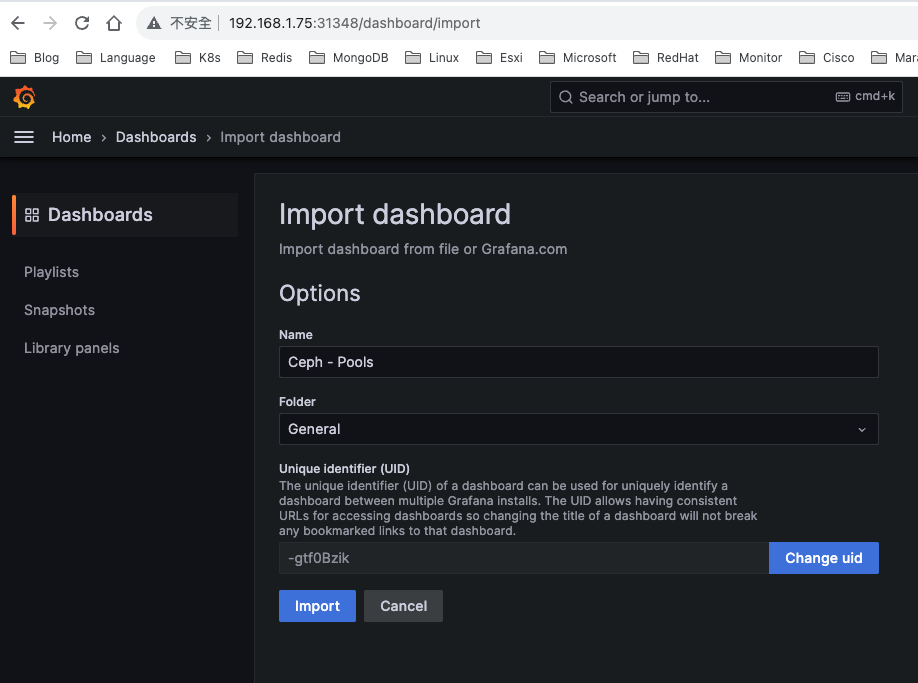
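The data source can also be registered through Grafana's HTTP API (POST /api/datasources) instead of the UI. A sketch under these assumptions: the admin/admin credentials from grafana-all.yaml, the Grafana NodePort 31348 shown earlier, and Prometheus reached via the in-cluster DNS name of the rook-prometheus Service (port 9090) rather than its IP.

```bash
#!/usr/bin/env bash
# Sketch: create a Prometheus data source in Grafana via its HTTP API.
GRAFANA_URL="${GRAFANA_URL:-http://192.168.1.75:31348}"
PROM_DS_URL="${PROM_DS_URL:-http://rook-prometheus.rook-ceph.svc:9090}"

add_prometheus_datasource() {
  curl -s -u admin:admin -H 'Content-Type: application/json' \
    -X POST "$GRAFANA_URL/api/datasources" \
    -d "{\"name\":\"Prometheus\",\"type\":\"prometheus\",\"url\":\"$PROM_DS_URL\",\"access\":\"proxy\"}"
}
```

With `access` set to `proxy`, Grafana's backend (running inside the cluster) makes the requests, which is why an in-cluster Service name works here.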

Importing dashboards

https://grafana.com/grafana/dashboards/2842-ceph-cluster/

https://grafana.com/grafana/dashboards/5336-ceph-osd-single/

https://grafana.com/grafana/dashboards/5342-ceph-pools/