Table of Contents

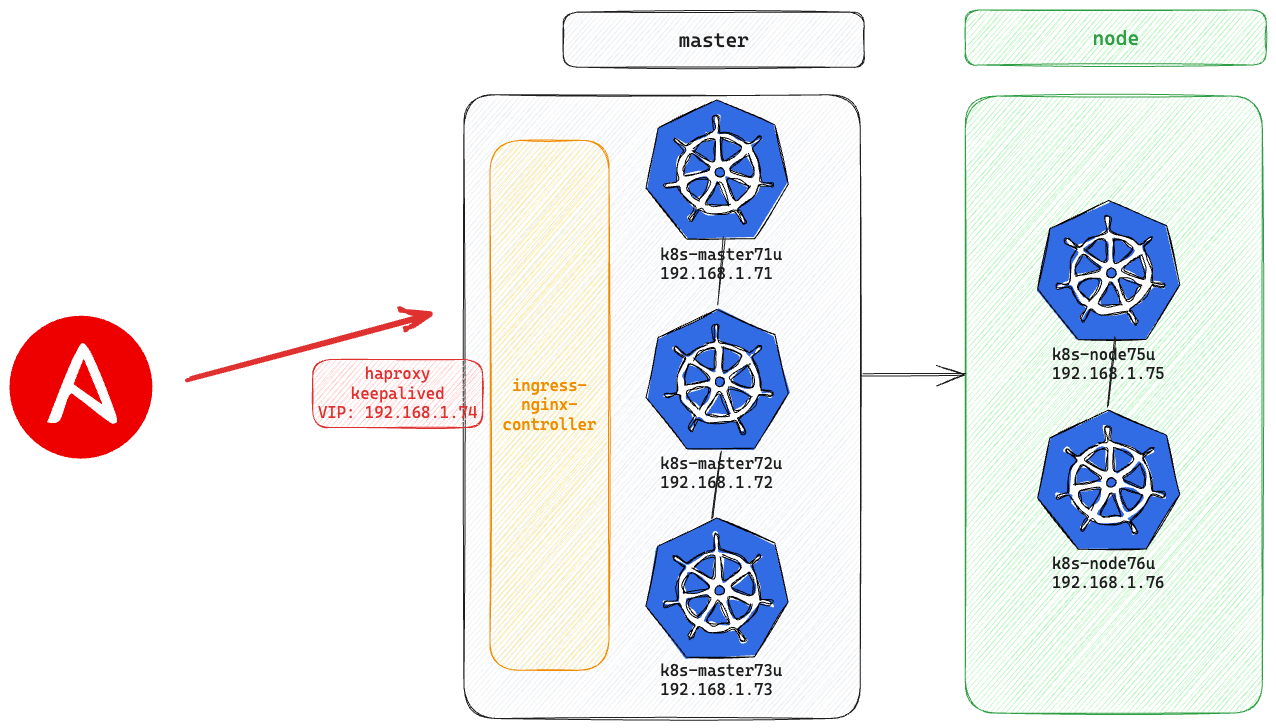

Architecture Diagram

Automated Cluster Deployment

Preparation

https://kubespray.io/#/docs/ansible?id=installing-ansible

Create a Python 3 virtual environment and install the dependencies

[root@ansible ~]# python3 --version

Python 3.9.18

[root@ansible ~]# git clone https://github.com/kubernetes-sigs/kubespray.git

[root@ansible ~]# VENVDIR=kubespray-venv

[root@ansible ~]# KUBESPRAYDIR=kubespray

[root@ansible ~]# python3 -m venv $VENVDIR

[root@ansible ~]# source $VENVDIR/bin/activate

(kubespray-venv) [root@ansible ~]# cd $KUBESPRAYDIR

(kubespray-venv) [root@ansible kubespray]#

(kubespray-venv) [root@ansible kubespray]# pip install -U -r requirements.txt

Collecting ansible==8.5.0

Downloading ansible-8.5.0-py3-none-any.whl (47.5 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 47.5/47.5 MB 4.0 MB/s eta 0:00:00

Collecting cryptography==41.0.4

Downloading cryptography-41.0.4-cp37-abi3-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (4.4 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 4.4/4.4 MB 9.2 MB/s eta 0:00:00

Collecting jinja2==3.1.2

Downloading Jinja2-3.1.2-py3-none-any.whl (133 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 133.1/133.1 kB 9.1 MB/s eta 0:00:00

Collecting jmespath==1.0.1

Downloading jmespath-1.0.1-py3-none-any.whl (20 kB)

Collecting MarkupSafe==2.1.3

Downloading MarkupSafe-2.1.3-cp39-cp39-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (25 kB)

Collecting netaddr==0.9.0

Downloading netaddr-0.9.0-py3-none-any.whl (2.2 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 2.2/2.2 MB 9.2 MB/s eta 0:00:00

Collecting pbr==5.11.1

Downloading pbr-5.11.1-py2.py3-none-any.whl (112 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 112.7/112.7 kB 8.2 MB/s eta 0:00:00

Collecting ruamel.yaml==0.17.35

Downloading ruamel.yaml-0.17.35-py3-none-any.whl (112 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 112.9/112.9 kB 9.4 MB/s eta 0:00:00

Collecting ruamel.yaml.clib==0.2.8

Downloading ruamel.yaml.clib-0.2.8-cp39-cp39-manylinux_2_5_x86_64.manylinux1_x86_64.whl (562 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 562.1/562.1 kB 10.4 MB/s eta 0:00:00

Collecting ansible-core~=2.15.5

Downloading ansible_core-2.15.6-py3-none-any.whl (2.2 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 2.2/2.2 MB 9.3 MB/s eta 0:00:00

Collecting cffi>=1.12

Downloading cffi-1.16.0-cp39-cp39-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (443 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 443.4/443.4 kB 9.7 MB/s eta 0:00:00

Collecting importlib-resources<5.1,>=5.0

Downloading importlib_resources-5.0.7-py3-none-any.whl (24 kB)

Collecting PyYAML>=5.1

Downloading PyYAML-6.0.1-cp39-cp39-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (738 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 738.9/738.9 kB 8.6 MB/s eta 0:00:00

Collecting packaging

Downloading packaging-23.2-py3-none-any.whl (53 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 53.0/53.0 kB 7.5 MB/s eta 0:00:00

Collecting resolvelib<1.1.0,>=0.5.3

Downloading resolvelib-1.0.1-py2.py3-none-any.whl (17 kB)

Collecting pycparser

Downloading pycparser-2.21-py2.py3-none-any.whl (118 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 118.7/118.7 kB 8.6 MB/s eta 0:00:00

Installing collected packages: resolvelib, netaddr, ruamel.yaml.clib, PyYAML, pycparser, pbr, packaging, MarkupSafe, jmespath, importlib-resources, ruamel.yaml, jinja2, cffi, cryptography, ansible-core, ansible

Successfully installed MarkupSafe-2.1.3 PyYAML-6.0.1 ansible-8.5.0 ansible-core-2.15.6 cffi-1.16.0 cryptography-41.0.4 importlib-resources-5.0.7 jinja2-3.1.2 jmespath-1.0.1 netaddr-0.9.0 packaging-23.2 pbr-5.11.1 pycparser-2.21 resolvelib-1.0.1 ruamel.yaml-0.17.35 ruamel.yaml.clib-0.2.8

[notice] A new release of pip is available: 23.0.1 -> 23.3.1

[notice] To update, run: pip install --upgrade pip

(kubespray-venv) [root@ansible kubespray]#
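The pip output above shows that this Kubespray checkout pins ansible-core to the 2.15 series (`ansible-core~=2.15.5`). As a minimal sketch, the helper below compares an installed version against a minimum. The function name `version_ge` is hypothetical, and it assumes a `sort` that supports `-V` (GNU coreutils does).

```shell
# Return 0 when version $1 is greater than or equal to version $2.
version_ge() {
    # sort -V orders version strings; the smaller of the two comes first
    lowest=$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)
    [ "$lowest" = "$2" ]
}

# Example (run inside the activated venv):
#   installed=$(pip show ansible-core | awk '/^Version:/ {print $2}')
#   version_ge "$installed" 2.15.5 && echo "ansible-core is new enough"
```

This checks only the lower bound; pip itself enforces the full `~=` constraint at install time.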

Generate hosts.yaml

https://kubespray.io/#/?id=deploy-a-production-ready-kubernetes-cluster

(kubespray-venv) [root@ansible kubespray]# cp -rfp inventory/sample inventory/mycluster

(kubespray-venv) [root@ansible kubespray]# declare -a IPS=(192.168.1.71 192.168.1.72 192.168.1.73 192.168.1.75 192.168.1.76)

(kubespray-venv) [root@ansible kubespray]# CONFIG_FILE=inventory/mycluster/hosts.yaml python3 contrib/inventory_builder/inventory.py ${IPS[@]}

DEBUG: Adding group all

DEBUG: Adding group kube_control_plane

DEBUG: Adding group kube_node

DEBUG: Adding group etcd

DEBUG: Adding group k8s_cluster

DEBUG: Adding group calico_rr

DEBUG: adding host node1 to group all

DEBUG: adding host node2 to group all

DEBUG: adding host node3 to group all

DEBUG: adding host node4 to group all

DEBUG: adding host node5 to group all

DEBUG: adding host node1 to group etcd

DEBUG: adding host node2 to group etcd

DEBUG: adding host node3 to group etcd

DEBUG: adding host node1 to group kube_control_plane

DEBUG: adding host node2 to group kube_control_plane

DEBUG: adding host node1 to group kube_node

DEBUG: adding host node2 to group kube_node

DEBUG: adding host node3 to group kube_node

DEBUG: adding host node4 to group kube_node

DEBUG: adding host node5 to group kube_node
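The DEBUG output above shows the inventory builder naming the hosts node1 through node5. The sketch below imitates only that naming step, assuming names are assigned in the order the IPs are passed; it does not reproduce the group assignment. The function name `name_nodes` is hypothetical.

```shell
# Print "nodeN <ip>" for each IP argument, numbered in the order given.
name_nodes() {
    i=1
    for ip in "$@"; do
        printf 'node%d %s\n' "$i" "$ip"
        i=$((i + 1))
    done
}

name_nodes 192.168.1.71 192.168.1.72 192.168.1.73 192.168.1.75 192.168.1.76
```

Because the generated names are generic, this guide later replaces them in hosts.yaml with the real host names.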

Edit all.yml

The main changes are:

Set the VIP and domain_name

Configure a proxy if your environment needs one

(kubespray-venv) [root@ansible kubespray]# vim inventory/mycluster/group_vars/all/all.yml

apiserver_loadbalancer_domain_name: "k8s-apiserver-lb.jimmyhome.tw"

loadbalancer_apiserver:

  address: 192.168.1.74

  port: 7443

## Set these proxy values in order to update package manager and docker daemon to use proxies and custom CA for https_proxy if needed

# http_proxy: "http://x.x.x.x:3128"

# https_proxy: "http://x.x.x.x:3128"

# https_proxy_cert_file: ""

## Refer to roles/kubespray-defaults/defaults/main.yml before modifying no_proxy

# no_proxy: "127.0.0.0/8,10.0.0.0/8,172.16.0.0/12,192.168.0.0/16,cattle-system.svc,.svc,.cluster.local,k8s-apiserver-lb.jimmyhome.tw"
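The API server domain name configured above has to resolve to the VIP (192.168.1.74) on the hosts that use it. As a rough sketch, the helper below looks a name up in a hosts-format file; the function name `hosts_lookup` is hypothetical, and it reads only the file you pass, not DNS.

```shell
# Print the IP address mapped to a host name in a hosts-format file.
# $1 = path to the file, $2 = host name to look for
hosts_lookup() {
    awk -v name="$2" '
        /^[[:space:]]*#/ { next }    # skip comment lines
        {
            for (i = 2; i <= NF; i++) {
                if ($i == name) { print $1; exit }
            }
        }
    ' "$1"
}

# Example:
#   [ "$(hosts_lookup /etc/hosts k8s-apiserver-lb.jimmyhome.tw)" = "192.168.1.74" ] \
#       && echo "domain name maps to the VIP"
```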

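Edit k8s-cluster.yml

The main changes are:

Kubernetes version to install

Service IP range

Pod IP range

network_node_prefix

Container runtime (I use docker here)

Whether to renew certificates automatically (on the first Monday of each month)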

(kubespray-venv) [root@ansible kubespray]# vim inventory/mycluster/group_vars/k8s_cluster/k8s-cluster.yml

## Change this to use another Kubernetes version, e.g. a current beta release

kube_version: v1.26.3

# Kubernetes internal network for services, unused block of space.

kube_service_addresses: 10.202.0.0/16

# internal network. When used, it will assign IP

# addresses from this range to individual pods.

# This network must be unused in your network infrastructure!

kube_pods_subnet: 10.201.0.0/16

kube_network_node_prefix: 16

## Container runtime

## docker for docker, crio for cri-o and containerd for containerd.

## Default: containerd

container_manager: docker

## Automatically renew K8S control plane certificates on first Monday of each month

auto_renew_certificates: true
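The comments in the file stress that the Service and Pod ranges must be unused elsewhere in your network. The sketch below tests two IPv4 CIDR blocks for overlap in plain POSIX shell arithmetic. The function names are hypothetical, and 192.168.1.0/24 is an assumed node network, since the source shows node addresses but not the netmask.

```shell
# Convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1            # split the address into four octets
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Return 0 when two CIDR blocks share at least one address.
cidr_overlap() {
    a_ip=$(ip_to_int "${1%/*}"); a_len=${1#*/}
    b_ip=$(ip_to_int "${2%/*}"); b_len=${2#*/}
    # Compare both network addresses under the shorter prefix
    if [ "$a_len" -lt "$b_len" ]; then len=$a_len; else len=$b_len; fi
    mask=$(( (4294967295 << (32 - len)) & 4294967295 ))
    [ $(( a_ip & mask )) -eq $(( b_ip & mask )) ]
}

# Pod range vs. Service range, then Pod range vs. the assumed node network
for other in 10.202.0.0/16 192.168.1.0/24; do
    if cidr_overlap 10.201.0.0/16 "$other"; then
        echo "10.201.0.0/16 overlaps $other"
    else
        echo "10.201.0.0/16 does not overlap $other"
    fi
done
```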

Edit addons.yml

The main changes are:

Install the Nginx ingress controller

Install Helm

Install metrics_server

(kubespray-venv) [root@ansible kubespray]# vim inventory/mycluster/group_vars/k8s_cluster/addons.yml

# Nginx ingress controller deployment

ingress_nginx_enabled: true

ingress_nginx_host_network: true

ingress_publish_status_address: ""

# ingress_nginx_nodeselector:

#   kubernetes.io/os: "linux"

ingress_nginx_tolerations:

  - key: "node-role.kubernetes.io/control-plane"

    operator: "Equal"

    value: ""

    effect: "NoSchedule"

# Helm deployment

helm_enabled: true

# Metrics Server deployment

metrics_server_enabled: true

Edit main.yml

The main changes are:

Version of the Calico network plugin

(kubespray-venv) [root@ansible kubespray]# vim roles/kubespray-defaults/vars/main.yml

calico_min_version_required: "v3.26.0"

# Where to change the cri-dockerd version. Change it only if needed; this guide keeps the version shown.

(kubespray-venv) [root@ansible kubespray]# vim roles/download/defaults/main/main.yml

cri_dockerd_version: 0.3.4

# Where to change the docker version. Change it only if needed; this guide keeps the version shown.

[root@ansible kubespray]# vim roles/container-engine/docker/defaults/main.yml

docker_version: '20.10'

Edit hosts.yaml

The main changes are:

Fill in the values to match your environment

kube_control_plane -> master nodes (they receive a NoSchedule taint)

kube_node -> worker nodes

etcd -> etcd database nodes

(kubespray-venv) [root@ansible kubespray]# cat inventory/mycluster/hosts.yaml

all:

  hosts:

    k8s-master71u:

      ansible_host: k8s-master71u

      ip: 192.168.1.71

      access_ip: 192.168.1.71

    k8s-master72u:

      ansible_host: k8s-master72u

      ip: 192.168.1.72

      access_ip: 192.168.1.72

    k8s-master73u:

      ansible_host: k8s-master73u

      ip: 192.168.1.73

      access_ip: 192.168.1.73

    k8s-node75u:

      ansible_host: k8s-node75u

      ip: 192.168.1.75

      access_ip: 192.168.1.75

    k8s-node76u:

      ansible_host: k8s-node76u

      ip: 192.168.1.76

      access_ip: 192.168.1.76

  children:

    kube_control_plane:

      hosts:

        k8s-master71u:

        k8s-master72u:

        k8s-master73u:

    kube_node:

      hosts:

        k8s-node75u:

        k8s-node76u:

    etcd:

      hosts:

        k8s-master71u:

        k8s-master72u:

        k8s-master73u:

    k8s_cluster:

      children:

        kube_control_plane:

        kube_node:

    calico_rr:

      hosts: {}

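Install and configure haproxy and keepalived

Run on: all master nodes

Note: if two clusters share the same network segment, the virtual_router_id in /etc/keepalived/keepalived.conf (64 in the configuration below) must differ between them; otherwise errors will keep appearing in /var/log/messages.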

root@k8s-master71u:~# apt-get install keepalived haproxy -y

# Run on all master nodes; replace the master node IP addresses at the end of the file with your own

root@k8s-master71u:~# cat /etc/haproxy/haproxy.cfg

global

    maxconn 2000

    ulimit-n 16384

    log 127.0.0.1 local0 err

    stats timeout 30s

defaults

    log global

    mode http

    option httplog

    timeout connect 5000

    timeout client 50000

    timeout server 50000

    timeout http-request 15s

    timeout http-keep-alive 15s

frontend monitor-in

    bind *:33305

    mode http

    option httplog

    monitor-uri /monitor

frontend k8s-master

    bind 0.0.0.0:7443

    bind 127.0.0.1:7443

    mode tcp

    option tcplog

    tcp-request inspect-delay 5s

    default_backend k8s-master

backend k8s-master

    mode tcp

    option tcplog

    option tcp-check

    balance roundrobin

    default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100

    server k8s-master71u 192.168.1.71:6443 check

    server k8s-master72u 192.168.1.72:6443 check

    server k8s-master73u 192.168.1.73:6443 check

# On k8s-master71u: set mcast_src_ip to this master's actual IP address, virtual_ipaddress to the load-balancer VIP, and interface to the network interface that carries the host IP

root@k8s-master71u:~# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

    router_id LVS_DEVEL

    script_user root

    enable_script_security

}

vrrp_script chk_apiserver {

    script "/etc/keepalived/check_apiserver.sh"

    interval 5

    weight -5

    fall 2

    rise 1

}

vrrp_instance VI_1 {

    state MASTER

    interface ens160

    mcast_src_ip 192.168.1.71

    virtual_router_id 64

    priority 101

    advert_int 2

    authentication {

        auth_type PASS

        auth_pass K8SHA_KA_AUTH

    }

    virtual_ipaddress {

        192.168.1.74

    }

    track_script {

        chk_apiserver

    }

}

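# Create /etc/keepalived/keepalived.conf on k8s-master72u and k8s-master73u as well. Change mcast_src_ip to each node's own IP address; virtual_ipaddress must be the same VIP (192.168.1.74) on every node. The other nodes are commonly given state BACKUP and a lower priority.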
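As a sketch of what the per-node file could look like, the helper below prints the vrrp_instance block with the node-specific values filled in. The function name `render_vrrp_instance` is hypothetical, and the state BACKUP and priority 100 used in the example call are assumptions; the source does not show the values used on the other masters.

```shell
# Print a vrrp_instance block for one node.
# $1 = state, $2 = interface, $3 = this node's IP, $4 = priority
render_vrrp_instance() {
    cat <<EOF
vrrp_instance VI_1 {
    state $1
    interface $2
    mcast_src_ip $3
    virtual_router_id 64
    priority $4
    advert_int 2
    authentication {
        auth_type PASS
        auth_pass K8SHA_KA_AUTH
    }
    virtual_ipaddress {
        192.168.1.74
    }
    track_script {
        chk_apiserver
    }
}
EOF
}

# Assumed values for k8s-master72u (not taken from the source)
render_vrrp_instance BACKUP ens160 192.168.1.72 100
```

The global_defs and vrrp_script sections stay identical on every node, so only this block needs to be rendered per host.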

# Create the keepalived health-check script on all master nodes:

root@k8s-master71u:~# vim /etc/keepalived/check_apiserver.sh

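#!/bin/bash

# Look for a running haproxy process up to three times, one second apart.

err=0

for k in $(seq 1 3)

do

    check_code=$(pgrep haproxy)

    if [[ $check_code == "" ]]; then

        err=$((err + 1))

        sleep 1

        continue

    else

        err=0

        break

    fi

done

# If haproxy was never found, stop keepalived so the VIP can move to another node.

if [[ $err != "0" ]]; then

    echo "systemctl stop keepalived"

    /usr/bin/systemctl stop keepalived

    exit 1

else

    exit 0

fi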

# Start haproxy and keepalived (on all master nodes)

root@k8s-master71u:~# chmod +x /etc/keepalived/check_apiserver.sh

root@k8s-master71u:~# systemctl daemon-reload

root@k8s-master71u:~# systemctl enable --now haproxy

root@k8s-master71u:~# systemctl enable --now keepalived

root@k8s-master71u:~# systemctl restart haproxy

root@k8s-master71u:~# systemctl restart keepalived

root@k8s-master71u:~# systemctl status haproxy

root@k8s-master71u:~# systemctl status keepalived

# Test that the load-balancer VIP is reachable (originally tested from a worker node)

root@k8s-master71u:~# telnet 192.168.1.74 7443

Trying 192.168.1.74...

Connected to 192.168.1.74.

Escape character is '^]'.

Connection closed by foreign host.
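Where telnet is not installed, the same reachability test can be sketched with bash's built-in /dev/tcp redirection. The function name `port_open` is hypothetical, and the 3-second limit is an arbitrary choice; the sketch assumes `bash` and the coreutils `timeout` command are available.

```shell
# Return 0 if a TCP connection to host:port succeeds within 3 seconds.
# $1 = host, $2 = port
port_open() {
    # /dev/tcp/<host>/<port> is a bash feature, so run the probe under bash
    timeout 3 bash -c 'exec 3<>"/dev/tcp/$0/$1"' "$1" "$2" 2>/dev/null
}

# Example:
#   port_open 192.168.1.74 7443 && echo "VIP reachable" || echo "VIP not reachable"
```

A successful connection proves only that haproxy is listening on the VIP; until the cluster is deployed, the API server backends behind it are still down.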

Populate /etc/hosts on the deployment host

(kubespray-venv) [root@ansible kubespray]# vim /etc/hosts

192.168.1.71 k8s-master71u

192.168.1.72 k8s-master72u

192.168.1.73 k8s-master73u

192.168.1.75 k8s-node75u

192.168.1.76 k8s-node76u

Copy the SSH key to the hosts to be deployed

(kubespray-venv) [root@ansible kubespray]# ssh-copy-id k8s-master71u

(kubespray-venv) [root@ansible kubespray]# ssh-copy-id k8s-master72u

(kubespray-venv) [root@ansible kubespray]# ssh-copy-id k8s-master73u

(kubespray-venv) [root@ansible kubespray]# ssh-copy-id k8s-node75u

(kubespray-venv) [root@ansible kubespray]# ssh-copy-id k8s-node76u
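The five ssh-copy-id calls above can be collapsed into a loop. This is a small sketch; the function name `push_keys` and the `DRY_RUN` switch are hypothetical conveniences for previewing the commands.

```shell
# Copy the SSH public key to every host given as an argument.
# Set DRY_RUN=1 to print the commands instead of running them.
push_keys() {
    for host in "$@"; do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "ssh-copy-id $host"
        else
            ssh-copy-id "$host"
        fi
    done
}

# Example:
#   push_keys k8s-master71u k8s-master72u k8s-master73u k8s-node75u k8s-node76u
```

Afterwards, `ansible -i inventory/mycluster/hosts.yaml all -m ping` is a quick way to confirm that Ansible can reach every host in the inventory.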

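Start the deployment

If the run aborts with an error partway through, read the error message, fix the cause, and run the deployment again.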

(kubespray-venv) [root@ansible kubespray]# ansible-playbook -i inventory/mycluster/hosts.yaml --become --become-user=root cluster.yml

PLAY RECAP *****************************************************************************************************************************

k8s-master71u : ok=746 changed=145 unreachable=0 failed=0 skipped=1288 rescued=0 ignored=8

k8s-master72u : ok=643 changed=130 unreachable=0 failed=0 skipped=1138 rescued=0 ignored=3

k8s-master73u : ok=645 changed=131 unreachable=0 failed=0 skipped=1136 rescued=0 ignored=3

k8s-node75u : ok=467 changed=79 unreachable=0 failed=0 skipped=794 rescued=0 ignored=1

k8s-node76u : ok=467 changed=79 unreachable=0 failed=0 skipped=793 rescued=0 ignored=1

localhost : ok=3 changed=0 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

Sunday 03 December 2023 22:56:39 +0800 (0:00:01.997) 0:44:07.257 ****************

===============================================================================

download : Download_file | Download item -------------------------------------------------------------------------------------- 114.13s

container-engine/docker : Ensure docker packages are installed ----------------------------------------------------------------- 80.93s

download : Download_file | Download item --------------------------------------------------------------------------------------- 59.73s

download : Download_container | Download image if required --------------------------------------------------------------------- 50.88s

download : Download_container | Download image if required --------------------------------------------------------------------- 47.73s

kubernetes/preinstall : Preinstall | wait for the apiserver to be running ------------------------------------------------------ 46.30s

download : Download_file | Download item --------------------------------------------------------------------------------------- 44.36s

container-engine/crictl : Download_file | Download item ------------------------------------------------------------------------ 42.56s

kubernetes/preinstall : Install packages requirements -------------------------------------------------------------------------- 37.19s

kubernetes/control-plane : Joining control plane node to the cluster. ---------------------------------------------------------- 34.16s

container-engine/cri-dockerd : Download_file | Download item ------------------------------------------------------------------- 31.95s

download : Download_file | Download item --------------------------------------------------------------------------------------- 31.86s

download : Download_container | Download image if required --------------------------------------------------------------------- 30.03s

container-engine/validate-container-engine : Populate service facts ------------------------------------------------------------ 27.13s

kubernetes/control-plane : Kubeadm | Initialize first master ------------------------------------------------------------------- 26.53s

download : Download_container | Download image if required --------------------------------------------------------------------- 24.24s

download : Download_file | Download item --------------------------------------------------------------------------------------- 23.99s

download : Download_container | Download image if required --------------------------------------------------------------------- 23.34s

download : Download_container | Download image if required --------------------------------------------------------------------- 21.88s

kubernetes/kubeadm : Join to cluster ------------------------------------------------------------------------------------------- 21.07s
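A run counts as clean when every host in the PLAY RECAP shows unreachable=0 and failed=0, as above. The sketch below checks that automatically from saved output. The function name `recap_ok` and the log file name in the example are hypothetical.

```shell
# Read Ansible output on stdin; return non-zero if any recap line
# reports a failed or unreachable count greater than zero.
recap_ok() {
    awk '
        /unreachable=[0-9]+/ && /failed=[0-9]+/ {
            for (i = 1; i <= NF; i++) {
                split($i, kv, "=")
                if ((kv[1] == "unreachable" || kv[1] == "failed") && kv[2] + 0 > 0) {
                    bad = 1
                }
            }
        }
        END { exit (bad ? 1 : 0) }
    '
}

# Example:
#   ansible-playbook -i inventory/mycluster/hosts.yaml --become --become-user=root cluster.yml | tee deploy.log
#   recap_ok < deploy.log && echo "deployment finished cleanly"
```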

Functional Verification

Command completion

# Enable kubectl bash completion

root@k8s-master71u:~# echo "source <(kubectl completion bash)" >> /etc/profile

root@k8s-master71u:~# source /etc/profile

/etc/hosts

root@k8s-master71u:~# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain

127.0.1.1 k8s-master71u

# The following lines are desirable for IPv6 capable hosts

::1 ip6-localhost ip6-loopback localhost6 localhost6.localdomain

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

# Ansible inventory hosts BEGIN

192.168.1.71 k8s-master71u.cluster.local k8s-master71u

192.168.1.72 k8s-master72u.cluster.local k8s-master72u

192.168.1.73 k8s-master73u.cluster.local k8s-master73u

192.168.1.75 k8s-node75u.cluster.local k8s-node75u

192.168.1.76 k8s-node76u.cluster.local k8s-node76u

# Ansible inventory hosts END

192.168.1.74 k8s-apiserver-lb.jimmyhome.tw

Connection configuration file (kubeconfig)

As shown, the server address uses the VIP's domain name, k8s-apiserver-lb.jimmyhome.tw.

root@k8s-master71u:~# cat .kube/config

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUMvakNDQWVhZ0F3SUJBZ0lCQURBTkJna3Foa2lHOXcwQkFRc0ZBREFWTVJNd0VRWURWUVFERXdwcmRXSmwKY201bGRHVnpNQjRYRFRJek1USXdNekUwTkRVME9Wb1hEVE16TVRFek1ERTBORFUwT1Zvd0ZURVRNQkVHQTFVRQpBeE1LYTNWaVpYSnVaWFJsY3pDQ0FTSXdEUVlKS29aSWh2Y05BUUVCQlFBRGdnRVBBRENDQVFvQ2dnRUJBTHovCmVJQmpqY2xCd3ZtTWV2YlhsaDV4ZW1HcjVxRlBlRkxsMGhQcERGaW1lcHFGREJkZWl0V0ppTmdGSUFVa3JzaE0KdkdtdXVjNy9mMGt5UUFGVFprTDBzUFI3QUlnbHlyeS9Hc0M1dzRZUEdYTHVpZmRoUGczM09QREdTSWNCejRYMAoyNU1aR1hEcWU2TzhTS2JyOFZidnpNVkxBK1JjbldQVTNIOGR2Znd6N2FxY0N3MlRwdFFxU1VnbVhzS1Z0ZTIzCmgrUGJDMW90OUU0UTYzYXFGQmRWei91aGJ4VHVFYm8wb1c5T3p3c2V1RTJLMzQ0RE1qZWxvZy9rMVdNeFA2OGwKYWRuOC9MbkdPNUJtTlp2UUZ6Q05kaXFkcm1YdXRubUk5N3BYY0dyN0tIdzYzYzZ2dTZ5aGZHMXNjRjg3WG40bQpLZmo5eDRDQ0cvVUJWc2RIV1lzQ0F3RUFBYU5aTUZjd0RnWURWUjBQQVFIL0JBUURBZ0trTUE4R0ExVWRFd0VCCi93UUZNQU1CQWY4d0hRWURWUjBPQkJZRUZFK0lJS2NBcTBLbVl3SUpFYjJDWER4VHFibXhNQlVHQTFVZEVRUU8KTUF5Q0NtdDFZbVZ5Ym1WMFpYTXdEUVlKS29aSWh2Y05BUUVMQlFBRGdnRUJBRWF3SmEwaEVCZmJ5VG1UazlORQoweGZYUm12SnYrOGpZSlVIZExENmkvYXppQnpKMUNPUlRKKzUxbVYrWmNIZFJWbDE3UkNOZDY4MU9RMkRFWkhWCi83aVphSGxvYldNM3cvUjAxSzdXZm4yQUJsQ09lVkU3d3RKOUc0VEMzK2FVNEhaZFhsdlNLSkt6Y0oxbjZ2V04KMjErbUp1UHdLQXlJTVZEYVB6OGx2QVhxbDR2elBGWTRUd1k1OWlORVZYaFBuUHBiOWhiUGVxTUtSMG80UFBTMgp2YmNlOGw5R016aVpEaEQxb0RDSXBacGE5VzVneXFYdDBTbXk3RVJBWkx2V1N3ZHVrVnJoMXcrWVh3TEFzajF6CkZhNGJKWDExOGdQT3JUVi8rYTNXZXVpYk5JOFNNZm5KSXhHTVduUkpEemVwYW1oUUdiZmtFT3BzdHpRQms2ZGoKd0QwPQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

server: https://k8s-apiserver-lb.jimmyhome.tw:7443

name: cluster.local

contexts:

- context:

cluster: cluster.local

user: kubernetes-admin

name: kubernetes-admin@cluster.local

current-context: kubernetes-admin@cluster.local

kind: Config

preferences: {}

users:

- name: kubernetes-admin

user:

client-certificate-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURJVENDQWdtZ0F3SUJBZ0lJSjc2UTFQWXh4Zm93RFFZSktvWklodmNOQVFFTEJRQXdGVEVUTUJFR0ExVUUKQXhNS2EzVmlaWEp1WlhSbGN6QWVGdzB5TXpFeU1ETXhORFExTkRsYUZ3MHlOREV5TURJeE5EUTFOVEphTURReApGekFWQmdOVkJBb1REbk41YzNSbGJUcHRZWE4wWlhKek1Sa3dGd1lEVlFRREV4QnJkV0psY201bGRHVnpMV0ZrCmJXbHVNSUlCSWpBTkJna3Foa2lHOXcwQkFRRUZBQU9DQVE4QU1JSUJDZ0tDQVFFQXVOK0pkMHE1T0xnWVlhaE0Kdnp4YTB0M2E5U2psdTZTZzFUWW5TbE5LRTBYeEQrOTErUmhpV0dUc2o3RlhhNmpLSkd2WE1FT1ZWUVN4UktrQQpwNE8ybDM5L3gyUDlPQ3dZWEovZEJCRFRIQUZBdWNzYWRoa0VjV3gvbnNVZWZQUE44cWxNV3YrN1B3clRzQUpFCmJBalZYSEVERVhDM3VVN2ZWM3lHaEJWVnRHZ29mOFdMdEluN3k3b2ozbnUxbldPY1ZVQ0dhQ09ncXNPdURERGQKVms0QUpyWnFWTWllUDAvWkk0YjhuMkJkZHREbUIyRVl5NmpsZ1M5eVIyYk5nVnNjaW0zMS9MTjQxWEI1YnViYQptbzAyWWIzcE5QN3VzYmtRZndNalhPZ1VrM3U2dks2Ky9hSzQ4WWhndUZvWi9wUVR2ejJtUUpKeldWU2dvYW9mCk5VTTdzUUlEQVFBQm8xWXdWREFPQmdOVkhROEJBZjhFQkFNQ0JhQXdFd1lEVlIwbEJBd3dDZ1lJS3dZQkJRVUgKQXdJd0RBWURWUjBUQVFIL0JBSXdBREFmQmdOVkhTTUVHREFXZ0JSUGlDQ25BS3RDcG1NQ0NSRzlnbHc4VTZtNQpzVEFOQmdrcWhraUc5dzBCQVFzRkFBT0NBUUVBbHQwNXJSVkhhc25GTjh6ZlJDUXVOT2hSKzhxNW5tRnNXU0pUCnFIeW92Uys2NFBkRzBJU21jSTYvanVhTmU1ZzhHdENkTlZoMmVSendLakxZZHc0RGZCUXBTTWh5UEYxZlFLYzEKMmthS2twTzh1MjQ4alZscitaQTV3Q2FaeWh3Qy8yTFFIdGxpdjZnWFNXTjZoNU5IUjNQdzhuWFBJMXQ5Q3JsegpUTCtQcFI3Ti9UUXczOWJqOUgrU0RuZTh2eVNoSVBCeXIzbzhSYXA4K3RMZVF6eVMwWWZNU1BTVnJKZjJMVFFwCnBhaWEyYVF5MVRUcXlicEQxODFsY2RzYzZxOU16cVlseE9zdE5QdG1ES3BTNFdsdFE3bDlLZVVLKzhtdmh0TWwKZXRVZ3lleXdPWmdIcUsrd0lLaC9aMzJScG1LODlub05vOHJWTHdvTGxkMjRFSXpDbmc9PQotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==

client-key-data: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFcEFJQkFBS0NBUUVBdU4rSmQwcTVPTGdZWWFoTXZ6eGEwdDNhOVNqbHU2U2cxVFluU2xOS0UwWHhEKzkxCitSaGlXR1RzajdGWGE2aktKR3ZYTUVPVlZRU3hSS2tBcDRPMmwzOS94MlA5T0N3WVhKL2RCQkRUSEFGQXVjc2EKZGhrRWNXeC9uc1VlZlBQTjhxbE1Xdis3UHdyVHNBSkViQWpWWEhFREVYQzN1VTdmVjN5R2hCVlZ0R2dvZjhXTAp0SW43eTdvajNudTFuV09jVlVDR2FDT2dxc091REREZFZrNEFKclpxVk1pZVAwL1pJNGI4bjJCZGR0RG1CMkVZCnk2amxnUzl5UjJiTmdWc2NpbTMxL0xONDFYQjVidWJhbW8wMlliM3BOUDd1c2JrUWZ3TWpYT2dVazN1NnZLNisKL2FLNDhZaGd1Rm9aL3BRVHZ6Mm1RSkp6V1ZTZ29hb2ZOVU03c1FJREFRQUJBb0lCQVFDY2J0SmtOYjk3SmhQRApkdVRTSU9EOWN5c1dyYStQVXVPZzVuemlvSTJhdDJFZXlkSjZuODUvMjQ1c25IUkxyZnkyU3VaQWViOS92RU8vCnhIM0FRV3ljenc4eGlnTTNwK0JKYUNCZGsxci9aSFAvZ3NQMlVINzQ5d1VhTk5QeWlWNm9TZWRKVFFHRmU4VGEKTjJEc1JhRTg0b2ZsRndydmE3VUMwMlVEbVFYM2E0YXBiMnVNRklMSnpHenJ4bmM0RDY1VHBwQUxwM2dYclo0YwpLRU5sU051dG03TVdza2JPUDB3UzJNNTk2U0R3LzNEQkQ5cVB4Y2ZsR0NyMGFDOFp6cGRUQnpiTjg4TzNORTVNClk3RFFsZHZaRHE0Q1h3QnhqSk9jN2Q1RE9VNWd2aHg5K1Y0NFZtWUNLMHV0UkFDaEliNC9lYlMweWw0U1ZMSnIKV0NUMDVwV1JBb0dCQU0xZEMzNkM5WVFZZDVDRng3Q2Q4TXlUUVY3NnppNXVlMUFiWmtPM1FRcmJ3QVR6UG9RdApsNm1vOUF6dVpHUnpreXVBU1AwRW5XbWQ1QWd3N3MybWphbTRDYndnK21xaFFpUHBYaTYyay8rVjlJdCtycDVVCjNyeldteGZxNnVWZ0lUREdBSloyckVpOVB4Q091RU1JdEw3VHJRbnBVTWVBZjZXbTdyb3VlVVQxQW9HQkFPWjEKR3ZHK0ZVVGxVL3d5WlJmOTRSOUlRQVBrM05tSHhua1JQUENtb2xXYUNNZWRmK2JvaVZlQnJka2NCQ2RRYW1TYwpReERBTW4wekVjMVQyT0E4d1BoTDJaYWd0L2FEU1dsS1RsdFF2SUNhUy94TUx3UmFGQXlJQkE3a3JOUk42TWdYCkViM093Z3FETmZkNXFIODRxMW1NbzVRVzBRVGNaL1VDb2ZLcEpNWk5Bb0dBVkJ1TWJwY0NLTVRBaTA5UE5yV28KL3BBODBNS1ZtUXlrc20xV1Y5dUE1d3FUUFRQR1llb3VXRTBiRHdTLzF5aENtU2xrTzBRZG1Ea1RRSXVSOG1ZSgpWUDVMOW1IblRhNlg0UTllQkhIQWNZZ2Y3TlhJZkk0ejMxRmhtYzBid1MrNnlEZi8yNS9rOWJHVVY1cXNPc0FoCkRwcXhId01RazNTOFVzTG91Ulg1a3RVQ2dZRUFrSGhCRitMTmVvODVBeFNrZzFISVdzLzBNWHk3WmpMVG5Qbk4KZGg5QURPR3ZOMVBvNWx4SUhPOVNpSlFqbG5HM0FMTms1NDlWRjE5NGZYdGVyZFBvTkw5My9CRnN3Y0N6dUttNApUVTVqblVzYzcyRGk2SnQvamd1R1g3L0RDS1IrbFZEQThuZzI5RmdrOEtyM2tpbDRZWDdrM09VZ3l5ZFFsQ3UrClVsenVqTkVDZ1lCZW1xaWowMjBXQVFkTWZ
nbnRtVk9keWVybC9IZmxhZDQwRkw2cU8wRytDRWtlSXdpZkRnVU8Kc3FIOWFRR1p3NzY4STZDUmJPYlhJMzZueHhtVXRQdFY0TllqTGFoU0lySGhEeVI5VGhvL2o3bnh3NnMyVXRwOQpLbVZlb1dtT3JGcS9LVGJHK055MU15dHpaUWhRYzJBV1oyamxDcDVaVm8ycGo0aWF1Q2I0K0E9PQotLS0tLUVORCBSU0EgUFJJVkFURSBLRVktLS0tLQo=

Nodes are Ready

root@k8s-master71u:~# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8s-master71u Ready control-plane 12m v1.26.3 192.168.1.71 <none> Ubuntu 22.04.3 LTS 5.15.0-84-generic docker://20.10.20

k8s-master72u Ready control-plane 12m v1.26.3 192.168.1.72 <none> Ubuntu 22.04.3 LTS 5.15.0-84-generic docker://20.10.20

k8s-master73u Ready control-plane 12m v1.26.3 192.168.1.73 <none> Ubuntu 22.04.3 LTS 5.15.0-84-generic docker://20.10.20

k8s-node75u Ready <none> 11m v1.26.3 192.168.1.75 <none> Ubuntu 22.04.3 LTS 5.15.0-84-generic docker://20.10.20

k8s-node76u Ready <none> 10m v1.26.3 192.168.1.76 <none> Ubuntu 22.04.3 LTS 5.15.0-84-generic docker://20.10.20

All master nodes are tainted

root@k8s-master71u:~# kubectl get nodes k8s-master71u -o yaml | grep -i -A 2 taint

taints:

- effect: NoSchedule

key: node-role.kubernetes.io/control-plane

root@k8s-master71u:~# kubectl get nodes k8s-master72u -o yaml | grep -i -A 2 taint

taints:

- effect: NoSchedule

key: node-role.kubernetes.io/control-plane

root@k8s-master71u:~# kubectl get nodes k8s-master73u -o yaml | grep -i -A 2 taint

taints:

- effect: NoSchedule

key: node-role.kubernetes.io/control-plane

Helm is installed

root@k8s-master71u:~# helm version

version.BuildInfo{Version:"v3.13.1", GitCommit:"3547a4b5bf5edb5478ce352e18858d8a552a4110", GitTreeState:"clean", GoVersion:"go1.20.8"}

All Pods are Running

root@k8s-master71u:~# kubectl get pod -A -o wide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

ingress-nginx ingress-nginx-controller-46rbx 1/1 Running 0 8m16s 192.168.1.75 k8s-node75u <none> <none>

ingress-nginx ingress-nginx-controller-7ccdp 1/1 Running 0 8m16s 192.168.1.72 k8s-master72u <none> <none>

ingress-nginx ingress-nginx-controller-fqvxb 1/1 Running 0 8m16s 192.168.1.71 k8s-master71u <none> <none>

ingress-nginx ingress-nginx-controller-hjbv7 1/1 Running 0 8m16s 192.168.1.73 k8s-master73u <none> <none>

ingress-nginx ingress-nginx-controller-hlkh8 1/1 Running 0 8m16s 192.168.1.76 k8s-node76u <none> <none>

kube-system calico-kube-controllers-777ff6cddb-d4htx 1/1 Running 0 8m31s 10.201.14.129 k8s-node75u <none> <none>

kube-system calico-node-4qhfj 1/1 Running 0 9m56s 192.168.1.72 k8s-master72u <none> <none>

kube-system calico-node-9wrql 1/1 Running 0 9m56s 192.168.1.76 k8s-node76u <none> <none>

kube-system calico-node-9xzmn 1/1 Running 0 9m56s 192.168.1.75 k8s-node75u <none> <none>

kube-system calico-node-m975z 1/1 Running 0 9m56s 192.168.1.73 k8s-master73u <none> <none>

kube-system calico-node-s9w4x 1/1 Running 0 9m56s 192.168.1.71 k8s-master71u <none> <none>

kube-system coredns-57c7559cc8-sq8c4 1/1 Running 0 7m39s 10.201.96.1 k8s-master73u <none> <none>

kube-system coredns-57c7559cc8-vrf7g 1/1 Running 0 7m47s 10.201.133.1 k8s-master71u <none> <none>

kube-system dns-autoscaler-9875c9b44-9x7cg 1/1 Running 0 7m43s 10.201.126.1 k8s-master72u <none> <none>

kube-system kube-apiserver-k8s-master71u 1/1 Running 1 (3m18s ago) 13m 192.168.1.71 k8s-master71u <none> <none>

kube-system kube-apiserver-k8s-master72u 1/1 Running 1 (3m18s ago) 12m 192.168.1.72 k8s-master72u <none> <none>

kube-system kube-apiserver-k8s-master73u 1/1 Running 1 (3m18s ago) 11m 192.168.1.73 k8s-master73u <none> <none>

kube-system kube-controller-manager-k8s-master71u 1/1 Running 2 (3m49s ago) 13m 192.168.1.71 k8s-master71u <none> <none>

kube-system kube-controller-manager-k8s-master72u 1/1 Running 2 (3m49s ago) 12m 192.168.1.72 k8s-master72u <none> <none>

kube-system kube-controller-manager-k8s-master73u 1/1 Running 2 (3m49s ago) 12m 192.168.1.73 k8s-master73u <none> <none>

kube-system kube-proxy-6rz9s 1/1 Running 0 11m 192.168.1.76 k8s-node76u <none> <none>

kube-system kube-proxy-jqvw9 1/1 Running 0 11m 192.168.1.75 k8s-node75u <none> <none>

kube-system kube-proxy-mtclv 1/1 Running 0 11m 192.168.1.73 k8s-master73u <none> <none>

kube-system kube-proxy-rsv52 1/1 Running 0 11m 192.168.1.71 k8s-master71u <none> <none>

kube-system kube-proxy-vmnwj 1/1 Running 0 11m 192.168.1.72 k8s-master72u <none> <none>

kube-system kube-scheduler-k8s-master71u 1/1 Running 1 13m 192.168.1.71 k8s-master71u <none> <none>

kube-system kube-scheduler-k8s-master72u 1/1 Running 1 12m 192.168.1.72 k8s-master72u <none> <none>

kube-system kube-scheduler-k8s-master73u 1/1 Running 2 (3m34s ago) 11m 192.168.1.73 k8s-master73u <none> <none>

kube-system metrics-server-694db59894-4hfz5 1/1 Running 0 6m19s 10.201.255.193 k8s-node76u <none> <none>

kube-system nodelocaldns-5kb8v 1/1 Running 0 7m40s 192.168.1.72 k8s-master72u <none> <none>

kube-system nodelocaldns-894wn 1/1 Running 0 7m40s 192.168.1.71 k8s-master71u <none> <none>

kube-system nodelocaldns-lkmlx 1/1 Running 0 7m40s 192.168.1.75 k8s-node75u <none> <none>

kube-system nodelocaldns-q9q9s 1/1 Running 0 7m40s 192.168.1.73 k8s-master73u <none> <none>

kube-system nodelocaldns-qgrqs 1/1 Running 0 7m40s 192.168.1.76 k8s-node76u <none> <none>

YAML files used to deploy Kubernetes

root@k8s-master71u:~# ll /etc/kubernetes/

total 412

drwxr-xr-x 5 kube root 4096 Dec 3 14:51 ./

drwxr-xr-x 105 root root 8192 Dec 3 14:44 ../

drwxr-xr-x 4 root root 49 Dec 3 14:52 addons/

-rw------- 1 root root 5669 Dec 3 14:45 admin.conf

-rw-r--r-- 1 root root 1290 Dec 3 14:49 calico-config.yml

-rw-r--r-- 1 root root 550 Dec 3 14:49 calico-crb.yml

-rw-r--r-- 1 root root 4227 Dec 3 14:49 calico-cr.yml

-rw-r--r-- 1 root root 159 Dec 3 14:49 calico-ipamconfig.yml

-rw-r--r-- 1 root root 1581 Dec 3 14:50 calico-kube-controllers.yml

-rw-r--r-- 1 root root 301 Dec 3 14:50 calico-kube-crb.yml

-rw-r--r-- 1 root root 1733 Dec 3 14:50 calico-kube-cr.yml

-rw-r--r-- 1 root root 107 Dec 3 14:50 calico-kube-sa.yml

-rw-r--r-- 1 root root 197 Dec 3 14:49 calico-node-sa.yml

-rw-r--r-- 1 root root 11091 Dec 3 14:49 calico-node.yml

-rw------- 1 root root 5701 Dec 3 14:47 controller-manager.conf

-rw------- 1 root root 5684 Dec 3 14:45 'controller-manager.conf.15377.2023-12-03@14:47:03~'

-rw-r--r-- 1 root root 451 Dec 3 14:51 coredns-clusterrolebinding.yml

-rw-r--r-- 1 root root 473 Dec 3 14:51 coredns-clusterrole.yml

-rw-r--r-- 1 root root 601 Dec 3 14:51 coredns-config.yml

-rw-r--r-- 1 root root 3130 Dec 3 14:51 coredns-deployment.yml

-rw-r--r-- 1 root root 190 Dec 3 14:51 coredns-sa.yml

-rw-r--r-- 1 root root 591 Dec 3 14:51 coredns-svc.yml

-rw-r--r-- 1 root root 959 Dec 3 14:51 dns-autoscaler-clusterrolebinding.yml

-rw-r--r-- 1 root root 1150 Dec 3 14:51 dns-autoscaler-clusterrole.yml

-rw-r--r-- 1 root root 763 Dec 3 14:51 dns-autoscaler-sa.yml

-rw-r--r-- 1 root root 2569 Dec 3 14:51 dns-autoscaler.yml

-rw-r--r-- 1 root root 219969 Dec 3 14:49 kdd-crds.yml

-rw-r----- 1 root root 3906 Dec 3 14:45 kubeadm-config.yaml

-rw-r--r-- 1 root root 469 Dec 3 14:26 kubeadm-images.yaml

-rw------- 1 root root 2017 Dec 3 14:46 kubelet.conf

-rw------- 1 root root 793 Dec 3 14:45 kubelet-config.yaml

-rw------- 1 root root 513 Dec 3 14:44 kubelet.env

-rw-r--r-- 1 root root 113 Dec 3 14:49 kubernetes-services-endpoint.yml

-rw-r--r-- 1 root root 199 Dec 3 14:45 kubescheduler-config.yaml

drwxr-xr-x 2 kube root 96 Dec 3 14:45 manifests/

-rw-r----- 1 root root 408 Dec 3 14:47 node-crb.yml

-rw-r--r-- 1 root root 1035 Dec 3 14:51 nodelocaldns-config.yml

-rw-r--r-- 1 root root 2661 Dec 3 14:51 nodelocaldns-daemonset.yml

-rw-r--r-- 1 root root 149 Dec 3 14:51 nodelocaldns-sa.yml

lrwxrwxrwx 1 root root 19 Dec 3 14:14 pki -> /etc/kubernetes/ssl/

-rw------- 1 root root 5649 Dec 3 14:47 scheduler.conf

-rw------- 1 root root 5632 Dec 3 14:45 'scheduler.conf.15389.2023-12-03@14:47:04~'

drwxr-xr-x 2 root root 4096 Dec 3 14:45 ssl/

Control-plane component manifests

root@k8s-master71u:~# ll /etc/kubernetes/manifests/

total 20

drwxr-xr-x 2 kube root 96 Dec 3 14:45 ./

drwxr-xr-x 5 kube root 4096 Dec 3 14:51 ../

-rw------- 1 root root 4727 Dec 3 14:45 kube-apiserver.yaml

-rw------- 1 root root 3757 Dec 3 14:45 kube-controller-manager.yaml

-rw------- 1 root root 1698 Dec 3 14:45 kube-scheduler.yaml

root@k8s-master71u:~# calicoctl get ippool -o wide

NAME CIDR NAT IPIPMODE VXLANMODE DISABLED DISABLEBGPEXPORT SELECTOR

default-pool 10.201.0.0/16 true Never Always false false all()

Test creating a Pod

root@k8s-master71u:~# kubectl run test-nginx --image=nginx:alpine

pod/test-nginx created

root@k8s-master71u:~# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

test-nginx 1/1 Running 0 10s 10.201.14.130 k8s-node75u <none> <none>

root@k8s-master71u:~# curl 10.201.14.130

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

Test Deployment, Service, and Ingress

root@k8s-master71u:~# cat deployment2.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

  labels:

    app: web2

  name: web2

spec:

  replicas: 3

  selector:

    matchLabels:

      app: web2

  template:

    metadata:

      labels:

        app: web2

    spec:

      containers:

      - image: nginx

        name: nginx

root@k8s-master71u:~# kubectl apply -f deployment2.yaml

root@k8s-master71u:~# cat service2.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    app: web2
  name: web2
spec:
  ports:
  - port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: web2
  type: NodePort

root@k8s-master71u:~# kubectl apply -f service2.yaml

root@k8s-master71u:~# cat ingress.yaml

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: web2
spec:
  ingressClassName: nginx
  rules:
  - host: web2.jimmyhome.tw
    http:
      paths:
      - backend:
          service:
            name: web2
            port:
              number: 80
        path: /
        pathType: Prefix

root@k8s-master71u:~# kubectl apply -f ingress.yaml

root@k8s-master71u:~# kubectl get pod,svc,ingress -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/test-nginx 1/1 Running 0 3m1s 10.201.14.130 k8s-node75u <none> <none>

pod/web2-5d48fb75c5-dt5xd 1/1 Running 0 113s 10.201.255.195 k8s-node76u <none> <none>

pod/web2-5d48fb75c5-ggmrz 1/1 Running 0 113s 10.201.14.131 k8s-node75u <none> <none>

pod/web2-5d48fb75c5-jsvck 1/1 Running 0 113s 10.201.255.194 k8s-node76u <none> <none>

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/kubernetes ClusterIP 10.202.0.1 <none> 443/TCP 22m <none>

service/web2 NodePort 10.202.72.45 <none> 80:31568/TCP 82s app=web2

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress.networking.k8s.io/web2 nginx web2.jimmyhome.tw 80 56s

The NodePort is reachable on every node: http://192.168.1.71:31568/ http://192.168.1.72:31568/ http://192.168.1.73:31568/ http://192.168.1.74:31568/ http://192.168.1.75:31568/ http://192.168.1.76:31568/
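
A quick way to confirm this from any machine on the same network is to probe each node in a loop. This is only a sketch: nodeport_urls and check_nodeports are hypothetical helper names, and the address range 192.168.1.71-76 and port 31568 are taken from the listing above.

```shell
# Print one NodePort URL per node for the host range FIRST..LAST.
nodeport_urls() {
  port=$1; first=$2; last=$3
  i=$first
  while [ "$i" -le "$last" ]; do
    echo "http://192.168.1.$i:$port/"
    i=$((i + 1))
  done
}

# Probe every URL and print its HTTP status code (000 means no connection).
check_nodeports() {
  nodeport_urls "$@" | while read -r url; do
    code=$(curl -s -o /dev/null -m 5 -w '%{http_code}' "$url")
    echo "$url -> $code"
  done
}

# Usage: check_nodeports 31568 71 76
```

Each node should answer with 200 no matter where the Pods are scheduled, because kube-proxy forwards NodePort traffic to the Service endpoints.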

The domain name is also reachable, routed through Ingress -> Service -> Pod.

chenqingze@chenqingze-MBP ~ % sudo vim /etc/hosts

192.168.1.75 web2.jimmyhome.tw
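
Instead of editing /etc/hosts, curl can pin the hostname to a node IP for a single request with --resolve. The sketch below assumes the ingress controller answers on port 80 of 192.168.1.75, as the hosts entry above implies; run and ingress_probe are hypothetical helpers, and DRY_RUN=1 only prints the command.

```shell
# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Request http://HOST/ while resolving HOST to NODE_IP for this call only.
# Prints the HTTP status code returned through the Ingress.
ingress_probe() {
  host=$1; node_ip=$2; port=${3:-80}
  run curl -s -o /dev/null -w '%{http_code}' \
    --resolve "$host:$port:$node_ip" "http://$host/"
}

# Usage: ingress_probe web2.jimmyhome.tw 192.168.1.75
```

Because the Ingress rule matches on the Host header, a request sent to the bare node IP without the hostname would not reach the web2 Service.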

etcd service

root@k8s-master71u:~# systemctl status etcd.service

● etcd.service - etcd

Loaded: loaded (/etc/systemd/system/etcd.service; enabled; vendor preset: enabled)

Active: active (running) since Sun 2023-12-03 14:43:39 UTC; 28min ago

Main PID: 13409 (etcd)

Tasks: 14 (limit: 9387)

Memory: 117.0M

CPU: 51.716s

CGroup: /system.slice/etcd.service

└─13409 /usr/local/bin/etcd

Dec 03 14:55:55 k8s-master71u etcd[13409]: {"level":"info","ts":"2023-12-03T14:55:55.63607Z","caller":"mvcc/hash.go:137","msg":"storing new hash","hash":1201138327,"re>

Dec 03 15:06:09 k8s-master71u etcd[13409]: {"level":"info","ts":"2023-12-03T15:06:09.854317Z","caller":"mvcc/index.go:214","msg":"compact tree index","revision":3724}

Dec 03 15:06:09 k8s-master71u etcd[13409]: {"level":"info","ts":"2023-12-03T15:06:09.942986Z","caller":"mvcc/kvstore_compaction.go:66","msg":"finished scheduled compac>

Dec 03 15:06:09 k8s-master71u etcd[13409]: {"level":"info","ts":"2023-12-03T15:06:09.943036Z","caller":"mvcc/hash.go:137","msg":"storing new hash","hash":2096861132,"r>

Dec 03 15:07:01 k8s-master71u etcd[13409]: {"level":"warn","ts":"2023-12-03T15:07:01.091549Z","caller":"etcdserver/util.go:170","msg":"apply request took too long","to>

Dec 03 15:07:01 k8s-master71u etcd[13409]: {"level":"info","ts":"2023-12-03T15:07:01.091613Z","caller":"traceutil/trace.go:171","msg":"trace[2099372944] range","detail>

Dec 03 15:07:02 k8s-master71u etcd[13409]: {"level":"info","ts":"2023-12-03T15:07:02.663992Z","caller":"traceutil/trace.go:171","msg":"trace[1476779153] transaction",">

Dec 03 15:11:09 k8s-master71u etcd[13409]: {"level":"info","ts":"2023-12-03T15:11:09.86509Z","caller":"mvcc/index.go:214","msg":"compact tree index","revision":4506}

Dec 03 15:11:09 k8s-master71u etcd[13409]: {"level":"info","ts":"2023-12-03T15:11:09.890206Z","caller":"mvcc/kvstore_compaction.go:66","msg":"finished scheduled compac>

Dec 03 15:11:09 k8s-master71u etcd[13409]: {"level":"info","ts":"2023-12-03T15:11:09.890258Z","caller":"mvcc/hash.go:137","msg":"storing new hash","hash":2253005372,"r>

cri-dockerd service

root@k8s-master71u:~# systemctl status cri-dockerd

● cri-dockerd.service - CRI Interface for Docker Application Container Engine

Loaded: loaded (/etc/systemd/system/cri-dockerd.service; enabled; vendor preset: enabled)

Active: active (running) since Sun 2023-12-03 14:25:01 UTC; 46min ago

TriggeredBy: ● cri-dockerd.socket

Docs: https://docs.mirantis.com

Main PID: 8096 (cri-dockerd)

Tasks: 14

Memory: 19.4M

CPU: 38.327s

CGroup: /system.slice/cri-dockerd.service

└─8096 /usr/local/bin/cri-dockerd --container-runtime-endpoint unix:///var/run/cri-dockerd.sock --cni-conf-dir=/etc/cni/net.d --cni-bin-dir=/opt/cni/bin ->

Dec 03 14:51:41 k8s-master71u cri-dockerd[8096]: 2023-12-03 14:51:41.774 [INFO][21767] dataplane_linux.go 473: Disabling IPv4 forwarding ContainerID="b05ee1aac5d857271>

Dec 03 14:51:41 k8s-master71u cri-dockerd[8096]: 2023-12-03 14:51:41.804 [INFO][21767] k8s.go 411: Added Mac, interface name, and active container ID to endpoint Conta>

Dec 03 14:51:41 k8s-master71u cri-dockerd[8096]: 2023-12-03 14:51:41.825 [INFO][21767] k8s.go 489: Wrote updated endpoint to datastore ContainerID="b05ee1aac5d85727107>

Dec 03 14:51:41 k8s-master71u cri-dockerd[8096]: time="2023-12-03T14:51:41Z" level=info msg="Pulling image registry.k8s.io/ingress-nginx/controller:v1.9.4: 05535d57e64>

Dec 03 14:51:51 k8s-master71u cri-dockerd[8096]: time="2023-12-03T14:51:51Z" level=info msg="Pulling image registry.k8s.io/ingress-nginx/controller:v1.9.4: 3da4cd537b4>

Dec 03 14:51:52 k8s-master71u cri-dockerd[8096]: time="2023-12-03T14:51:52Z" level=info msg="Will attempt to re-write config file /var/lib/docker/containers/e5ebeb0472>

Dec 03 14:51:53 k8s-master71u cri-dockerd[8096]: time="2023-12-03T14:51:53Z" level=info msg="Stop pulling image registry.k8s.io/ingress-nginx/controller:v1.9.4: Status>

Dec 03 14:55:33 k8s-master71u cri-dockerd[8096]: time="2023-12-03T14:55:33Z" level=info msg="Docker cri received runtime config &RuntimeConfig{NetworkConfig:&NetworkCo>

Dec 03 14:55:37 k8s-master71u cri-dockerd[8096]: time="2023-12-03T14:55:37Z" level=info msg="Will attempt to re-write config file /var/lib/docker/containers/f23d464928>

Dec 03 14:56:08 k8s-master71u cri-dockerd[8096]: time="2023-12-03T14:56:08Z" level=info msg="Will attempt to re-write config file /var/lib/docker/containers/651f69131d>

kubelet service

root@k8s-master71u:~# systemctl status kubelet

● kubelet.service - Kubernetes Kubelet Server

Loaded: loaded (/etc/systemd/system/kubelet.service; enabled; vendor preset: enabled)

Active: active (running) since Sun 2023-12-03 14:55:33 UTC; 16min ago

Docs: https://github.com/GoogleCloudPlatform/kubernetes

Main PID: 27162 (kubelet)

Tasks: 16 (limit: 9387)

Memory: 42.9M

CPU: 23.201s

CGroup: /system.slice/kubelet.service

└─27162 /usr/local/bin/kubelet --v=2 --node-ip=192.168.1.71 --hostname-override=k8s-master71u --bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.con>

Dec 03 15:11:31 k8s-master71u kubelet[27162]: E1203 15:11:31.892549 27162 cri_stats_provider.go:643] "Unable to fetch container log stats" err="failed to get fsstats>

Dec 03 15:11:33 k8s-master71u kubelet[27162]: I1203 15:11:33.187859 27162 kubelet_getters.go:182] "Pod status updated" pod="kube-system/kube-apiserver-k8s-master71u">

Dec 03 15:11:33 k8s-master71u kubelet[27162]: I1203 15:11:33.187909 27162 kubelet_getters.go:182] "Pod status updated" pod="kube-system/kube-controller-manager-k8s-m>

Dec 03 15:11:33 k8s-master71u kubelet[27162]: I1203 15:11:33.187921 27162 kubelet_getters.go:182] "Pod status updated" pod="kube-system/kube-scheduler-k8s-master71u">

Dec 03 15:11:42 k8s-master71u kubelet[27162]: E1203 15:11:42.968925 27162 cri_stats_provider.go:643] "Unable to fetch container log stats" err="failed to get fsstats>

Dec 03 15:11:42 k8s-master71u kubelet[27162]: E1203 15:11:42.969222 27162 cri_stats_provider.go:643] "Unable to fetch container log stats" err="failed to get fsstats>

Dec 03 15:11:54 k8s-master71u kubelet[27162]: E1203 15:11:54.050355 27162 cri_stats_provider.go:643] "Unable to fetch container log stats" err="failed to get fsstats>

Dec 03 15:11:54 k8s-master71u kubelet[27162]: E1203 15:11:54.050462 27162 cri_stats_provider.go:643] "Unable to fetch container log stats" err="failed to get fsstats>

Dec 03 15:12:05 k8s-master71u kubelet[27162]: E1203 15:12:05.124399 27162 cri_stats_provider.go:643] "Unable to fetch container log stats" err="failed to get fsstats>

Dec 03 15:12:05 k8s-master71u kubelet[27162]: E1203 15:12:05.124943 27162 cri_stats_provider.go:643] "Unable to fetch container log stats" err="failed to get fsstats>

Certificates and keys

root@k8s-master71u:~# cd /etc/kubernetes/pki

root@k8s-master71u:/etc/kubernetes/pki# ll

total 56

drwxr-xr-x 2 root root 4096 Dec 3 14:45 ./

drwxr-xr-x 5 kube root 4096 Dec 3 14:51 ../

-rw-r--r-- 1 root root 1428 Dec 3 14:45 apiserver.crt

-rw------- 1 root root 1679 Dec 3 14:45 apiserver.key

-rw-r--r-- 1 root root 1164 Dec 3 14:45 apiserver-kubelet-client.crt

-rw------- 1 root root 1679 Dec 3 14:45 apiserver-kubelet-client.key

-rw-r--r-- 1 root root 1099 Dec 3 14:45 ca.crt

-rw------- 1 root root 1675 Dec 3 14:45 ca.key

-rw-r--r-- 1 root root 1115 Dec 3 14:45 front-proxy-ca.crt

-rw------- 1 root root 1675 Dec 3 14:45 front-proxy-ca.key

-rw-r--r-- 1 root root 1119 Dec 3 14:45 front-proxy-client.crt

-rw------- 1 root root 1675 Dec 3 14:45 front-proxy-client.key

-rw------- 1 root root 1679 Dec 3 14:45 sa.key

-rw------- 1 root root 451 Dec 3 14:45 sa.pub

root@k8s-master71u:/etc/kubernetes/pki# for i in $(ls *.crt); do echo "===== $i ====="; openssl x509 -in $i -text -noout | grep -A 3 'Validity' ; done

===== apiserver.crt =====

Validity

Not Before: Dec 3 14:45:49 2023 GMT

Not After : Dec 2 14:45:50 2024 GMT

Subject: CN = kube-apiserver

===== apiserver-kubelet-client.crt =====

Validity

Not Before: Dec 3 14:45:49 2023 GMT

Not After : Dec 2 14:45:50 2024 GMT

Subject: O = system:masters, CN = kube-apiserver-kubelet-client

===== ca.crt =====

Validity

Not Before: Dec 3 14:45:49 2023 GMT

Not After : Nov 30 14:45:49 2033 GMT

Subject: CN = kubernetes

===== front-proxy-ca.crt =====

Validity

Not Before: Dec 3 14:45:50 2023 GMT

Not After : Nov 30 14:45:50 2033 GMT

Subject: CN = front-proxy-ca

===== front-proxy-client.crt =====

Validity

Not Before: Dec 3 14:45:50 2023 GMT

Not After : Dec 2 14:45:51 2024 GMT

Subject: CN = front-proxy-client
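
The dates above can be turned into a remaining-lifetime figure. A minimal sketch, assuming GNU date (for -d) and openssl are available on the node; to_epoch, days_between, and cert_days_left are hypothetical helper names. If kubeadm is on the PATH, `kubeadm certs check-expiration` reports similar information.

```shell
# Seconds since the epoch for an openssl-style date such as
# "Dec 2 14:45:50 2024 GMT". Relies on GNU date's -d parser.
to_epoch() {
  date -u -d "$1" +%s
}

# Whole days from START ($1) to END ($2), both in openssl's date format.
days_between() {
  echo $(( ( $(to_epoch "$2") - $(to_epoch "$1") ) / 86400 ))
}

# Whole days left before the certificate file $1 expires, measured from now.
cert_days_left() {
  end=$(openssl x509 -in "$1" -noout -enddate | cut -d= -f2)
  echo $(( ( $(to_epoch "$end") - $(date -u +%s) ) / 86400 ))
}

# Usage: cd /etc/kubernetes/pki && for c in *.crt; do echo "$c: $(cert_days_left "$c")"; done
```

With the values printed above, days_between 'Dec 3 14:45:49 2023 GMT' 'Dec 2 14:45:50 2024 GMT' gives 365, while the CA dates (2023 to 2033) give 3650, so the leaf certificates need renewal roughly once a year.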

etcd certificates and keys

root@k8s-master71u:/etc/kubernetes/pki# cd /etc/ssl/etcd/ssl/

root@k8s-master71u:/etc/ssl/etcd/ssl# ll

total 84

drwx------ 2 etcd root 4096 Dec 3 14:42 ./

drwx------ 3 etcd root 37 Dec 3 14:42 ../

-rwx------ 1 etcd root 1704 Dec 3 14:42 admin-k8s-master71u-key.pem*

-rwx------ 1 etcd root 1476 Dec 3 14:42 admin-k8s-master71u.pem*

-rwx------ 1 etcd root 1704 Dec 3 14:42 admin-k8s-master72u-key.pem*

-rwx------ 1 etcd root 1476 Dec 3 14:42 admin-k8s-master72u.pem*

-rwx------ 1 etcd root 1708 Dec 3 14:42 admin-k8s-master73u-key.pem*

-rwx------ 1 etcd root 1476 Dec 3 14:42 admin-k8s-master73u.pem*

-rwx------ 1 etcd root 1704 Dec 3 14:42 ca-key.pem*

-rwx------ 1 etcd root 1111 Dec 3 14:42 ca.pem*

-rwx------ 1 etcd root 1704 Dec 3 14:42 member-k8s-master71u-key.pem*

-rwx------ 1 etcd root 1480 Dec 3 14:42 member-k8s-master71u.pem*

-rwx------ 1 etcd root 1704 Dec 3 14:42 member-k8s-master72u-key.pem*

-rwx------ 1 etcd root 1480 Dec 3 14:42 member-k8s-master72u.pem*

-rwx------ 1 etcd root 1704 Dec 3 14:42 member-k8s-master73u-key.pem*

-rwx------ 1 etcd root 1480 Dec 3 14:42 member-k8s-master73u.pem*

-rwx------ 1 etcd root 1704 Dec 3 14:42 node-k8s-master71u-key.pem*

-rwx------ 1 etcd root 1476 Dec 3 14:42 node-k8s-master71u.pem*

-rwx------ 1 etcd root 1704 Dec 3 14:42 node-k8s-master72u-key.pem*

-rwx------ 1 etcd root 1476 Dec 3 14:42 node-k8s-master72u.pem*

-rwx------ 1 etcd root 1704 Dec 3 14:42 node-k8s-master73u-key.pem*

-rwx------ 1 etcd root 1476 Dec 3 14:42 node-k8s-master73u.pem*

root@k8s-master71u:/etc/ssl/etcd/ssl# for i in $(ls *.pem | egrep -v "key"); do echo "===== $i ====="; openssl x509 -in $i -text -noout | grep -A 3 'Validity' ; done

===== admin-k8s-master71u.pem =====

Validity

Not Before: Dec 3 14:42:05 2023 GMT

Not After : Nov 9 14:42:05 2123 GMT

Subject: CN = etcd-admin-k8s-master71u

===== admin-k8s-master72u.pem =====

Validity

Not Before: Dec 3 14:42:06 2023 GMT

Not After : Nov 9 14:42:06 2123 GMT

Subject: CN = etcd-admin-k8s-master72u

===== admin-k8s-master73u.pem =====

Validity

Not Before: Dec 3 14:42:07 2023 GMT

Not After : Nov 9 14:42:07 2123 GMT

Subject: CN = etcd-admin-k8s-master73u

===== ca.pem =====

Validity

Not Before: Dec 3 14:42:05 2023 GMT

Not After : Nov 9 14:42:05 2123 GMT

Subject: CN = etcd-ca

===== member-k8s-master71u.pem =====

Validity

Not Before: Dec 3 14:42:05 2023 GMT

Not After : Nov 9 14:42:05 2123 GMT

Subject: CN = etcd-member-k8s-master71u

===== member-k8s-master72u.pem =====

Validity

Not Before: Dec 3 14:42:06 2023 GMT

Not After : Nov 9 14:42:06 2123 GMT

Subject: CN = etcd-member-k8s-master72u

===== member-k8s-master73u.pem =====

Validity

Not Before: Dec 3 14:42:06 2023 GMT

Not After : Nov 9 14:42:06 2123 GMT

Subject: CN = etcd-member-k8s-master73u

===== node-k8s-master71u.pem =====

Validity

Not Before: Dec 3 14:42:07 2023 GMT

Not After : Nov 9 14:42:07 2123 GMT

Subject: CN = etcd-node-k8s-master71u

===== node-k8s-master72u.pem =====

Validity

Not Before: Dec 3 14:42:07 2023 GMT

Not After : Nov 9 14:42:07 2123 GMT

Subject: CN = etcd-node-k8s-master72u

===== node-k8s-master73u.pem =====

Validity

Not Before: Dec 3 14:42:07 2023 GMT

Not After : Nov 9 14:42:07 2123 GMT

Subject: CN = etcd-node-k8s-master73u
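
The admin certificate pairs listed above can serve as client credentials when talking to etcd over TLS. As a sketch, the following wraps etcdctl's endpoint health check; the endpoint URLs (port 2379 on 192.168.1.71-73) are assumptions about this cluster, and run and etcd_health are hypothetical helpers. With DRY_RUN=1 the command is only printed.

```shell
# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Check etcd health using the admin certificate of the given master.
#   $1: hostname used in the certificate file names (e.g. k8s-master71u)
#   $2: comma-separated endpoint list
etcd_health() {
  host=$1; endpoints=$2
  ssl=/etc/ssl/etcd/ssl
  run etcdctl --endpoints="$endpoints" \
    --cacert="$ssl/ca.pem" \
    --cert="$ssl/admin-$host.pem" \
    --key="$ssl/admin-$host-key.pem" \
    endpoint health
}

# Usage on k8s-master71u (endpoint addresses are assumed):
# etcd_health k8s-master71u https://192.168.1.71:2379,https://192.168.1.72:2379,https://192.168.1.73:2379
```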

Installing the Rancher management platform (high availability)

For detailed steps, see my post: https://blog.goldfishbrain-fighting.com/2023/kubernetes-helm/

1. Add the Helm chart repository

root@k8s-master71u:~# helm repo add rancher-stable https://releases.rancher.com/server-charts/stable

"rancher-stable" has been added to your repositories

2. Create a namespace for Rancher

root@k8s-master71u:~# kubectl create namespace cattle-system

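3. Choose an SSL configuration

Rancher-generated certificates (the default) require cert-manager.

4. Install cert-manager

# If you installed the CRDs manually instead of adding the '--set installCRDs=true' option to the Helm install command, upgrade the CRD resources before upgrading the Helm chart.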

root@k8s-master71u:~# kubectl apply -f https://github.com/cert-manager/cert-manager/releases/download/v1.11.0/cert-manager.crds.yaml

# Add the Jetstack Helm repository

root@k8s-master71u:~# helm repo add jetstack https://charts.jetstack.io

# Update the local Helm chart repository cache

root@k8s-master71u:~# helm repo update

Hang tight while we grab the latest from your chart repositories...

...Successfully got an update from the "jetstack" chart repository

...Successfully got an update from the "rancher-stable" chart repository

Update Complete. ⎈Happy Helming!⎈

# Install the cert-manager Helm chart

root@k8s-master71u:~# helm install cert-manager jetstack/cert-manager \
  --namespace cert-manager \
  --create-namespace \
  --version v1.11.0

# After installing cert-manager, verify that it deployed correctly by checking the running Pods in the cert-manager namespace

root@k8s-master71u:~# kubectl get pods --namespace cert-manager

NAME READY STATUS RESTARTS AGE

cert-manager-64f9f45d6f-vklzz 1/1 Running 0 37s

cert-manager-cainjector-56bbdd5c47-wv2l7 1/1 Running 0 37s

cert-manager-webhook-d4f4545d7-pw2cp 1/1 Running 0 37s

5. Install Rancher with Helm, according to the certificate option you chose

This setup uses Rancher-generated certificates.

Rancher Helm chart options: https://ranchermanager.docs.rancher.com/zh/getting-started/installation-and-upgrade/installation-references/helm-chart-options

ingressClassName must also be set to nginx, the ingress class used in this cluster.

root@k8s-master71u:~# helm install rancher rancher-stable/rancher \
  --namespace cattle-system \
  --set hostname=rancher.jimmyhome.tw \
  --set bootstrapPassword=admin \
  --set ingress.ingressClassName=nginx

NAME: rancher

LAST DEPLOYED: Sun Dec 3 15:40:43 2023

NAMESPACE: cattle-system

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

Rancher Server has been installed.

NOTE: Rancher may take several minutes to fully initialize. Please standby while Certificates are being issued, Containers are started and the Ingress rule comes up.

Check out our docs at https://rancher.com/docs/

If you provided your own bootstrap password during installation, browse to https://rancher.jimmyhome.tw to get started.

If this is the first time you installed Rancher, get started by running this command and clicking the URL it generates:

echo https://rancher.jimmyhome.tw/dashboard/?setup=$(kubectl get secret --namespace cattle-system bootstrap-secret -o go-template='{{.data.bootstrapPassword|base64decode}}')

To get just the bootstrap password on its own, run:

kubectl get secret --namespace cattle-system bootstrap-secret -o go-template='{{.data.bootstrapPassword|base64decode}}{{ "\n" }}'

Happy Containering!

# Wait for Rancher to be running

root@k8s-master71u:~# kubectl -n cattle-system rollout status deploy/rancher

Waiting for deployment "rancher" rollout to finish: 0 of 3 updated replicas are available...

Waiting for deployment "rancher" rollout to finish: 1 of 3 updated replicas are available...

Waiting for deployment "rancher" rollout to finish: 2 of 3 updated replicas are available...

deployment "rancher" successfully rolled out

6. Verify that Rancher Server deployed successfully

root@k8s-master71u:~# kubectl -n cattle-system get deploy

NAME READY UP-TO-DATE AVAILABLE AGE

rancher 3/3 3 3 5m2s

root@k8s-master71u:~# kubectl -n cattle-system get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

helm-operation-hpd69 2/2 Running 0 40s 10.201.14.138 k8s-node75u <none> <none>

rancher-64cf6ddd96-pcfv5 1/1 Running 0 5m11s 10.201.255.201 k8s-node76u <none> <none>

rancher-64cf6ddd96-q7dhp 1/1 Running 0 5m11s 10.201.255.202 k8s-node76u <none> <none>

rancher-64cf6ddd96-rr28z 1/1 Running 0 5m11s 10.201.14.137 k8s-node75u <none> <none>

root@k8s-master71u:~# kubectl -n cattle-system get ingress

NAME CLASS HOSTS ADDRESS PORTS AGE

rancher nginx rancher.jimmyhome.tw 80, 443 5m35s

# On my own laptop, add an /etc/hosts entry first to test web access

# If you have an internal DNS server, add an A record there instead

chenqingze@chenqingze-MBP ~ % sudo vim /etc/hosts

192.168.1.75 rancher.jimmyhome.tw

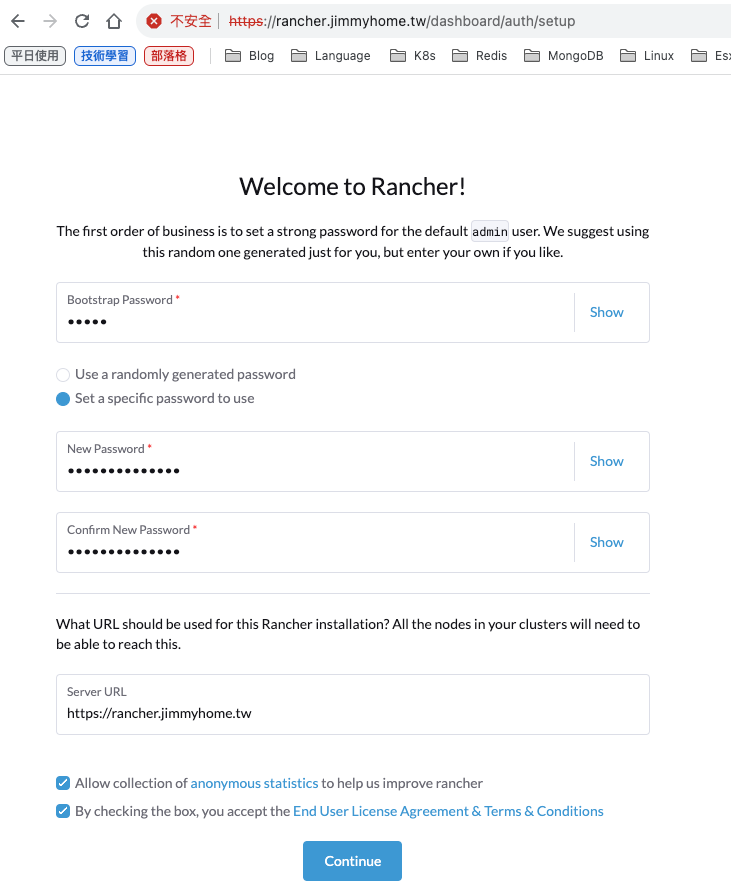

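Browsing to https://rancher.jimmyhome.tw shows the Rancher web page.

When helm install rancher finished above, its notes listed the following two commands, which retrieve the password of the default admin account.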

# If this is the first time you installed Rancher, get started by running this command and clicking the URL it generates

root@k8s-master71u:~# echo https://rancher.jimmyhome.tw/dashboard/?setup=$(kubectl get secret --namespace cattle-system bootstrap-secret -o go-template='{{.data.bootstrapPassword|base64decode}}')

https://rancher.jimmyhome.tw/dashboard/?setup=admin

# To get just the bootstrap password on its own, run

root@k8s-master71u:~# kubectl get secret --namespace cattle-system bootstrap-secret -o go-template='{{.data.bootstrapPassword|base64decode}}{{ "\n" }}'

admin
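
Both commands rely on the go-template base64decode function because Secret values are stored base64-encoded. An equivalent sketch with jsonpath and the base64 utility follows; decode_secret_value and bootstrap_password are hypothetical helper names.

```shell
# Decode one base64-encoded Secret value read from stdin.
decode_secret_value() {
  base64 -d
}

# Fetch the raw (still encoded) value with jsonpath, then decode it.
# Needs kubectl access to the cluster.
bootstrap_password() {
  kubectl get secret --namespace cattle-system bootstrap-secret \
    -o jsonpath='{.data.bootstrapPassword}' | decode_secret_value
}

# Usage: bootstrap_password; echo
```

The same pipeline works for any key under .data of a Secret, which is handy when no go-template is at hand.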

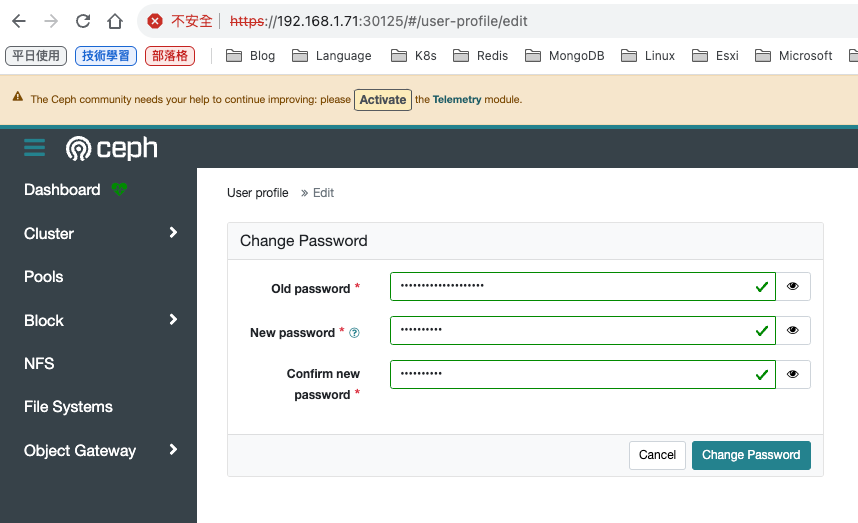

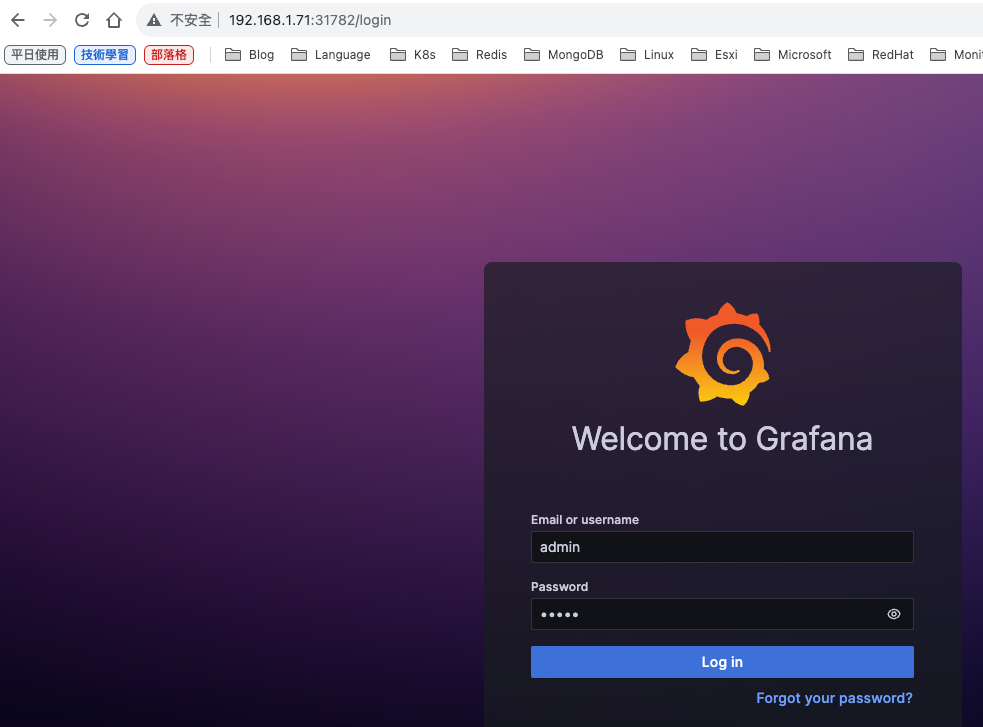

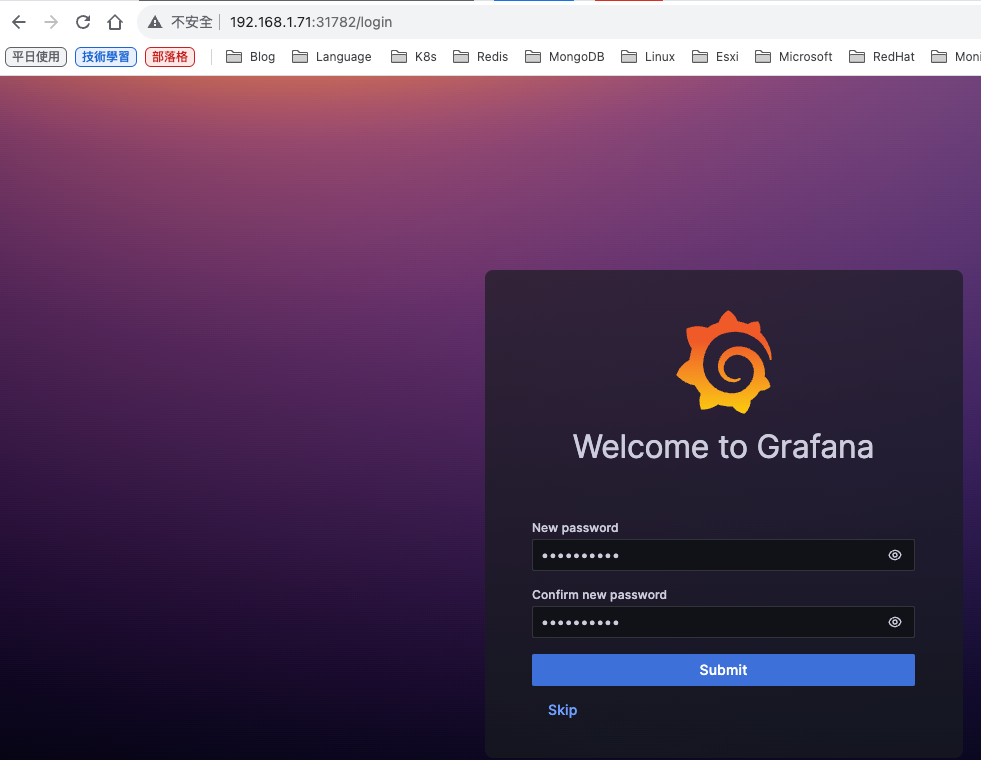

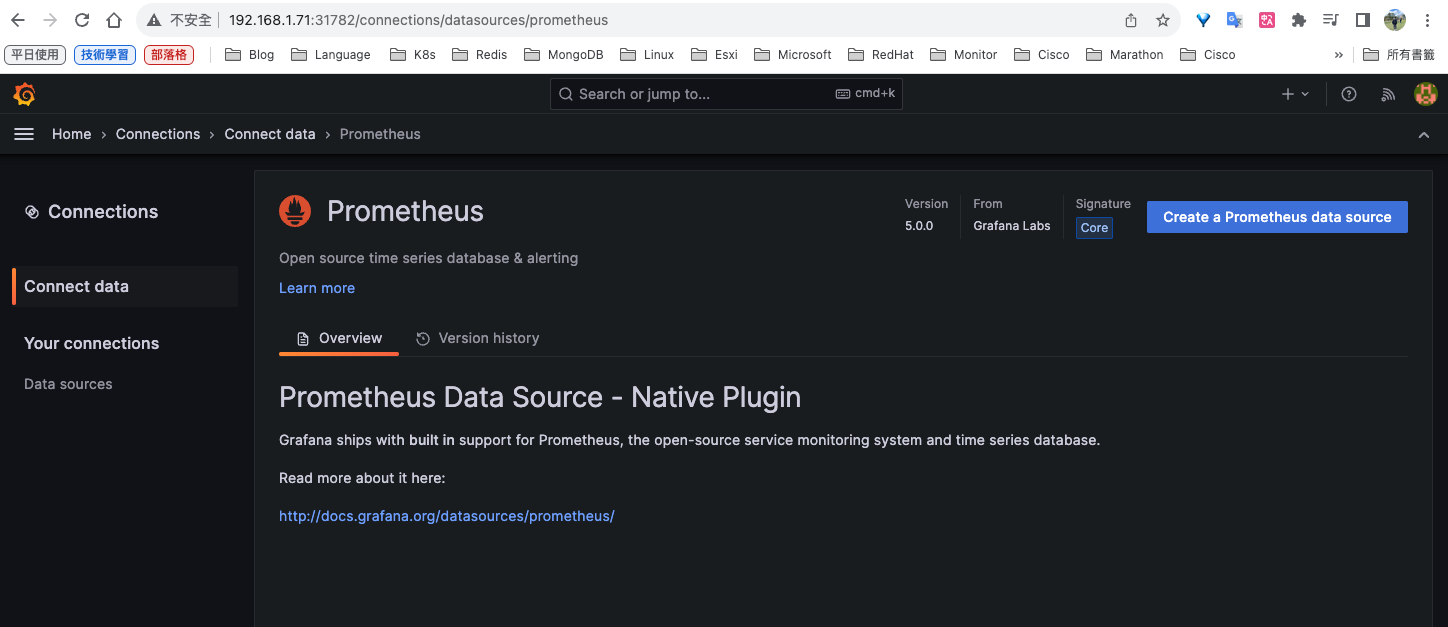

Reset the password of the admin account

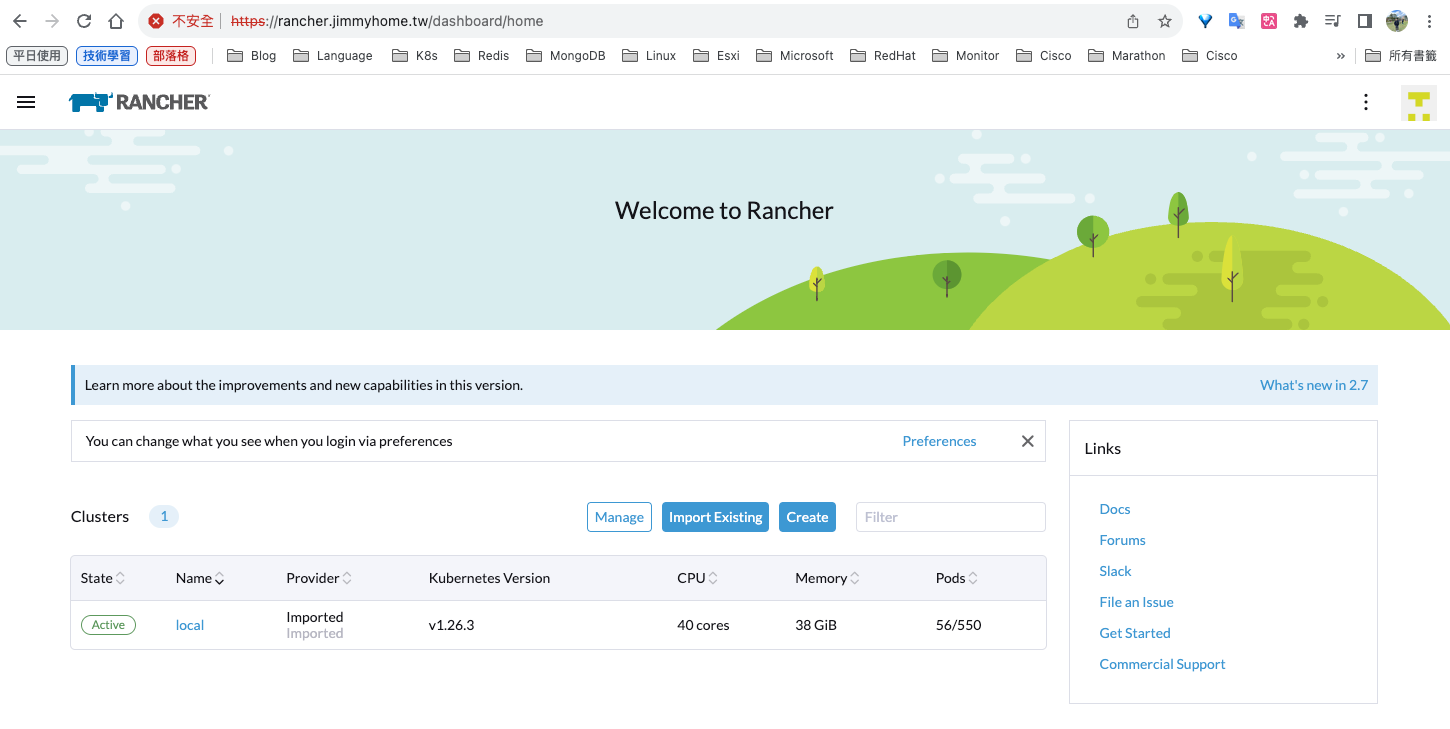

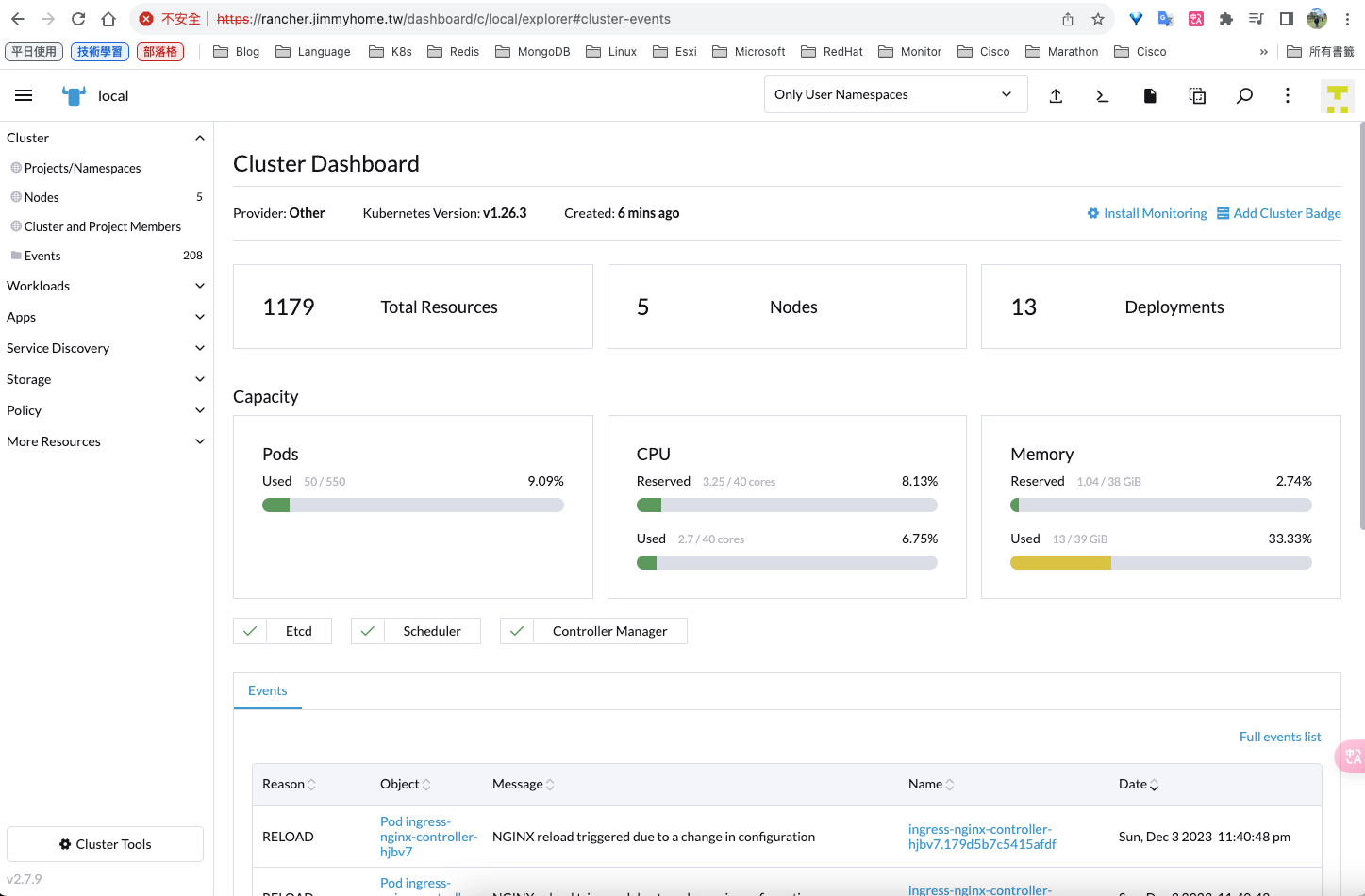

The cluster information is displayed, with the state shown as Active.

Open the cluster to see its detailed information.

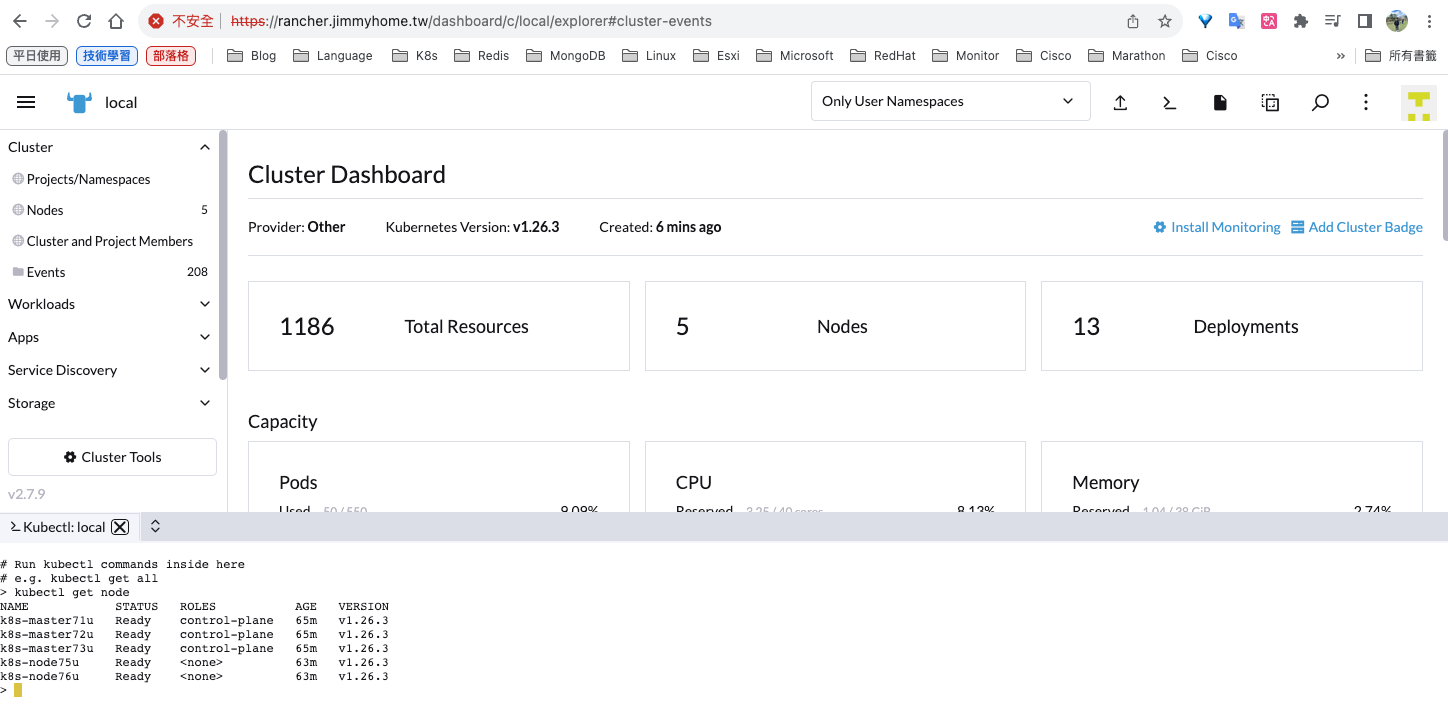

The cluster can also be managed from the dashboard shell.

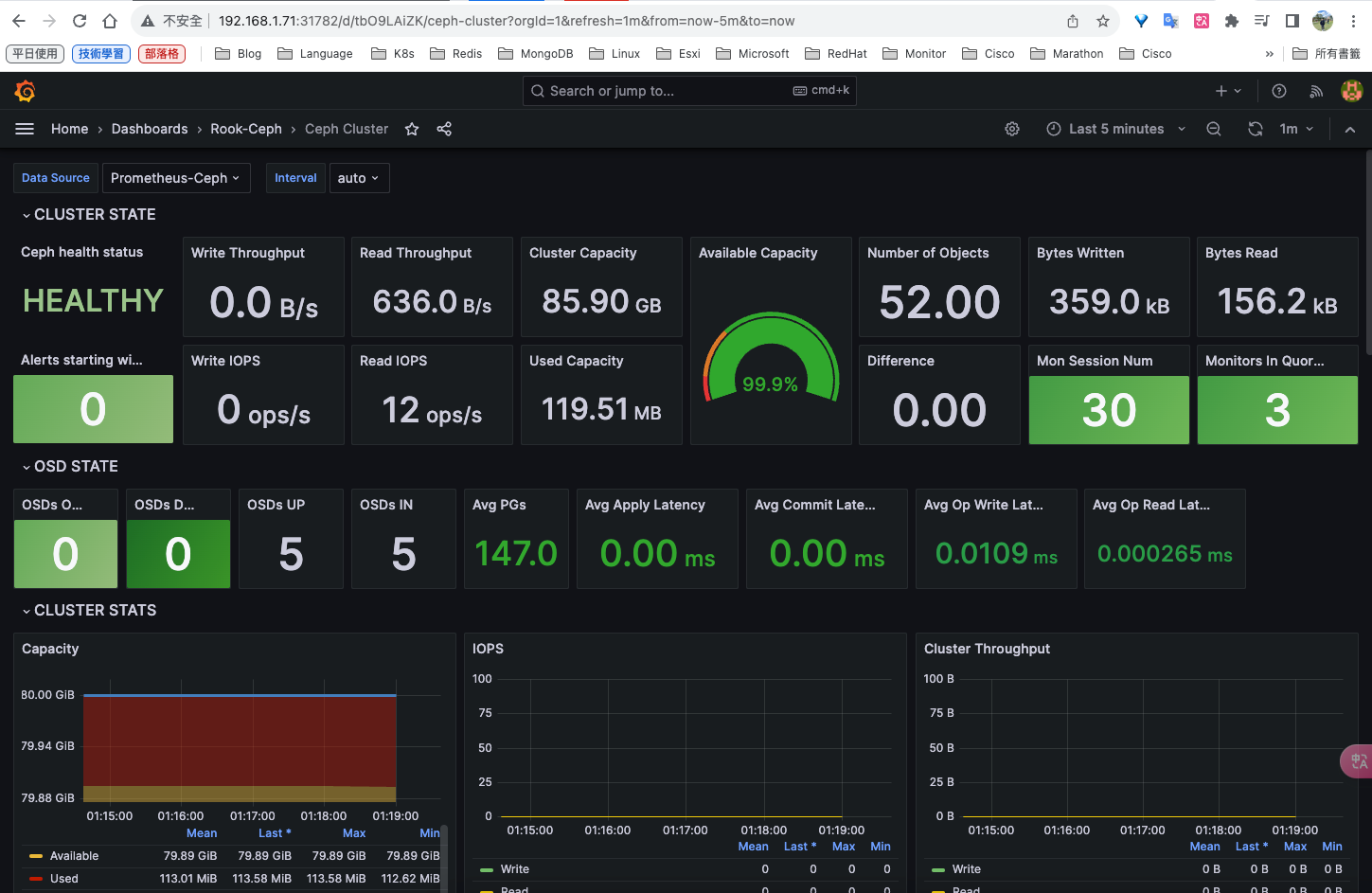

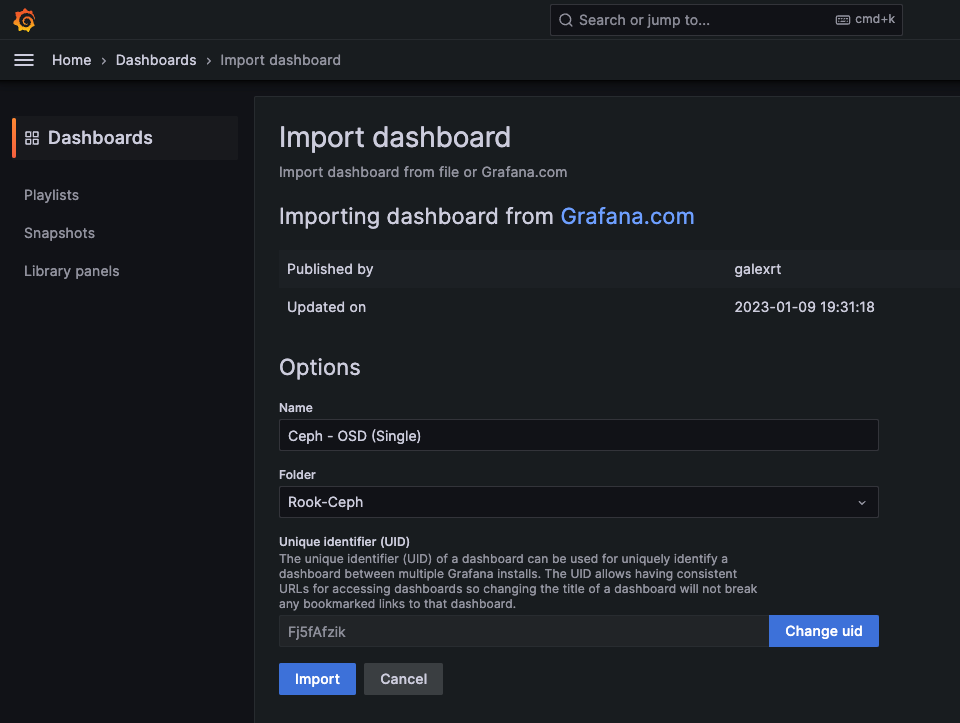

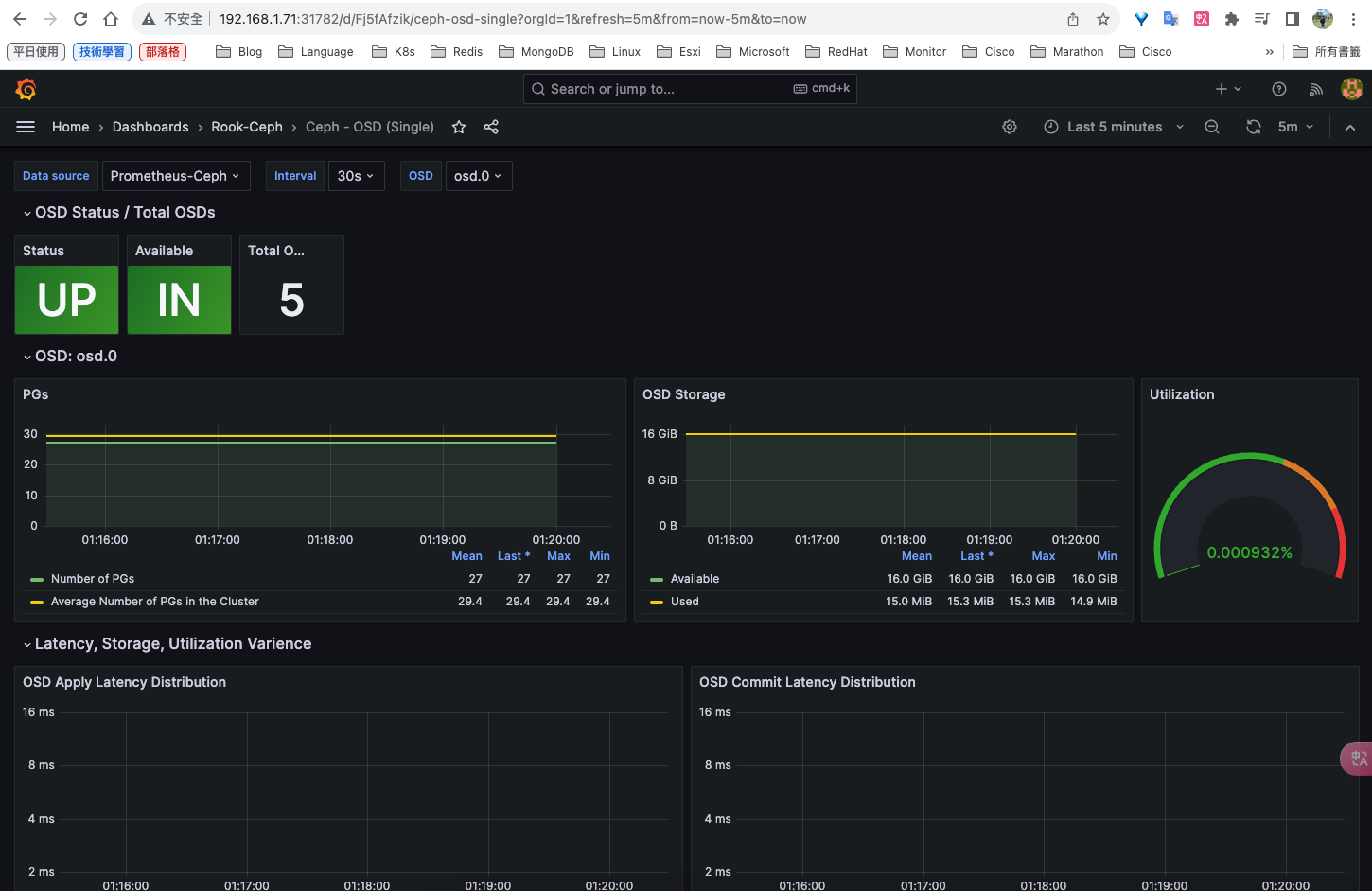

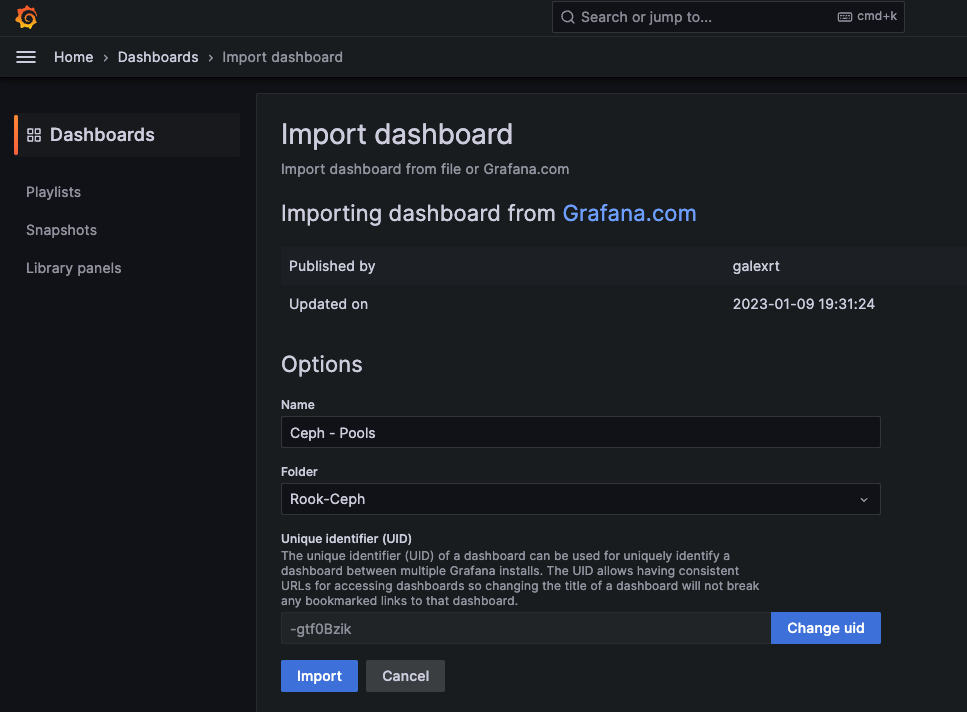

Installing the rook-ceph distributed storage system

For detailed steps, see my post: https://blog.goldfishbrain-fighting.com/2023/kubernetes-rook-ceph/

root@k8s-master71u:~# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINTS

loop0 7:0 0 63.4M 1 loop /snap/core20/1974

loop1 7:1 0 63.5M 1 loop /snap/core20/2015

loop2 7:2 0 111.9M 1 loop /snap/lxd/24322

loop3 7:3 0 40.8M 1 loop /snap/snapd/20092

loop4 7:4 0 40.9M 1 loop /snap/snapd/20290

sda 8:0 0 50G 0 disk

├─sda1 8:1 0 1M 0 part

├─sda2 8:2 0 2G 0 part /boot

└─sda3 8:3 0 48G 0 part

└─ubuntu--vg-root

253:0 0 48G 0 lvm /var/lib/kubelet/pods/ce7174f8-ad8f-4f42-8e58-2abca8c91424/volume-subpaths/tigera-ca-bundle/calico-node/1

/

sdb 8:16 0 16G 0 disk

sr0 11:0 1 1024M 0 rom

root@k8s-master71u:~# lsblk -f

NAME FSTYPE FSVER LABEL UUID FSAVAIL FSUSE% MOUNTPOINTS

loop0

squash 4.0 0 100% /snap/core20/1974

loop1

squash 4.0 0 100% /snap/core20/2015

loop2

squash 4.0 0 100% /snap/lxd/24322

loop3

squash 4.0 0 100% /snap/snapd/20092

loop4

squash 4.0 0 100% /snap/snapd/20290

sda

├─sda1

│

├─sda2

│ xfs f681192a-1cf2-4362-a74c-745374011700 1.8G 9% /boot

└─sda3

LVM2_m LVM2 JEduSv-mV9g-tzdJ-sYEc-6piR-6nAo-48Srdh

└─ubuntu--vg-root

xfs 19cd87ec-9741-4295-9762-e87fb4f472c8 37.9G 21% /var/lib/kubelet/pods/ce7174f8-ad8f-4f42-8e58-2abca8c91424/volume-subpaths/tigera-ca-bundle/calico-node/1

/

sdb

root@k8s-master71u:~# git clone --single-branch --branch v1.12.8 https://github.com/rook/rook.git

root@k8s-master71u:~# cd rook/deploy/examples

root@k8s-master71u:~/rook/deploy/examples# kubectl create -f crds.yaml

customresourcedefinition.apiextensions.k8s.io/cephblockpoolradosnamespaces.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephblockpools.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephbucketnotifications.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephbuckettopics.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephclients.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephclusters.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephcosidrivers.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephfilesystemmirrors.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephfilesystems.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephfilesystemsubvolumegroups.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephnfses.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephobjectrealms.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephobjectstores.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephobjectstoreusers.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephobjectzonegroups.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephobjectzones.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/cephrbdmirrors.ceph.rook.io created

customresourcedefinition.apiextensions.k8s.io/objectbucketclaims.objectbucket.io created

customresourcedefinition.apiextensions.k8s.io/objectbuckets.objectbucket.io created

root@k8s-master71u:~/rook/deploy/examples# kubectl create -f common.yaml

namespace/rook-ceph created

clusterrole.rbac.authorization.k8s.io/cephfs-csi-nodeplugin created

clusterrole.rbac.authorization.k8s.io/cephfs-external-provisioner-runner created

clusterrole.rbac.authorization.k8s.io/objectstorage-provisioner-role created

clusterrole.rbac.authorization.k8s.io/rbd-csi-nodeplugin created

clusterrole.rbac.authorization.k8s.io/rbd-external-provisioner-runner created

clusterrole.rbac.authorization.k8s.io/rook-ceph-cluster-mgmt created

clusterrole.rbac.authorization.k8s.io/rook-ceph-global created

clusterrole.rbac.authorization.k8s.io/rook-ceph-mgr-cluster created

clusterrole.rbac.authorization.k8s.io/rook-ceph-mgr-system created

clusterrole.rbac.authorization.k8s.io/rook-ceph-object-bucket created

clusterrole.rbac.authorization.k8s.io/rook-ceph-osd created

clusterrole.rbac.authorization.k8s.io/rook-ceph-system created

clusterrolebinding.rbac.authorization.k8s.io/cephfs-csi-nodeplugin-role created

clusterrolebinding.rbac.authorization.k8s.io/cephfs-csi-provisioner-role created

clusterrolebinding.rbac.authorization.k8s.io/objectstorage-provisioner-role-binding created

clusterrolebinding.rbac.authorization.k8s.io/rbd-csi-nodeplugin created

clusterrolebinding.rbac.authorization.k8s.io/rbd-csi-provisioner-role created

clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-global created

clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-mgr-cluster created

clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-object-bucket created

clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-osd created

clusterrolebinding.rbac.authorization.k8s.io/rook-ceph-system created

role.rbac.authorization.k8s.io/cephfs-external-provisioner-cfg created

role.rbac.authorization.k8s.io/rbd-csi-nodeplugin created

role.rbac.authorization.k8s.io/rbd-external-provisioner-cfg created

role.rbac.authorization.k8s.io/rook-ceph-cmd-reporter created

role.rbac.authorization.k8s.io/rook-ceph-mgr created

role.rbac.authorization.k8s.io/rook-ceph-osd created

role.rbac.authorization.k8s.io/rook-ceph-purge-osd created

role.rbac.authorization.k8s.io/rook-ceph-rgw created

role.rbac.authorization.k8s.io/rook-ceph-system created

rolebinding.rbac.authorization.k8s.io/cephfs-csi-provisioner-role-cfg created

rolebinding.rbac.authorization.k8s.io/rbd-csi-nodeplugin-role-cfg created

rolebinding.rbac.authorization.k8s.io/rbd-csi-provisioner-role-cfg created

rolebinding.rbac.authorization.k8s.io/rook-ceph-cluster-mgmt created

rolebinding.rbac.authorization.k8s.io/rook-ceph-cmd-reporter created

rolebinding.rbac.authorization.k8s.io/rook-ceph-mgr created

rolebinding.rbac.authorization.k8s.io/rook-ceph-mgr-system created

rolebinding.rbac.authorization.k8s.io/rook-ceph-osd created

rolebinding.rbac.authorization.k8s.io/rook-ceph-purge-osd created

rolebinding.rbac.authorization.k8s.io/rook-ceph-rgw created

rolebinding.rbac.authorization.k8s.io/rook-ceph-system created

serviceaccount/objectstorage-provisioner created

serviceaccount/rook-ceph-cmd-reporter created

serviceaccount/rook-ceph-mgr created

serviceaccount/rook-ceph-osd created

serviceaccount/rook-ceph-purge-osd created

serviceaccount/rook-ceph-rgw created

serviceaccount/rook-ceph-system created

serviceaccount/rook-csi-cephfs-plugin-sa created

serviceaccount/rook-csi-cephfs-provisioner-sa created

serviceaccount/rook-csi-rbd-plugin-sa created

serviceaccount/rook-csi-rbd-provisioner-sa created

root@k8s-master71u:~/rook/deploy/examples# kubectl create -f operator.yaml

configmap/rook-ceph-operator-config created

deployment.apps/rook-ceph-operator created

root@k8s-master71u:~/rook/deploy/examples# kubectl get deployments.apps -n rook-ceph

NAME READY UP-TO-DATE AVAILABLE AGE

rook-ceph-operator 1/1 1 1 22s

root@k8s-master71u:~/rook/deploy/examples# kubectl get pod -n rook-ceph

NAME READY STATUS RESTARTS AGE

rook-ceph-operator-58775c8bdf-vmdkn 1/1 Running 0 39s

root@k8s-master71u:~/rook/deploy/examples# vim cluster.yaml

# Role scheduling (placement)
  placement:
    mon:
      nodeAffinity:
        requiredDuringSchedulingIgnoredDuringExecution:
          nodeSelectorTerms:
          - matchExpressions:
            - key: ceph-mon
              operator: In
              values:
              - enabled
      tolerations:
      - effect: NoSchedule
        operator: Exists
    mgr:
      nodeAffinity:
        requiredDuringSchedulingIgnoredDuringExecution:
          nodeSelectorTerms:
          - matchExpressions:
            - key: ceph-mgr
              operator: In
              values:
              - enabled
      tolerations:
      - effect: NoSchedule
        operator: Exists
    osd:
      nodeAffinity:
        requiredDuringSchedulingIgnoredDuringExecution:
          nodeSelectorTerms:
          - matchExpressions:
            - key: ceph-osd
              operator: In
              values:
              - enabled
      tolerations:
      - effect: NoSchedule
        operator: Exists
    prepareosd:
      nodeAffinity:
        requiredDuringSchedulingIgnoredDuringExecution:
          nodeSelectorTerms:
          - matchExpressions:
            - key: ceph-osd
              operator: In
              values:
              - enabled
      tolerations:
      - effect: NoSchedule
        operator: Exists

# Role resources
  resources:
    mon:
      limits:
        cpu: "1000m"
        memory: "2048Mi"
      requests:
        cpu: "1000m"
        memory: "2048Mi"
    mgr:
      limits:
        cpu: "1000m"
        memory: "1024Mi"
      requests:
        cpu: "1000m"
        memory: "1024Mi"
    osd:
      limits:
        cpu: "1000m"
        memory: "2048Mi"
      requests:
        cpu: "1000m"
        memory: "2048Mi"

# Disk scheduling (the nodes list sits under storage)
    nodes:
    - name: "k8s-master71u"
      devices:
      - name: "sdb"
        config:
          storeType: bluestore
          journalSizeMB: "4096"
    - name: "k8s-master72u"
      devices:
      - name: "sdb"
        config:
          storeType: bluestore
          journalSizeMB: "4096"
    - name: "k8s-master73u"
      devices:
      - name: "sdb"
        config:
          storeType: bluestore
          journalSizeMB: "4096"
    - name: "k8s-node75u"
      devices:
      - name: "sdb"
        config:
          storeType: bluestore
          journalSizeMB: "4096"
    - name: "k8s-node76u"
      devices:
      - name: "sdb"
        config:
          storeType: bluestore
          journalSizeMB: "4096"

# Disable automatic use of all nodes and disks
  storage: # cluster level storage configuration and selection
    # Set both options to false so that nodes and devices can be listed explicitly
    useAllNodes: false
    useAllDevices: false

root@k8s-master71u:~/rook/deploy/examples# kubectl label node k8s-master71u ceph-mon=enabled

node/k8s-master71u labeled

root@k8s-master71u:~/rook/deploy/examples# kubectl label node k8s-master72u ceph-mon=enabled

node/k8s-master72u labeled

root@k8s-master71u:~/rook/deploy/examples# kubectl label node k8s-master73u ceph-mon=enabled

node/k8s-master73u labeled

root@k8s-master71u:~/rook/deploy/examples# kubectl label node k8s-master71u ceph-mgr=enabled

node/k8s-master71u labeled

root@k8s-master71u:~/rook/deploy/examples# kubectl label node k8s-master72u ceph-mgr=enabled

node/k8s-master72u labeled

root@k8s-master71u:~/rook/deploy/examples# kubectl label node k8s-master73u ceph-mgr=enabled

node/k8s-master73u labeled

root@k8s-master71u:~/rook/deploy/examples# kubectl label node k8s-master71u ceph-osd=enabled

node/k8s-master71u labeled

root@k8s-master71u:~/rook/deploy/examples# kubectl label node k8s-master72u ceph-osd=enabled

node/k8s-master72u labeled

root@k8s-master71u:~/rook/deploy/examples# kubectl label node k8s-master73u ceph-osd=enabled

node/k8s-master73u labeled

root@k8s-master71u:~/rook/deploy/examples# kubectl label node k8s-node75u ceph-osd=enabled

node/k8s-node75u labeled

root@k8s-master71u:~/rook/deploy/examples# kubectl label node k8s-node76u ceph-osd=enabled

node/k8s-node76u labeled
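
The eleven label commands above follow one pattern, so they can be generated in a loop. A minimal sketch; run, label_nodes, and label_all are hypothetical helpers, and DRY_RUN=1 prints the kubectl commands instead of executing them.

```shell
# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Apply LABEL=enabled to every node named after the first argument.
label_nodes() {
  label=$1
  shift
  for node in "$@"; do
    run kubectl label node "$node" "$label=enabled"
  done
}

masters="k8s-master71u k8s-master72u k8s-master73u"
workers="k8s-node75u k8s-node76u"

# mon and mgr run on the masters only; OSDs run on masters and workers.
label_all() {
  label_nodes ceph-mon $masters
  label_nodes ceph-mgr $masters
  label_nodes ceph-osd $masters $workers
}

# Usage: DRY_RUN=1 label_all   (review the output, then run label_all)
```

These labels are what the nodeAffinity rules in cluster.yaml match on, so a node without ceph-osd=enabled will not receive an OSD even if it is listed under nodes.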

root@k8s-master71u:~/rook/deploy/examples# kubectl create -f cluster.yaml

cephcluster.ceph.rook.io/rook-ceph created

root@k8s-master71u:~/rook/deploy/examples# kubectl get pod -n rook-ceph

NAME READY STATUS RESTARTS AGE

csi-cephfsplugin-nttgx 2/2 Running 0 9m33s

csi-cephfsplugin-provisioner-668dfcf95b-dsxdk 5/5 Running 0 9m33s

csi-cephfsplugin-provisioner-668dfcf95b-rgfv6 5/5 Running 0 9m33s

csi-cephfsplugin-s6sc9 2/2 Running 0 9m33s

csi-rbdplugin-bjv2p 2/2 Running 0 9m33s

csi-rbdplugin-provisioner-5b78f67bbb-8ts8q 5/5 Running 0 9m33s

csi-rbdplugin-provisioner-5b78f67bbb-rr5tn 5/5 Running 0 9m33s

csi-rbdplugin-xb99w 2/2 Running 0 9m33s

rook-ceph-crashcollector-k8s-master71u-55fcdbd66c-plz8m 1/1 Running 0 2m57s

rook-ceph-crashcollector-k8s-master72u-675646bf64-8tl2q 1/1 Running 0 3m18s

rook-ceph-crashcollector-k8s-master73u-84fdc469f7-9fkgh 1/1 Running 0 2m56s

rook-ceph-crashcollector-k8s-node75u-bbc794dd9-rv4pw 1/1 Running 0 11s

rook-ceph-crashcollector-k8s-node76u-5fd59cd68b-wljnj 1/1 Running 0 73s

rook-ceph-mgr-a-59f556dbc9-695bv 3/3 Running 0 3m33s

rook-ceph-mgr-b-f4c7855d-j69fj 3/3 Running 0 3m33s

rook-ceph-mon-a-b657589fb-c7c4f 2/2 Running 0 8m2s

rook-ceph-mon-b-6bdf47675f-tbhg8 2/2 Running 0 3m59s

rook-ceph-mon-c-5dcbc556d7-9zmmn 2/2 Running 0 3m48s

rook-ceph-operator-58775c8bdf-m29b4 1/1 Running 0 14m

rook-ceph-osd-0-5b8dfc978f-fwlsv 2/2 Running 0 2m58s

rook-ceph-osd-1-c9544b764-gzcmd 2/2 Running 0 2m57s

rook-ceph-osd-2-897687cc9-mrntq 2/2 Running 0 2m56s

rook-ceph-osd-3-6c7c659454-9wk9z 2/2 Running 0 73s

rook-ceph-osd-4-68dcf49f6-vcpsj 1/2 Running 0 12s

rook-ceph-osd-prepare-k8s-master71u-6fjrl 0/1 Completed 0 3m11s

rook-ceph-osd-prepare-k8s-master72u-5mcbk 0/1 Completed 0 3m10s

rook-ceph-osd-prepare-k8s-master73u-cjklw 0/1 Completed 0 3m9s

rook-ceph-osd-prepare-k8s-node75u-tk24w 0/1 Completed 0 3m8s

rook-ceph-osd-prepare-k8s-node76u-7hqrg 0/1 Completed 0 3m8s

root@k8s-master71u:~/rook/deploy/examples# kubectl create -f toolbox.yaml

deployment.apps/rook-ceph-tools created

root@k8s-master71u:~/rook/deploy/examples# kubectl get pod -n rook-ceph | grep -i tool

rook-ceph-tools-7cd4cd9c9c-54zk2 1/1 Running 0 34s

root@k8s-master71u:~/rook/deploy/examples# kubectl exec -it rook-ceph-tools-7cd4cd9c9c-54zk2 -n rook-ceph -- /bin/bash

bash-4.4$ ceph -s

  cluster:
    id:     9da44d3d-58d1-402d-8f8b-66bd89a869a4
    health: HEALTH_OK

  services:
    mon: 3 daemons, quorum a,b,c (age 5m)
    mgr: a(active, starting, since 11s), standbys: b
    osd: 5 osds: 5 up (since 93s), 5 in (since 117s)

  data:
    pools:   1 pools, 1 pgs
    objects: 2 objects, 449 KiB
    usage:   41 MiB used, 80 GiB / 80 GiB avail
    pgs:     1 active+clean

bash-4.4$ ceph status

  cluster:
    id:     9da44d3d-58d1-402d-8f8b-66bd89a869a4
    health: HEALTH_OK

  services:
    mon: 3 daemons, quorum a,b,c (age 5m)
    mgr: a(active, since 10s), standbys: b
    osd: 5 osds: 5 up (since 104s), 5 in (since 2m)

  data:
    pools:   1 pools, 1 pgs
    objects: 2 objects, 449 KiB
    usage:   41 MiB used, 80 GiB / 80 GiB avail
    pgs:     1 active+clean

bash-4.4$ ceph osd status

ID  HOST           USED   AVAIL  WR OPS  WR DATA  RD OPS  RD DATA  STATE
0   k8s-master71u  8788k  15.9G  0       0        0       0        exists,up
1   k8s-master72u  8272k  15.9G  0       0        0       0        exists,up
2   k8s-master73u  8788k  15.9G  0       0        0       0        exists,up
3   k8s-node76u    8404k  15.9G  0       0        0       0        exists,up
4   k8s-node75u    7888k  15.9G  0       0        0       0        exists,up

bash-4.4$ ceph df

--- RAW STORAGE ---
CLASS  SIZE    AVAIL   USED    RAW USED  %RAW USED
ssd    80 GiB  80 GiB  41 MiB  41 MiB    0.05
TOTAL  80 GiB  80 GiB  41 MiB  41 MiB    0.05

--- POOLS ---
POOL  ID  PGS  STORED   OBJECTS  USED     %USED  MAX AVAIL
.mgr  1   1    449 KiB  2        449 KiB  0      25 GiB

bash-4.4$ rados df

POOL_NAME  USED     OBJECTS  CLONES  COPIES  MISSING_ON_PRIMARY  UNFOUND  DEGRADED  RD_OPS  RD       WR_OPS  WR       USED COMPR  UNDER COMPR
.mgr       449 KiB  2        0       6       0                   0        0         288     494 KiB  153     1.3 MiB  0 B         0 B

total_objects  2
total_used     41 MiB
total_avail    80 GiB
total_space    80 GiB

bash-4.4$ cat /etc/ceph/ceph.conf

[global]

mon_host = 10.202.156.149:6789,10.202.75.120:6789,10.202.62.213:6789

[client.admin]

keyring = /etc/ceph/keyring

bash-4.4$ cat /etc/ceph/keyring

[client.admin]

key = AQCtp2xlw3soGRAAVvoyqWe8wzvkkt26JQEGtQ==

bash-4.4$ exit
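The toolbox session above first looks up the generated pod name and then opens an interactive shell. As an alternative sketch, `kubectl exec` also accepts a `deploy/<name>` target, so single commands can be run without copying the pod name. The wrapper name `toolbox` is hypothetical, and it assumes the deployment keeps the name `rook-ceph-tools` created by toolbox.yaml above.

```shell
# Run one command inside the Rook toolbox without looking up the pod name.
# Assumes the rook-ceph namespace and the rook-ceph-tools deployment
# shown in the transcript.
toolbox() {
  kubectl -n rook-ceph exec deploy/rook-ceph-tools -- "$@"
}

# Usage against a live cluster (not run here):
#   toolbox ceph status
#   toolbox ceph osd status
#   toolbox ceph df
```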

root@k8s-master71u:~/rook/deploy/examples# apt update

root@k8s-master71u:~/rook/deploy/examples# apt install ceph-common

root@k8s-master71u:~/rook/deploy/examples# vim /etc/ceph/ceph.conf

[global]

mon_host = 10.202.156.149:6789,10.202.75.120:6789,10.202.62.213:6789

[client.admin]

keyring = /etc/ceph/keyring

root@k8s-master71u:~/rook/deploy/examples# vim /etc/ceph/keyring

[client.admin]

key = AQCtp2xlw3soGRAAVvoyqWe8wzvkkt26JQEGtQ==
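The two files above were typed into vim by hand from the toolbox output. A minimal sketch of writing them from a script follows, under stated assumptions: the function name `write_ceph_client_conf` is hypothetical, and creating the keyring with owner-only permissions is an addition that the transcript does not perform.

```shell
# Write a minimal Ceph client configuration into a directory.
#   $1 = target directory (the transcript uses /etc/ceph)
#   $2 = mon_host value
#   $3 = client.admin key
write_ceph_client_conf() {
  dir=$1 mon_host=$2 key=$3
  mkdir -p "$dir" || return 1
  printf '[global]\nmon_host = %s\n\n[client.admin]\nkeyring = %s/keyring\n' \
    "$mon_host" "$dir" > "$dir/ceph.conf" || return 1
  # Assumption: restrict the keyring to its owner. umask only affects a
  # newly created file, so an existing keyring keeps its current mode.
  ( umask 077 && printf '[client.admin]\nkey = %s\n' "$key" > "$dir/keyring" )
}

# Usage (not run here), with the values printed by the toolbox:
#   write_ceph_client_conf /etc/ceph "<mon_host from ceph.conf>" "<key from keyring>"
```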

root@k8s-master71u:~/rook/deploy/examples# ceph -s

  cluster:
    id:     9da44d3d-58d1-402d-8f8b-66bd89a869a4
    health: HEALTH_OK

  services:
    mon: 3 daemons, quorum a,b,c (age 9m)
    mgr: a(active, since 4m), standbys: b
    osd: 5 osds: 5 up (since 6m), 5 in (since 6m)

  data:
    pools:   1 pools, 1 pgs
    objects: 2 objects, 449 KiB
    usage:   41 MiB used, 80 GiB / 80 GiB avail
    pgs:     1 active+clean

CephFS

root@k8s-master71u:~/rook/deploy/examples# kubectl label node k8s-master71u ceph-mds=enabled

root@k8s-master71u:~/rook/deploy/examples# kubectl label node k8s-master72u ceph-mds=enabled

root@k8s-master71u:~/rook/deploy/examples# kubectl label node k8s-master73u ceph-mds=enabled

root@k8s-master71u:~/rook/deploy/examples# kubectl label node k8s-node75u ceph-mds=enabled

root@k8s-master71u:~/rook/deploy/examples# kubectl label node k8s-node76u ceph-mds=enabled
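The five label commands above differ only in the node name, so they can be collapsed into a loop. This is a small sketch; the function name `label_mds_nodes` is hypothetical, while the label `ceph-mds=enabled` is the one used in the transcript.

```shell
# Apply the ceph-mds=enabled label to every node passed as an argument.
# Stops at the first node for which kubectl reports an error.
label_mds_nodes() {
  for node in "$@"; do
    kubectl label node "$node" ceph-mds=enabled || return 1
  done
}

# Usage against a live cluster (not run here):
#   label_mds_nodes k8s-master71u k8s-master72u k8s-master73u k8s-node75u k8s-node76u
```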

root@k8s-master71u:~/rook/deploy/examples# vim filesystem.yaml

activeCount: 2
placement:
  nodeAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
      nodeSelectorTerms:
      - matchExpressions:
        - key: ceph-mds
          operator: In
          values:
          - enabled
  tolerations:
  - effect: NoSchedule
    operator: Exists
  podAntiAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
    - labelSelector:
        matchExpressions:
        - key: app
          operator: In
          values:
          - rook-ceph-mds
      topologyKey: kubernetes.io/hostname
    preferredDuringSchedulingIgnoredDuringExecution:
    - weight: 100
      podAffinityTerm:
        labelSelector:
          matchExpressions:
          - key: app
            operator: In
            values:
            - rook-ceph-mds
        topologyKey: topology.kubernetes.io/zone

root@k8s-master71u:~/rook/deploy/examples# kubectl create -f filesystem.yaml

root@k8s-master71u:~/rook/deploy/examples# kubectl get pod -n rook-ceph -o wide | grep -i rook-ceph-mds

rook-ceph-mds-myfs-a-fcf6b94fb-wskkq    2/2   Running   0   18s   10.201.126.14    k8s-master72u   <none>   <none>
rook-ceph-mds-myfs-b-55d97767d4-z872h   2/2   Running   0   16s   10.201.255.227   k8s-node76u     <none>   <none>
rook-ceph-mds-myfs-c-5c9c94fbfb-chfnv   2/2   Running   0   13s   10.201.14.164    k8s-node75u     <none>   <none>
rook-ceph-mds-myfs-d-69cc457f9b-hq7vv   2/2   Running   0   10s   10.201.133.13    k8s-master71u   <none>   <none>
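The required podAntiAffinity rule with topologyKey kubernetes.io/hostname is why the four MDS pods land on four different nodes. A sketch of checking that mechanically is below. The function name `nodes_with_multiple_pods` is hypothetical; it expects two columns per line (pod name, node name), which `kubectl` can produce with `-o custom-columns`. The selector `app=rook-ceph-mds` is the label referenced in the anti-affinity rule above.

```shell
# Read "POD NODE" pairs on stdin and print every node that hosts more than
# one of the listed pods. No output means the pods are spread one per node.
nodes_with_multiple_pods() {
  awk 'NF >= 2 { count[$2]++ }
       END { for (n in count) if (count[n] > 1) print n }'
}

# Usage against a live cluster (not run here):
#   kubectl get pod -n rook-ceph -l app=rook-ceph-mds --no-headers \
#     -o custom-columns=NAME:.metadata.name,NODE:.spec.nodeName \
#     | nodes_with_multiple_pods
```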

root@k8s-master71u:~/rook/deploy/examples# ceph -s

  cluster:
    id:     9da44d3d-58d1-402d-8f8b-66bd89a869a4
    health: HEALTH_OK

  services:
    mon: 3 daemons, quorum a,b,c (age 13m)
    mgr: a(active, since 8m), standbys: b
    mds: 2/2 daemons up, 2 hot standby
    osd: 5 osds: 5 up (since 9m), 5 in (since 10m)

  data:
    volumes: 1/1 healthy
    pools:   3 pools, 3 pgs
    objects: 41 objects, 452 KiB
    usage:   43 MiB used, 80 GiB / 80 GiB avail
    pgs:     3 active+clean

  io:
    client:   2.2 KiB/s rd, 2.4 KiB/s wr, 2 op/s rd, 8 op/s wr
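The transcript re-runs `ceph -s` after each change and reads the health line by eye. A sketch of polling instead is shown here, using `ceph health`, which prints the status string on its own. The function name `wait_for_health_ok` and the default of 30 attempts at 10-second intervals are assumptions chosen for illustration.

```shell
# Poll `ceph health` until it reports HEALTH_OK or the attempts run out.
#   $1 = maximum attempts (assumed default: 30)
#   $2 = seconds to sleep between attempts (assumed default: 10)
wait_for_health_ok() {
  attempts=${1:-30} delay=${2:-10}
  i=0
  while [ "$i" -lt "$attempts" ]; do
    case "$(ceph health 2>/dev/null)" in
      HEALTH_OK*) return 0 ;;
    esac
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}

# Usage on a host with ceph-common configured as above (not run here):
#   wait_for_health_ok && echo "cluster is healthy"
```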

root@k8s-master71u:~/rook/deploy/examples# cd csi/cephfs

root@k8s-master71u:~/rook/deploy/examples/csi/cephfs# kubectl create -f storageclass.yaml

storageclass.storage.k8s.io/rook-cephfs created

root@k8s-master71u:~/rook/deploy/examples/csi/cephfs# kubectl get storageclasses.storage.k8s.io

NAME          PROVISIONER                     RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
rook-cephfs   rook-ceph.cephfs.csi.ceph.com   Delete          Immediate           true                   5s

root@k8s-master71u:~/rook/deploy/examples/csi/cephfs# ceph fs ls

name: myfs, metadata pool: myfs-metadata, data pools: [myfs-replicated ]

Verify that a PVC with storageClassName: "rook-cephfs" gets its PV provisioned automatically

root@k8s-master71u:~# cat sc.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-sc
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 3
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        volumeMounts:
        - name: wwwroot
          mountPath: /usr/share/nginx/html
      volumes:
      - name: wwwroot
        persistentVolumeClaim:
          claimName: web-sc
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: web-sc
spec:
  storageClassName: "rook-cephfs"
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 5Gi

root@k8s-master71u:~# kubectl create -f sc.yaml

root@k8s-master71u:~# kubectl get pod,pvc,pv

NAME                          READY   STATUS    RESTARTS      AGE
pod/test-nginx                1/1     Running   2 (33m ago)   81m
pod/web-sc-7b6c54fbb9-55rtb   1/1     Running   0             34s
pod/web-sc-7b6c54fbb9-fxx6r   1/1     Running   0             34s
pod/web-sc-7b6c54fbb9-vlpwm   1/1     Running   0             34s
pod/web2-5d48fb75c5-dt5xd     1/1     Running   2 (33m ago)   80m
pod/web2-5d48fb75c5-ggmrz     1/1     Running   2 (33m ago)   80m
pod/web2-5d48fb75c5-jsvck     1/1     Running   2 (33m ago)   80m

NAME                           STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
persistentvolumeclaim/web-sc   Bound    pvc-ec58bbde-7e2c-4cc4-be13-223d10de5d99   5Gi        RWX            rook-cephfs    34s

NAME                                                        CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM            STORAGECLASS   REASON   AGE
persistentvolume/pvc-ec58bbde-7e2c-4cc4-be13-223d10de5d99   5Gi        RWX            Delete           Bound    default/web-sc   rook-cephfs             34s
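The output above shows the claim already Bound 34s after creation. In a script, the same check can be made explicit by reading the claim's phase before touching the pods. The following is a sketch only: the function name `wait_for_pvc_bound` and its default retry values are assumptions, while `-o jsonpath='{.status.phase}'` is a standard way to read the phase from a PVC.

```shell
# Poll a PersistentVolumeClaim until its phase is Bound.
#   $1 = PVC name
#   $2 = namespace (assumed default: default)
#   $3 = maximum attempts (assumed default: 20)
#   $4 = seconds between attempts (assumed default: 3)
wait_for_pvc_bound() {
  pvc=$1 ns=${2:-default} attempts=${3:-20} delay=${4:-3}
  i=0
  while [ "$i" -lt "$attempts" ]; do
    phase=$(kubectl get pvc "$pvc" -n "$ns" \
              -o jsonpath='{.status.phase}' 2>/dev/null) || phase=""
    if [ "$phase" = "Bound" ]; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}

# Usage against a live cluster (not run here):
#   wait_for_pvc_bound web-sc default
```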

root@k8s-master71u:~# kubectl exec -ti web-sc-7b6c54fbb9-55rtb -- /bin/bash

root@web-sc-7b6c54fbb9-55rtb:/#

root@web-sc-7b6c54fbb9-55rtb:/# df -h

Filesystem Size Used Avail Use% Mounted on

overlay 48G 20G 29G 41% /

tmpfs 64M 0 64M 0% /dev

/dev/mapper/ubuntu--vg-root 48G 20G 29G 41% /etc/hosts

shm 64M 0 64M 0% /dev/shm

10.202.75.120:6789,10.202.62.213:6789,10.202.156.149:6789:/volumes/csi/csi-vol-39f4781c-a08b-4b30-a2f9-5d244cd4f7af/58cdd551-8197-41f0-875b-a050fb0135aa 5.0G 0 5.0G 0% /usr/share/nginx/html

tmpfs 7.7G 12K 7.7G 1% /run/secrets/kubernetes.io/serviceaccount

tmpfs 3.9G 0 3.9G 0% /proc/acpi

tmpfs 3.9G 0 3.9G 0% /proc/scsi

tmpfs 3.9G 0 3.9G 0% /sys/firmware

root@web-sc-7b6c54fbb9-55rtb:/#

root@web-sc-7b6c54fbb9-55rtb:/# cd /usr/share/nginx/html/

root@web-sc-7b6c54fbb9-55rtb:/usr/share/nginx/html# touch 123

root@web-sc-7b6c54fbb9-55rtb:/usr/share/nginx/html# ls

123

root@web-sc-7b6c54fbb9-55rtb:/usr/share/nginx/html# exit

exit
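The manual check here creates a file through one replica and then looks for it from another, which is what ReadWriteMany on CephFS should allow. A sketch of the same test as a script is below. The function name `check_shared_volume` and the marker file name are hypothetical, and the script assumes the container image provides `touch`, `test`, and `rm`.

```shell
# Create a marker file through the first pod, then confirm that every
# listed pod can see it on the shared volume.
#   $1 = directory where the shared volume is mounted
#   remaining arguments = pod names
check_shared_volume() {
  dir=$1; shift
  first=$1
  marker="$dir/rwx-check-$$"   # hypothetical name, unique per shell PID
  kubectl exec "$first" -- touch "$marker" || return 1
  rc=0
  for pod in "$@"; do
    if kubectl exec "$pod" -- test -e "$marker"; then
      echo "$pod: ok"
    else
      echo "$pod: marker missing"
      rc=1
    fi
  done
  kubectl exec "$first" -- rm -f "$marker"
  return "$rc"
}

# Usage against a live cluster (not run here):
#   check_shared_volume /usr/share/nginx/html \
#     web-sc-7b6c54fbb9-55rtb web-sc-7b6c54fbb9-fxx6r web-sc-7b6c54fbb9-vlpwm
```

The marker is removed afterwards so the web root is left as it was found.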

root@k8s-master71u:~# kubectl exec -ti web-sc-7b6c54fbb9-fxx6r -- /bin/bash

root@web-sc-7b6c54fbb9-fxx6r:/#

root@web-sc-7b6c54fbb9-fxx6r:/# df -h

Filesystem Size Used Avail Use% Mounted on

overlay 48G 20G 29G 41% /

tmpfs 64M 0 64M 0% /dev

/dev/mapper/ubuntu--vg-root 48G 20G 29G 41% /etc/hosts

shm 64M 0 64M 0% /dev/shm

10.202.75.120:6789,10.202.62.213:6789,10.202.156.149:6789:/volumes/csi/csi-vol-39f4781c-a08b-4b30-a2f9-5d244cd4f7af/58cdd551-8197-41f0-875b-a050fb0135aa 5.0G 0 5.0G 0% /usr/share/nginx/html